Markov Chain: Types, Properties and Applications

Markov chains have many applications, ranging from modeling communication networks to analyzing stock prices. They are particularly useful in modeling systems that have a finite number of states and transitions between those states and can be used to analyze and predict the long-term behaviour of such systems.

Author: Somya Dipayan

In this article, we will be discussing the Markov chain and its applications.

Table of Contents

- What is a Markov chain?

- Types of Markov chain

- Properties of Markov chain

- Applications

- Markov chain in Python

- Advantages and disadvantages

What is Markov Chain?

Markov chain refers to mathematical system that undergoes transitions from one state to other as per some probabilistic rules. The defining characteristic of this chain is that no matter how system arrived at its current state, the possible future states are fixed. The probability of transitioning to any particular state relies solely on current state and time elapsed.

For example, consider a weather model that predicts the weather for a particular region over the next week. Each day, the weather can be either sunny, cloudy, or rainy. We can represent the weather for each day as a state in the Markov chain. If we know that the weather today is sunny, we can use the probabilistic rules of the Markov chain to predict the weather tomorrow. For example, if the weather has a 60% chance of being sunny, a 30% chance of being cloudy, and a 10% chance of being rainy, then we can say that there is a 60% chance that the weather will be sunny tomorrow.

Explore deep learning courses

Best-suited Deep Learning and Neural Networks courses for you

Learn Deep Learning and Neural Networks with these high-rated online courses

Types of Markov Chain

There are several different types of Markov chains, including:

- Finite Markov chains: These are Markov chains with a finite number of states. The transition probabilities between states are fixed, and system will then eventually reach a steady state in which the probabilities of being in each state become constant. An example of a finite state Markov chain might be a model of a traffic light, with three states (red, yellow, green) and transitions governed by the rules of the traffic light.

- Infinite Markov chains: These are Markov chains with an infinite number of states. The transition probabilities between states are fixed, but the system may not reach a steady state. For example, a model of the spread of a virus through a population. The states of the Markov chain represent the number of people who have been infected at any given time, and the transitions between states are governed by the rate of infection and the rate of recovery.

- Continuous-time Markov chains (CTMC): These are Markov chains in which the transitions between states occur at random times rather than at discrete time intervals. The transition probabilities are defined by rate functions rather than probabilities. For example, the state of a CTMC could represent the number of customers in a store at a given time, and the transitions between states could represent the arrival and departure of customers. The probability of a system being in a particular state (e.g., the probability that there are a certain number of customers in the store) can be calculated using the CTMC model.

- Discrete-time Markov chains (DTMC): These are Markov chains in which the transitions between states occur at discrete time intervals. The transition probabilities are defined by a transition matrix. For example, the state of a DTMC could represent the weather on a particular day (e.g., sunny, cloudy, or rainy), and the transitions between states could represent the change in weather from one day to the next. The probability of a system being in a particular state (e.g., the probability of it being sunny on a particular day) can be calculated using the DTMC model.

Properties of Markov Chain

There are also several properties that Markov chains can have, including:

- Irreducibility: Markov chain is irreducible when it is possible to reach any state from any other state in a finite number of steps.

- Aperiodicity: A Markov chain is aperiodic when it is possible to reach any state from any other state in a finite number of steps, regardless of the starting state.

- Recurrence: A state in a Markov chain is recurrent if it is possible to return to that state in a finite number of steps.

- Transience: A state in a Markov chain is transient if it is not possible to return to that state in a finite number of steps.

- Ergodicity: A Markov chain is ergodic if it is both irreducible and aperiodic and if the long-term behaviour of the system is independent of the starting state.

- Reversibility: A Markov chain is reversible if probability of transitioning from one state to another is equal to the probability of transitioning from that state back to the original state.

Applications of Markov Chains

One common application of Markov chains is in the field of economics, where they can be used to model the behaviour of stock prices. In this case, the possible states of the system might represent upward or downward trends in the market, and the transitions between states might be influenced by various economic factors such as interest rates and the performance of individual stocks. By analyzing the probability of transitioning between different states, it is possible to make predictions about the future behaviour of the market.

Markov chains can be used for modeling biological systems, such as the spread of a disease through a population. In such case, the states of the system might represent the number of individuals in a population who are susceptible, infected, or immune to the disease, and the transitions between states might be influenced by several factors such as the rate of infection and the effectiveness of a vaccine. By analyzing the probability of transitioning between different states, it is possible to make predictions about the future spread of the disease and the effectiveness of various control measures.

In order to analyze and predict the behaviour of a Markov chain, it is necessary to define the states of the system and the transitions between those states, as well as the probability of transitioning between each pair of states. This information can be represented using a transition matrix, which is a matrix of numbers representing the probability of transitioning from one state to another.

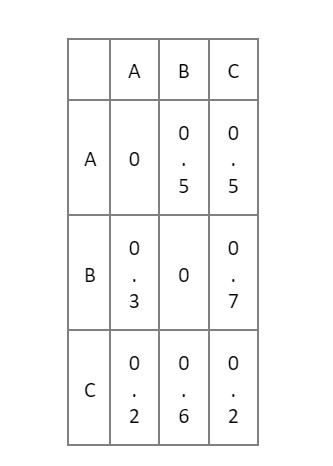

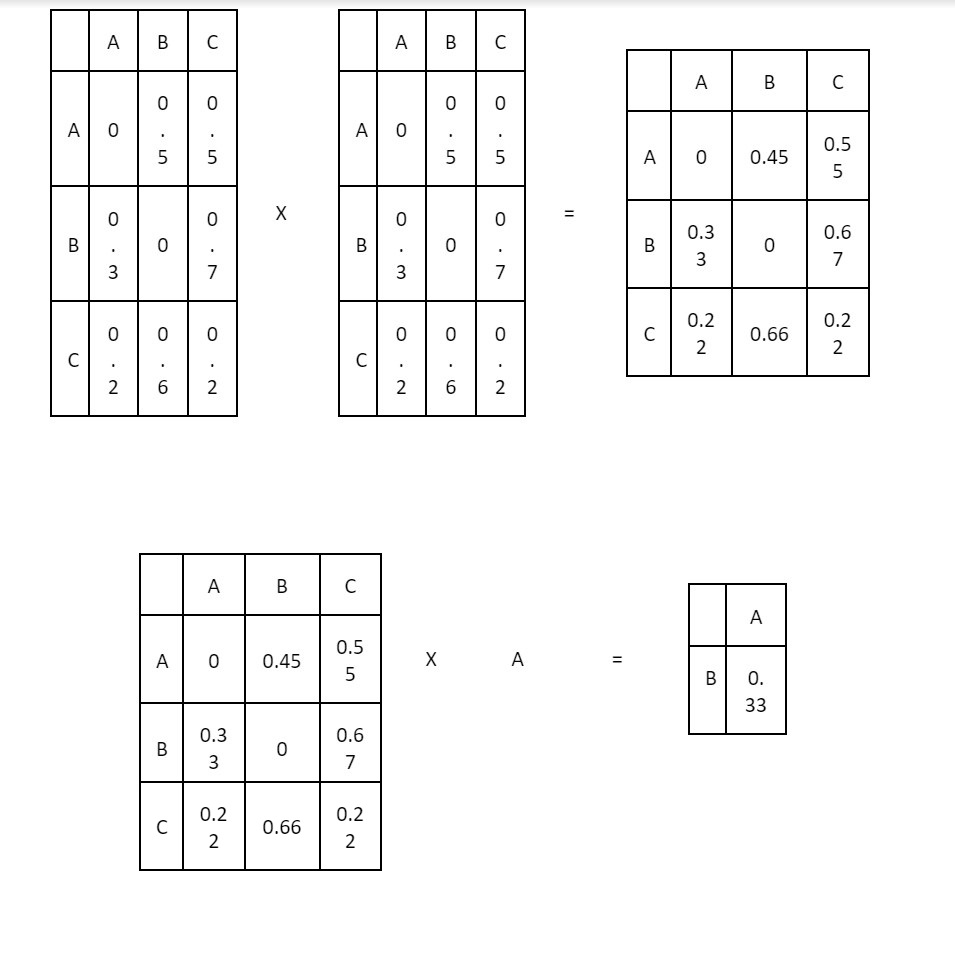

For example, consider a simple Markov chain with three states A, B, and C, and the following transition matrix:

This matrix represents the probability of transitioning from one state to another. For example, the value in the second row and third column (0.7) represents the probability of transitioning from state B to state C.

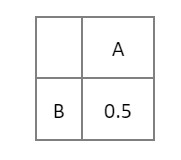

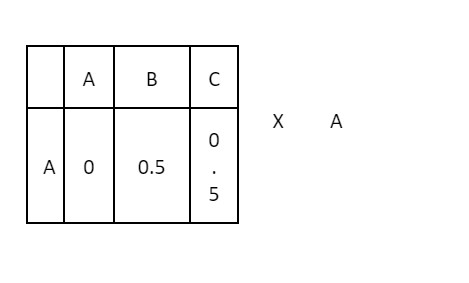

In order to analyse the behaviour of this Markov chain over time, it is necessary to define a starting state and calculate the probabilities of transitioning to other states at each time step. This can be done using matrix multiplication. For example, if the starting state is A, the probability of being in state B after one time step can be calculated as follows:

This is obtained by multiplying the transition matrix by the starting state vector:

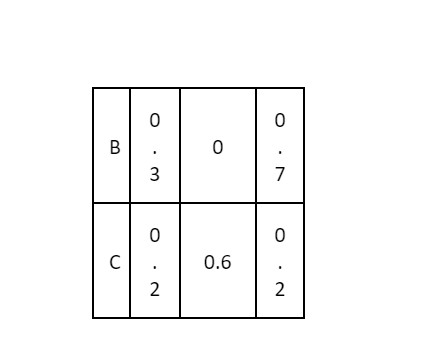

To calculate the probability of being in state B after two-time steps, we can simply multiply the transition matrix by itself and then multiply the result by the starting state vector:

This process can be repeated to calculate the probabilities of being in each state at any time step.

One important property of Markov chains is that they will eventually reach a steady state, where the probabilities of being in each state no longer change. This steady state can be calculated using matrix multiplication as well, by finding the eigenvectors of the transition matrix and normalizing them. The resulting vector represents the long-term behaviour of the Markov chain.

Markov chains have many other properties and applications, and there are many algorithms and techniques for analysing and manipulating them. They are a useful tool for modelling and analysing systems with a finite number of states and transitions between those states, and have many applications in fields such as economics, biology, and computer science.

Markov Chain in Python

Here is an example of a simple Python function that implements a Markov chain:

def markov_chain(transition_matrix, state, num_steps): """ Perform `num_steps` transitions of a Markov chain with transition matrix `transition_matrix` starting from state `state`. """ for i in range(num_steps): state = np.random.choice(len(transition_matrix), p=transition_matrix[state]) return state

This function takes as input a transition matrix and a starting state, and performs a specified number of transitions according to the probabilities in the transition matrix. It uses the ‘np.random.choice’ function from the NumPy library to select the next state according to the probabilities in the transition matrix.

Here is an example of how this function could be used to simulate a Markov chain with three states:

transition_matrix = [[0, 0.5, 0.5], [0.3, 0, 0.7], [0.2, 0.6, 0.2]]state = 0num_steps = 5

markov_chain(transition_matrix, state, num_steps)

This would perform 5 transitions of the Markov chain starting from state 0, using the transition matrix defined above. The function would return the final state after the 5 transitions.

Explore free Python courses

Advantages and Disadvantages of Markov Chain

A Markov chain undergoes transitions from one state to another as per specific probabilistic rules. The defining characteristic of a Markov chain is that no matter how the system arrived at its current state, the possible future states are fixed. This specific kind of memory-lessness is known as the “Markov property”.

Some advantages of Markov chains include:

- They can be used to model and analyse a wide variety of systems in many different fields, including economics, biology, and computer science.

- They are relatively simple to understand and use, making them a popular choice for modelling complex systems.

- They can be used to predict the long-term behaviour of a system, even if the system is subject to random fluctuations in the short term.

Some disadvantages of Markov chains include:

- They are only able to model systems that exhibit the Markov property, which means that the future state of the system is dependent only on the current state and not on the sequence of events that led to the current state.

- They can be computationally intensive, especially for large systems or systems with many possible states.

- They may not accurately capture the behaviour of systems that exhibit complex dependencies or long-range correlations.

- They may not be able to capture the full complexity of real-world systems, which can have many interacting variables and non-linear relationships.

Conclusion

In summary, Markov chains are a useful tool for modeling and analysing systems with a finite number of states and transitions among those states. They have many applications in fields such as economics, biology, and computer science, and can be represented using transition matrices or directed graphs. By analysing the probabilities of transitioning between different states, it is possible to make predictions about the long-term behaviour of the system and to understand the underlying dynamics.

Explore free online courses with certificates

_______________

Recently completed any professional course/certification from the market? Tell us what you liked or disliked in the course for more curated content.

Click here to submit its review.

FAQs

What is a Markov chain?

A Markov chain is the mathematical system that undergoes transitions from one state to another according to certain probabilistic rules. It is a type of stochastic process, meaning it involves a level of randomness.

How does a Markov chain work?

A Markov chain is made up of a set of states and transitions between those states. Probability of transitioning from one state to other is determined by the probabilities associated with the transitions. These probabilities are typically represented in a transition matrix.

What are some common uses for Markov chains?

Markov chains have a wide range of applications, including modelling random processes in physics, chemistry, biology, economics, and computer science. They are often used to model systems that exhibit temporal dependencies, where the future state of the system depends on its past states.

How do you solve a Markov chain?

There are several techniques for solving Markov chains, including finding the steady-state distribution, analysing the eigenvalues and eigenvectors of the transition matrix, and using Monte Carlo methods. The appropriate method to use will depend on the specific characteristics of the Markov chain being analysed.

What are some limitations of Markov chains?

One limitation of Markov chains is that they assume that the future state of the system only relies on current state, and not on any past states beyond the current one. This is called "memoryless" property of Markov chains. In some situations, this assumption may not hold true and the model may not accurately represent the system being modelled.

Can a Markov chain have more than one steady-state distribution?

Yes, it is possible for a Markov chain to have more than one steady-state distribution. This occurs when the Markov chain is "reducible," meaning that it is possible to partition the states into multiple subsets such that within each subset, the states communicate with each other, but there are no transitions between states in different subsets. In this case, each subset will have its own steady-state distribution.

Is it possible for a Markov chain to be periodic?

Yes, it is possible for a Markov chain to be periodic, meaning that it will return to the same state after a certain number of steps. This occurs when the transition matrix has an eigenvalue of 1 with an associated eigenvector that is not a multiple of the all-ones vector.

Can a Markov chain have more than one absorbing state?

Yes, it is possible for a Markov chain to have more than one absorbing state. The absorbing state is a state that when once reached, cannot be left. In other words, all transitions from an absorbing state have a probability of 1. If a Markov chain has multiple absorbing states, it means that there are multiple states that, once reached, the system will remain in indefinitely.

This is a collection of insightful articles from domain experts in the fields of Cloud Computing, DevOps, AWS, Data Science, Machine Learning, AI, and Natural Language Processing. The range of topics caters to upski... Read Full Bio