Spam Filtering using Bag of Words

The purpose of this blog is to explain the concept of spam filtering using Bag of Words.

We receive many emails every day, some of which are spam and useless to us. So what if we could build a spam filtering system that uses text classification to decide whether an email we have received is spam or not? That would save us a lot of time. Sounds cool? Let’s jump into spam filtering using Bag of Words.

In this post, we will discuss:

- How natural language data is processed so that it can be fed into machine learning or deep learning algorithms

- What is Bag of Words

- Implementation of Bag of Words

- Problem Statement: Spam Filtering

- Pros and Cons of Bag of Words

- Next Steps after Bag of Words

How Is Natural Language Data Processed?

Have you ever wondered how Google and YouTube understand what you are searching for? The short answer is Natural Language Processing, which raises the next question: what is Natural Language Processing, or NLP?

NLP is the domain of Artificial Intelligence in which we help computers understand what we humans are trying to say. And when it comes to computers, the one prerequisite for understanding anything is numbers, just raw numbers.

So our first question is: how can we create numbers out of our natural language data?

To understand the problem, let’s take a scenario. If you want to buy a brand-new mobile phone, the first thing you will probably do is check its reviews.

Say you have read 3 reviews about a particular phone:

- R1 : Awesome camera a must buy

- R2 : Good quality screen and camera

- R3 : Overheating issues

In reality, you will have hundreds or even thousands of reviews for a single phone, and it is difficult for a human to read them all and decide whether to buy it.

This is where Natural Language Processing comes to the rescue, with one prerequisite: to do any processing of natural language, computers need numbers.


What is Bag of Words?

The process of converting text into a vector of numbers is called vectorisation.

Our very first vectorisation algorithm is Bag of Words (BoW). The idea is to count how many times each word appears in a sentence, so that the sentence can be converted into a vector of counts.

Steps for building a Bag of Words:

1. Find all the unique words present in your set of sentences.

a. In natural language lingo, a set of sentences is called a corpus.

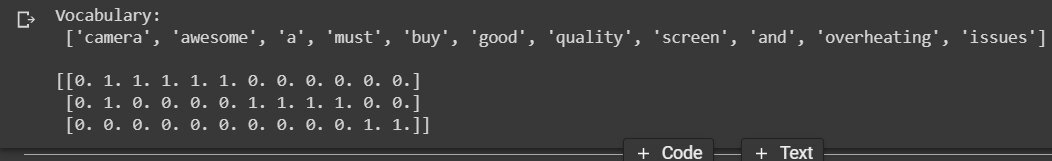

b. In our case, we have 3 reviews with a total of 11 unique words:

| Awesome | camera | a | must | buy | Good | quality | screen | and | Overheating | issues |
|---|---|---|---|---|---|---|---|---|---|---|

2. Count how many times each word appears in each review.

| Review | Awesome | camera | a | must | buy | Good | quality | screen | and | Overheating | issues |
|---|---|---|---|---|---|---|---|---|---|---|---|
| R1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| R2 | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 0 | 0 |
| R3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 |

The reviews have now been converted into a form that a computer can process, for example to help decide whether you should buy this mobile phone or not.

Each review now looks like this:

R1 = [ 1,1,1,1,1,0,0,0,0,0,0 ]

R2 = [ 0,1,0,0,0,1,1,1,1,0,0 ]

R3 = [ 0,0,0,0,0,0,0,0,0,1,1 ]

And that is the complete idea behind Bag of Words.
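The two steps above can be sketched in plain Python. This is a minimal illustration, not a library implementation: it assumes simple whitespace tokenization, keeps the original casing, and orders the vocabulary by first appearance so that the columns line up with the table.

```python
from collections import Counter

reviews = {
    "R1": "Awesome camera a must buy",
    "R2": "Good quality screen and camera",
    "R3": "Overheating issues",
}

# Step 1: collect the unique words, in order of first appearance
vocabulary = []
for text in reviews.values():
    for word in text.split():
        if word not in vocabulary:
            vocabulary.append(word)

# Step 2: count how often each vocabulary word occurs in a review
def bag_of_words(text, vocab):
    counts = Counter(text.split())
    return [counts[word] for word in vocab]  # missing words count as 0

vectors = {name: bag_of_words(text, vocabulary) for name, text in reviews.items()}

print(len(vocabulary), vocabulary)
for name, vector in vectors.items():
    print(name, vector)
```

The printed vectors match R1, R2 and R3 above. A word that appears twice in a review would simply get a count of 2.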

Implementation of Bag of Words (BoW)

Before starting with the implementation of BoW, let’s revise some definitions:

1. Corpus = Set of all sentences.

2. Vectorisation = Process of converting natural language sentences to a vector.

Now let’s jump to the implementation part. We’ll use the Keras Tokenizer for this purpose.

And you’ll get the following output:

This output is what we expected. Notice that the first column of every vector is zero: the Keras Tokenizer reserves index 0 and never assigns it to a word, so this detail is specific to Keras and we can ignore it.
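To see where that all-zero first column comes from, here is a minimal pure-Python sketch that imitates the Keras Tokenizer workflow on our three reviews. It is an approximation, not the Keras source. It lowercases and splits on whitespace, skips Keras’s punctuation filtering, and ranks words by frequency with ties kept in first-seen order. Like Keras, it numbers words from 1, so column 0 is never used.

```python
from collections import Counter

# Assumption: a plain-Python imitation of Keras's Tokenizer, not the Keras code.
docs = [
    "Awesome camera a must buy",
    "Good quality screen and camera",
    "Overheating issues",
]

# "fit": count every lowercased word across the corpus
word_counts = Counter()
for doc in docs:
    word_counts.update(doc.lower().split())

# Rank words by descending count (ties keep first-seen order) and number them
# from 1, so index 0 is never given to a word.
ranked = sorted(word_counts, key=lambda w: word_counts[w], reverse=True)
word_index = {word: i + 1 for i, word in enumerate(ranked)}

def texts_to_count_matrix(texts, index):
    """One row per text; column j holds the count of the word with index j."""
    rows = []
    for text in texts:
        row = [0] * (len(index) + 1)  # +1 for the unused column 0
        for word in text.lower().split():
            if word in index:
                row[index[word]] += 1
        rows.append(row)
    return rows

matrix = texts_to_count_matrix(docs, word_index)
for row in matrix:
    print(row)
```

With the real library, the corresponding calls are `Tokenizer.fit_on_texts(docs)` followed by `Tokenizer.texts_to_matrix(docs, mode="count")` from the legacy `tf.keras.preprocessing.text` module, which may not be available in newer Keras releases. Because "camera" is the most frequent word here, it gets index 1, so the column order differs from the hand-built table.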

Problem Statement: Spam Filtering

Now, to understand how Bag of Words works and how we can build a spam filter with it, we will first cover some basic concepts. After going through the complete problem statement, you will understand the following:

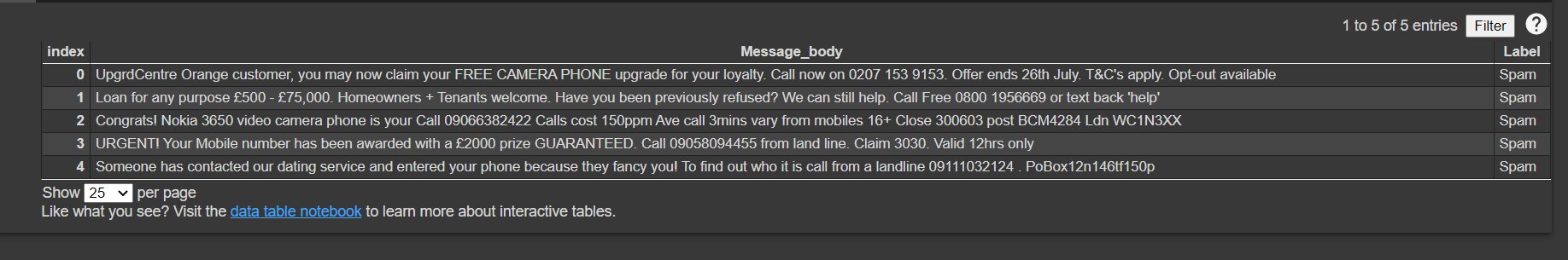

What our data looks like:

Our training dataset has 2 columns:

1. “Message_body” → the actual text on which we will train our Bag of Words model

2. “Label” → has the values “Spam” and “Non-Spam”

The test data has the same 2 columns:

1. “Message_body” → text on which we have to predict whether it is spam or not.

2. “Label” → the true labels, used only to measure the accuracy of our predictions.

What is Tokenization?

To understand what tokenization is, let’s take an example:

“James has a cat and a dog.”

This sentence is made up of words, and these words are called tokens. The process of splitting a text into small, useful tokens is called tokenization.

But you may ask: why is it even important?

Consider these 2 sentences:

- “James having cat dog.”

- “James is having a cat and a dog.”

Both sentences paint nearly the same picture in your mind: James has a cat and a dog. The individual tokens carry most of the meaning, and that is the power of tokenization. Tokens are the building blocks of any text, and if we feed good tokens into machine learning algorithms, we get good results.

Tokenization can be done at both the word level and the sentence level: we can split a paragraph into sentence tokens, or split a sentence into word tokens.

Example code:

```python
# importing libraries
import nltk
# importing whitespace tokenizer
from nltk.tokenize import WhitespaceTokenizer

# creating an object of the class
tk = WhitespaceTokenizer()
text = tk.tokenize("He is playing cricket")
print(text)
# output is ['He', 'is', 'playing', 'cricket']
```
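As a rough sketch of tokenizing at both levels, the plain-Python example below splits a paragraph into sentences with a simple regular expression and then splits each sentence on whitespace, as WhitespaceTokenizer does. The regex is a naive assumption: it will wrongly split after abbreviations such as “Dr.”, so treat it as an illustration rather than a real sentence tokenizer.

```python
import re

paragraph = "James has a cat and a dog. The cat is black! Does the dog bark?"

# Sentence level: split after ".", "!" or "?" when followed by whitespace
sentences = re.split(r"(?<=[.!?])\s+", paragraph)

# Word level: split each sentence on whitespace
words = [sentence.split() for sentence in sentences]

print(sentences)
print(words)
```

Notice that punctuation stays attached to tokens such as “dog.” and “black!”. This is one reason for the pre-processing steps that follow.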

What is Text Pre-processing?

Text pre-processing is the series of steps we take to clean our data before feeding it into a machine learning algorithm. There are many text pre-processing techniques, and for this problem we will apply the following ones to our text data:

1. Removal of Punctuation

2. Lowercasing the text

3. Removal of Stopwords

4. Stemming

1. Removal of Punctuation

Remove all the punctuation marks from a sentence. Python’s string module lists the punctuation marks:

```python
# library that contains punctuation
import string

print(string.punctuation)
# output → !"#$%&'()*+,-./:;<=>?@[\]^_`{|}~
```

2. Lowercasing the text

Lowercase all the text before giving it to the Bag of Words model. If we do not, the vocabulary will contain extra words that differ only in casing, which makes the input vectors to the BoW model unnecessarily long.

- If we do not lowercase the text:

■ Example → “I have a big Cat a small cat a dog and a small Dog”

■ Our tokens will be:

```python
# importing nlp library
import nltk
from nltk.tokenize import WhitespaceTokenizer

tk = WhitespaceTokenizer()
text = tk.tokenize("I have a big Cat a small cat a dog and a small Dog")
print(text)
# output is ['I', 'have', 'a', 'big', 'Cat', 'a', 'small', 'cat', 'a', 'dog', 'and', 'a', 'small', 'Dog']
```

Now we have the token pairs (Cat, cat) and (Dog, dog). Each pair represents the same word, but unless we lowercase the text, both versions end up as separate tokens in the vocabulary. That is why it is important to lowercase text before feeding it into the Bag of Words model.
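A quick way to see the effect is to compare the vocabulary size with and without lowercasing. This plain-Python sketch uses str.split as a stand-in for the whitespace tokenizer:

```python
text = "I have a big Cat a small cat a dog and a small Dog"

# unique tokens as written vs. after lowercasing
vocab_raw = set(text.split())
vocab_lower = set(text.lower().split())

print(len(vocab_raw), sorted(vocab_raw))
print(len(vocab_lower), sorted(vocab_lower))
```

Lowercasing shrinks this vocabulary from 10 to 8 words, because “Cat”/“cat” and “Dog”/“dog” each collapse into one token.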

3. Removal of Stopwords

Before removing stopwords, let’s understand what stopwords are.

Stopwords = Very frequent words (such as “the”, “is” and “and”) that do not add any specific context to a sentence.

So it is usually better to remove these stopwords from our sentences.

You do not have to rely only on the stopword list from a specific library. In some cases, we have to create our own list by looking at our corpus.

```python
import nltk

nltk.download('stopwords')
# stopwords present in the library
stopwords = nltk.corpus.stopwords.words('english')
print(stopwords)
# ['i', 'me', 'my', 'myself', 'we', 'our', 'ours', 'ourselves', 'you', "you're", "you've", "you'll", "you'd", 'your', ...]
```
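Here is a minimal sketch of filtering tokens against your own stopword set. The list below is a hypothetical, hand-made example for SMS-style text (it includes “u” and “ur”). In practice, you would build such a list by inspecting your own corpus or by extending a library list.

```python
# Hypothetical hand-made stopword set for SMS-style text
custom_stopwords = {"a", "an", "the", "is", "to", "and", "u", "ur"}

def remove_stopwords(tokens, stopwords):
    """Keep only the tokens that are not in the stopword set."""
    return [token for token in tokens if token not in stopwords]

tokens = "u have won a free ticket to the show".split()
filtered = remove_stopwords(tokens, custom_stopwords)
print(filtered)
```

Using a set instead of a list keeps each membership check fast, which matters when filtering thousands of messages.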

4. Stemming

Stemming is a text standardisation technique in which a word is reduced to its stem (base word). Example: “playing” → “play” and “kicking” → “kick”. The main aim of stemming is to reduce the vocabulary size before feeding the text into the BoW model.

```python
# importing nlp library
import nltk
from nltk.tokenize import WhitespaceTokenizer
# importing the Stemming function from nltk library
from nltk.stem.porter import PorterStemmer

tk = WhitespaceTokenizer()
# defining the object for stemming
porter_stemmer = PorterStemmer()

text = tk.tokenize("I am playing cricket")
text = [porter_stemmer.stem(word) for word in text]
print(text)
# stemmed output: ['I', 'am', 'play', 'cricket']
```
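To see how stemming shrinks the vocabulary, here is a deliberately naive suffix-stripping stemmer written for illustration only. It is not the Porter algorithm that PorterStemmer implements. The suffix list and the minimum stem length of 3 are arbitrary assumptions.

```python
# Naive suffix stripper for illustration; NOT the Porter algorithm.
SUFFIXES = ("ing", "ed", "s")

def naive_stem(word):
    for suffix in SUFFIXES:
        # only strip when at least 3 characters of stem remain
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

words = ["playing", "played", "plays", "play", "kicking", "kicked"]
stems = [naive_stem(w) for w in words]
print(stems)
print(len(set(words)), "->", len(set(stems)))
```

Six distinct words collapse into two stems. A real stemmer handles many more rules, but the effect on vocabulary size is the same idea.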

The disadvantage of stemming is that the stemmed word sometimes loses its meaning.

Example: “copying” → “copi”, and there is no word “copi” in English.

These are all the text pre-processing steps we’ll apply before feeding our data into the Bag of Words model.

How to Apply Bag of Words using Sklearn

Now that we have covered the basics of text pre-processing, let’s apply it, feed our data to the BoW model, and finally build a complete spam classifier for emails.

1. Reading our Training and Testing data and Setting All Our Imports

```python
import numpy as np
import pandas as pd

df = pd.read_csv('train-dataset.csv')
# shuffling all our data
df = df.sample(frac=1)
# reading only Message_body and label column
df = df[['Message_body', 'Label']]

# reading our test data
df_test = pd.read_csv('test-dataset.csv', encoding='cp1252')
df_test.head()
```

2. Text Preprocessing

a. Removal of Punctuation

```python
# library that contains punctuation
import string

# list of all punctuation marks we have
print(string.punctuation)

# defining the function to remove punctuation
def remove_punctuation(text):
    punctuationfree = "".join([i for i in text if i not in string.punctuation])
    return punctuationfree

# storing the punctuation-free text for both training and testing data
df['clean_msg'] = df['Message_body'].apply(lambda x: remove_punctuation(x))
df_test['clean_msg'] = df_test['Message_body'].apply(lambda x: remove_punctuation(x))
```
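A common alternative to the character-by-character join is str.translate with a table built by str.maketrans. It deletes every character in string.punctuation in one pass. The sketch below shows this approach. The name remove_punctuation_fast is just for illustration.

```python
import string

# Translation table: every punctuation character is mapped to None (deleted)
PUNCT_TABLE = str.maketrans("", "", string.punctuation)

def remove_punctuation_fast(text):
    """Delete all characters in string.punctuation from text."""
    return text.translate(PUNCT_TABLE)

print(remove_punctuation_fast("WINNER!! Claim your prize, now."))
```

It produces the same result as the join-based version, so it could be used as `df['Message_body'].apply(remove_punctuation_fast)` instead.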

b. Lower the Text

```python
# lowercasing the text in both training and testing data
df['clean_msg'] = df['clean_msg'].apply(lambda x: x.lower())
df_test['clean_msg'] = df_test['clean_msg'].apply(lambda x: x.lower())
```

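c. Tokenize and Remove Stopwords

```python
import nltk
# whitespace tokenizer from nltk
from nltk.tokenize import WhitespaceTokenizer

nltk.download('stopwords')
stop_words = set(nltk.corpus.stopwords.words('english'))
tk = WhitespaceTokenizer()

# defining a function for tokenization
def tokenization(text):
    return tk.tokenize(text)

# defining a function to drop stopwords from a list of tokens
def remove_stopwords(tokens):
    return [word for word in tokens if word not in stop_words]

# applying both steps to the training and testing data
df['tokenised_clean_msg'] = df['clean_msg'].apply(tokenization)
df_test['tokenised_clean_msg'] = df_test['clean_msg'].apply(tokenization)
df['cleaned_tokens'] = df['tokenised_clean_msg'].apply(remove_stopwords)
df_test['cleaned_tokens'] = df_test['tokenised_clean_msg'].apply(remove_stopwords)
```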

d. Stemming

```python
# importing the Stemming function from nltk library
from nltk.stem.porter import PorterStemmer

# defining the object for stemming
porter_stemmer = PorterStemmer()

# defining a function for stemming
def stemming(text):
    stem_text = [porter_stemmer.stem(word) for word in text]
    return stem_text

# applying the function for stemming
df['cleaned_tokens'] = df['cleaned_tokens'].apply(lambda x: stemming(x))
df_test['cleaned_tokens'] = df_test['cleaned_tokens'].apply(lambda x: stemming(x))
```

After stemming, each message is still a list of tokens, so we join the tokens back into a single string that we can feed to our Bag of Words model:

```python
def join_text(text):
    return " ".join(text)

# join text because till now we have tokens
df['final_txt'] = df['cleaned_tokens'].apply(lambda x: join_text(x))
df_test['final_txt'] = df_test['cleaned_tokens'].apply(lambda x: join_text(x))
```

Our target column, Label, is categorical in nature, so let’s convert it to numeric values.

```python
# extracting only useful columns
df = df[['final_txt', 'Label']].copy()
df_test = df_test[['final_txt', 'Label']].copy()

# as our target variable is categorical, it is important to convert it to a numeric variable
# Non-Spam --> 0
# Spam --> 1
final_dict = {'Non-Spam': 0, 'Spam': 1}
df['Label'] = df['Label'].map(final_dict)
df_test['Label'] = df_test['Label'].map(final_dict)
```

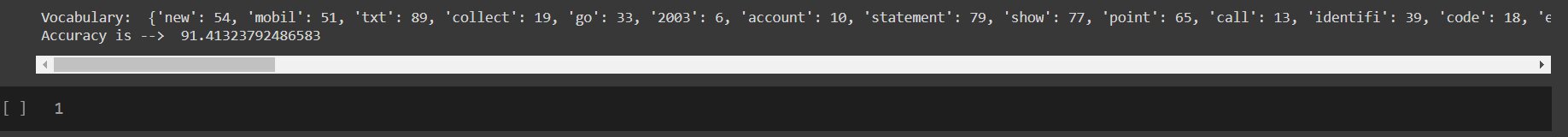

3. Applying Bag of Words model using Sklearn

```python
# importing our Bag of Words model from sklearn
from sklearn.feature_extraction.text import CountVectorizer
# Naive Bayes for classification { Spam or Not-Spam }
from sklearn.naive_bayes import GaussianNB
from sklearn.metrics import accuracy_score

train_documents_for_bow = df['final_txt'].tolist()
test_docs = df_test['final_txt'].tolist()

# create a BoW model object
vectorizer = CountVectorizer(max_features=100)

# fit the BoW model and encode the training documents in one step
X_train = vectorizer.fit_transform(train_documents_for_bow)

# printing the identified unique words along with their indices
print("Vocabulary: ", vectorizer.vocabulary_)

classifier = GaussianNB()
classifier.fit(X_train.toarray(), df['Label'])

# encode the test documents with the vocabulary learned from training
X_test = vectorizer.transform(test_docs)

# predict class
y_pred = classifier.predict(X_test.toarray())

# accuracy
accuracy = accuracy_score(df_test['Label'].tolist(), y_pred)
print("Accuracy is --> ", accuracy * 100)
```

Hyperparameters of Bag of Words

In our Bag of Words model, we used the hyperparameter max_features=100, which means the vectorizer keeps only the 100 most frequent words in the training corpus as its vocabulary.
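The effect of max_features can be sketched in plain Python. The sketch counts every word across the training documents and keeps only the k most frequent ones. This is a simplified imitation, not scikit-learn’s code. CountVectorizer also applies its own lowercasing and token pattern, and it may break ties between equally frequent words differently. Like the sketch, though, it assigns column indices in alphabetical order.

```python
from collections import Counter

def top_k_vocabulary(documents, k):
    """Keep the k words with the highest total count across all documents."""
    counts = Counter(word for doc in documents for word in doc.split())
    top_words = [word for word, _ in counts.most_common(k)]
    # index the kept words alphabetically
    return {word: i for i, word in enumerate(sorted(top_words))}

docs = ["free prize call now", "call me now", "free free entry"]
print(top_k_vocabulary(docs, 3))
```

Any word outside the kept vocabulary is simply ignored when a message is encoded. A smaller max_features therefore means shorter vectors, but it also discards rarer words.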

Output:

After fitting our Bag of Words model and computing its accuracy, we got nearly 90%, which is very impressive for such a simple model.

Pros and Cons of Bag of Words

Pros:

1. It is easy to implement and needs very little computational power compared to other Natural Language Processing algorithms.

Cons:

In our example above, the corpus has only 11 unique words, but we start facing problems when the corpus is huge and contains many unique words.

1. With a huge corpus, each vector becomes very long and consists mostly of 0s, resulting in a sparse matrix.

2. BoW does not retain any information about the order of words in a sentence.

a. Example:

i. Say we have two sentences in our corpus:

S1 = Cat killed a mouse.

S2 = Mouse killed a Cat.

Our corpus has 4 unique words (after lowercasing): [ “mouse”, “killed”, “a”, “cat” ]

And our BoW vectors will look like this:

S1 = [ 1, 1, 1, 1 ]

S2 = [ 1, 1, 1, 1 ]

Yet after reading the 2 sentences, we can tell that they mean completely different things. Because BoW ignores word order, that difference is lost after vectorisation.

This is one of the major drawbacks of the BoW algorithm.

3. The BoW model captures the occurrence of words in a sentence but fails to understand the semantics of a text.

a. Example:

i. “He lives near the river bank”

ii. “He has a bank account”

When we build a BoW model from these sentences, the word “bank” gets the same column, and the same count, in both, even though its meaning is completely different in each. This shows that BoW fails to capture the semantic meaning of a sentence.
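Both the word-order and word-sense problems are easy to reproduce. The plain-Python sketch below does three things:

- It lowercases each sentence, strips a trailing period, and counts words against a fixed vocabulary.
- It shows that the two mouse/cat sentences get identical vectors.
- It shows that “bank” maps to a single column in both bank sentences.

It writes “river bank” as two words so that “bank” is its own token.

```python
from collections import Counter

def bow_vector(sentence, vocab):
    """Count each vocabulary word in a lowercased, period-stripped sentence."""
    counts = Counter(sentence.lower().rstrip(".").split())
    return [counts[word] for word in vocab]

# Word order is lost: opposite meanings get identical vectors
vocab = ["mouse", "killed", "a", "cat"]
s1 = bow_vector("Cat killed a mouse.", vocab)
s2 = bow_vector("Mouse killed a Cat.", vocab)
print(s1, s2, s1 == s2)

# Word sense is lost: "bank" is one feature whatever it means
vocab2 = ["he", "lives", "near", "river", "bank", "has", "a", "account"]
v1 = bow_vector("He lives near the river bank", vocab2)
v2 = bow_vector("He has a bank account", vocab2)
bank = vocab2.index("bank")
print(v1[bank], v2[bank])
```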

Next Steps

In our next blog, we’ll discuss other algorithms that can tackle the drawbacks of BoW, and we’ll learn about TF-IDF.

Contributed By: Sahib Singh
