Difference Between Bagging and Boosting

Bagging and boosting are two different ensemble techniques that combine multiple models to reduce prediction error. Bagging combines multiple models trained on different subsets of the data, whereas boosting trains models sequentially, with each model focusing on the errors made by the previous ones. In this article, we will discuss the difference between bagging and boosting.

Bagging and Boosting are advanced ensemble methods in machine learning. The ensemble method is a machine learning technique that combines multiple base models/weak learners to create an optimal predictive model. Machine learning ensemble techniques combine insights from multiple weak learners to drive accurate and improved decision-making.
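To see why combining weak learners can help, consider a simple majority vote. The sketch below assumes that every classifier is correct with the same probability `p` and that the classifiers make their mistakes independently. That is an idealised assumption: real ensemble members are correlated, which shrinks the benefit. The function name `majority_vote_accuracy` is hypothetical.

```python
from math import comb


def majority_vote_accuracy(n_models, p):
    """Probability that a strict majority of n_models independent classifiers,
    each correct with probability p, votes for the right answer.
    Assumes n_models is odd so that there are no ties."""
    k_min = n_models // 2 + 1  # smallest number of correct votes that wins
    return sum(
        comb(n_models, k) * p**k * (1 - p) ** (n_models - k)
        for k in range(k_min, n_models + 1)
    )


print(round(majority_vote_accuracy(5, 0.7), 4))  # 0.8369
```

Under these assumptions, five classifiers that are each 70% accurate reach about 83.7% accuracy as a group. The same calculation shows the limitation: if each classifier is worse than chance (`p < 0.5`), voting makes the ensemble even worse.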


So let’s start the article.

Table of Contents

- Difference Between Bagging and Boosting: Bagging vs Boosting

- What is Bagging?

- What is Boosting?

- Key Difference Between Bagging and Boosting


Difference Between Bagging and Boosting: Bagging vs Boosting

| Parameter | Bagging | Boosting |
| --- | --- | --- |
| Basic Concept | Combines multiple models trained independently, in parallel, on different subsets of the data. | Trains models sequentially, with each model focusing on the errors made by the previous ones. |
| Objective | Reduces variance by averaging out the errors of individual models. | Primarily reduces bias (and can also reduce variance) by correcting the mistakes of the previous models. |
| Data Sampling | Uses bootstrap sampling (random sampling with replacement) to create subsets of the data. | Re-weights the data based on the errors of the previous model (as in AdaBoost), or fits each new model to the residual errors of the current ensemble (as in gradient boosting), so later models focus on the hard instances. |
| Model Weight | Each model carries equal weight in the final decision. | Models are weighted by accuracy, i.e., more accurate models get a higher weight. |
| Error Handling | All training instances are treated equally; the errors of individual models are averaged out. | Gives more weight to instances with higher error, so that subsequent models focus on them. |
| Overfitting | Less prone to overfitting, thanks to the averaging mechanism. | Can overfit, especially on noisy data or when the number of models (iterations) is too high. |
| Performance | Improves accuracy by reducing variance. | Often achieves higher accuracy by reducing both bias and variance. |
| Common Algorithms | Random Forest | AdaBoost, Gradient Boosting Machine (GBM), XGBoost |
| Use Cases | Best for high-variance, low-bias base models (e.g., deep decision trees). | Best for high-bias base models (e.g., shallow trees); adapts to errors and can address both bias and variance. |

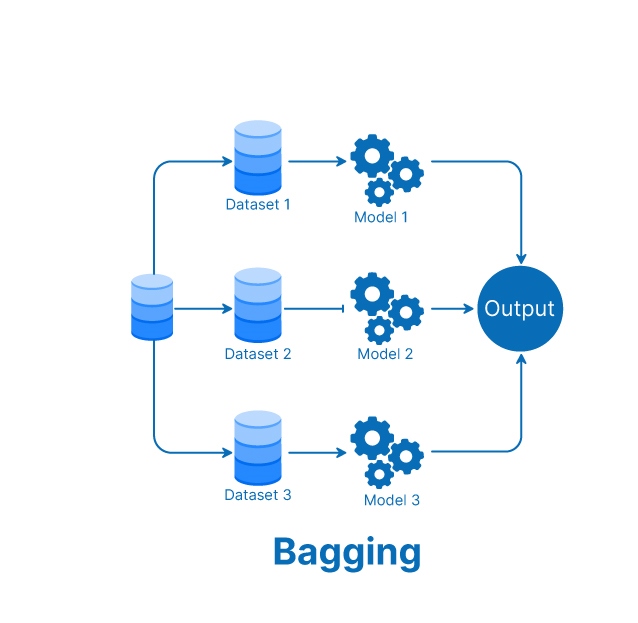

What is Bagging Technique?

Bagging, or Bootstrap Aggregating, is an ensemble learning method that reduces error by training homogeneous weak learners in parallel on different random samples of the training set. The results of these base learners are then combined through voting or averaging to produce an ensemble model that is more robust and accurate.

Bagging mainly aims to obtain an ensemble model with lower variance than the individual base models that compose it. Hence, bagging helps reduce overfitting.
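To see why averaging lowers variance, make a simplifying assumption that the bagging procedure itself does not guarantee. Suppose each of the $B$ base models' predictions $f_b(x)$ at a point $x$ has the same variance $\sigma^2$, and every pair of models has correlation $\rho$. Under that assumption, the variance of the bagged average is:

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} f_b(x)\right)
  = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
```

If the models were independent ($\rho = 0$), the variance would shrink to $\sigma^2 / B$, while the expected prediction, and hence the bias, stays the same as that of a single model. Bootstrap samples overlap, so real base models are positively correlated ($\rho > 0$) and the reduction is smaller. Random Forest's random feature selection is one way to lower $\rho$.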

Benefits of Bagging

- Reduces Overfitting

- Improves Accuracy

- Handles Unstable Models

Note: Random Forest is one of the most common bagging algorithms.

Steps of Bagging Technique

- Randomly select multiple bootstrap samples from the training data with replacement and train a separate model on each sample.

- For classification, combine predictions using majority voting. For regression, average the predictions.

- Assess the ensemble’s performance on test data and use the aggregated models for predictions on new data.

- If needed, retrain the ensemble with new data or integrate new models into the existing ensemble.
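The steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a reference implementation. The base learner is a hypothetical 1-nearest-neighbour classifier on one-dimensional inputs, chosen because it is a high-variance, low-bias model. The function names `train_1nn`, `bagging_fit`, `bagging_predict`, and `bagging_predict_regression` are made up for this example. In practice, you would use a library implementation such as scikit-learn's `BaggingClassifier` or `RandomForestClassifier`.

```python
import random
from collections import Counter


def train_1nn(xs, ys):
    """Hypothetical high-variance base learner: 1-nearest-neighbour on 1-D inputs."""
    pairs = list(zip(xs, ys))

    def predict(x):
        # Return the label of the closest training point in this model's sample.
        return min(pairs, key=lambda p: abs(p[0] - x))[1]

    return predict


def bagging_fit(xs, ys, n_models=25, seed=0):
    """Step 1: train one base model per bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample of size n
        models.append(train_1nn([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Step 2 (classification): combine the base models by majority voting."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]


def bagging_predict_regression(models, x):
    """Step 2 (regression): average the base models' numeric predictions."""
    return sum(model(x) for model in models) / len(models)


# Usage: a toy 1-D dataset where points below 5 are class 0 and the rest class 1.
xs = list(range(10))
ys = [0] * 5 + [1] * 5
models = bagging_fit(xs, ys)
print(bagging_predict(models, 0.0), bagging_predict(models, 9.0))
```

Each base model sees a different bootstrap sample, so each one makes slightly different mistakes. Majority voting (or averaging, for numeric targets) cancels out much of that variation.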


What is Boosting Technique?

Boosting is an ensemble learning method that trains homogeneous weak learners sequentially, such that each base model depends on the previously fitted base models. All these base learners are then combined adaptively to obtain an ensemble model.

In boosting, the ensemble model is a weighted sum of all its constituent base learners. The most common boosting algorithms, which differ in how the base models are built and aggregated, are:

- Adaptive Boosting (AdaBoost)

- Gradient Boosting

- XGBoost (an optimized implementation of gradient boosting)
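For binary labels $y \in \{-1, +1\}$, the weighted sum can be written as shown below. The last three lines give AdaBoost's standard choices for a learner's weighted error $\varepsilon_m$, its weight $\alpha_m$, and the sample-weight update. Here $Z_m$ is a normalising constant that makes the new weights sum to 1. Gradient boosting builds $F_M$ differently: it fits each $h_m$ to the negative gradient of the loss, which for squared error is simply the residuals of $F_{m-1}$.

```latex
\begin{aligned}
F_M(x) &= \sum_{m=1}^{M} \alpha_m\, h_m(x), \qquad \hat{y} = \operatorname{sign}\big(F_M(x)\big) \\
\varepsilon_m &= \sum_{i} w_i\, \mathbb{1}\big[h_m(x_i) \neq y_i\big] \\
\alpha_m &= \tfrac{1}{2}\ln\!\frac{1-\varepsilon_m}{\varepsilon_m} \\
w_i &\leftarrow \frac{w_i \exp\big(-\alpha_m\, y_i\, h_m(x_i)\big)}{Z_m}
\end{aligned}
```

A misclassified sample has $y_i h_m(x_i) = -1$, so its weight is multiplied by $e^{\alpha_m}$ and grows. A learner with a lower error $\varepsilon_m$ gets a larger $\alpha_m$ and therefore more say in $F_M(x)$.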

Benefits of Boosting Techniques

- High Accuracy

- Adaptive Learning

- Reduces Bias

- Flexibility

How Is a Boosting Model Trained to Make Predictions?

- All samples in the training set start with the same weight. These samples are used to train a homogeneous weak learner, or base model.

- The prediction error for each sample is calculated: the larger a sample's error, the more its weight increases. The sample therefore becomes more important when training the next base model.

- Each individual learner is weighted too: a learner that predicts well is assigned a higher weight, so models that make good predictions have a bigger say in the final decision.

- The re-weighted data is passed on to the next base model, and steps 2 and 3 are repeated until the data is fitted well enough (for example, until the error falls below a chosen threshold) or a maximum number of models is reached.

- When new data is fed into the boosting model, it is passed through all the individual base models, and each model makes its own prediction.

- Each prediction is scaled by the weight of the model that made it, and the scaled predictions are aggregated to produce the final prediction.
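The training and prediction steps above describe AdaBoost. The following minimal sketch relies on several assumptions:

- The weak learners are one-dimensional decision stumps.
- The labels are in {-1, +1}.
- Training runs for a fixed number of rounds instead of stopping at an error threshold.
- The helper names (`stump_predict`, `fit_stump`, `adaboost_fit`, `adaboost_predict`) are hypothetical.

In the toy dataset, the positive class sits in the middle of the line. No single stump can classify all nine points correctly, but three boosting rounds can.

```python
import math


def stump_predict(t, sign, x):
    """A decision stump: predicts `sign` for x <= t and -sign otherwise."""
    return sign if x <= t else -sign


def fit_stump(xs, ys, w):
    """Return (weighted_error, t, sign) of the stump with the lowest weighted error."""
    best = None
    candidates = sorted(set(xs))
    for t in [candidates[0] - 1] + candidates:  # first threshold gives a constant predictor
        for sign in (1, -1):
            err = sum(wi for x, y, wi in zip(xs, ys, w) if stump_predict(t, sign, x) != y)
            if best is None or err < best[0]:
                best = (err, t, sign)
    return best


def adaboost_fit(xs, ys, n_rounds=10):
    n = len(xs)
    w = [1.0 / n] * n  # step 1: every sample starts with the same weight
    ensemble = []
    for _ in range(n_rounds):
        err, t, sign = fit_stump(xs, ys, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0) / division by zero
        alpha = 0.5 * math.log((1 - err) / err)  # step 3: lower error -> larger weight
        ensemble.append((alpha, t, sign))
        # Step 2: misclassified samples (y * h(x) = -1) are scaled up by e^alpha,
        # correctly classified ones scaled down by e^-alpha; then renormalise.
        w = [wi * math.exp(-alpha * y * stump_predict(t, sign, x)) for x, y, wi in zip(xs, ys, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble


def adaboost_predict(ensemble, x):
    """Weighted vote: the sign of the alpha-weighted sum of the stumps' outputs."""
    score = sum(alpha * stump_predict(t, sign, x) for alpha, t, sign in ensemble)
    return 1 if score >= 0 else -1


# Usage: class +1 sits in the middle of the line, so no single stump separates it.
xs = [1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [-1, -1, -1, 1, 1, 1, -1, -1, -1]
model = adaboost_fit(xs, ys, n_rounds=3)
print([adaboost_predict(model, x) for x in xs])  # matches ys
```

Each round re-weights the data toward the points the current ensemble gets wrong. The next stump is chosen to fix those points, and its `alpha` sets how much it counts in the final vote.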


Key Difference Between Bagging and Boosting

- The bagging technique trains multiple models independently on different subsets of the data, whereas boosting trains models sequentially, with each model focusing on the errors made by the previous ones.

- Bagging works best with high-variance, low-bias base models, while boosting is effective when the model must adapt to its errors and can address both bias and variance errors.

- Bagging is less prone to overfitting, whereas boosting can overfit if the number of models (iterations) is too high or the data is noisy.

- Bagging improves accuracy mainly by reducing variance, whereas boosting improves accuracy by reducing bias as well as variance.

Conclusion

In this article, we have briefly discussed how the two ensemble methods bagging and boosting differ from each other.

Bagging reduces error by training homogeneous weak learners in parallel on different random samples of the training set. The results of these base learners are combined by voting or averaging to create a more robust and accurate ensemble model.

Boosting is an ensemble learning method in which homogeneous weak learners are trained sequentially, so that each base model depends on the previously fitted ones. These base learners are then combined adaptively to obtain an ensemble model.

We hope you liked the article.

Keep Learning!!

Keep Sharing!!

FAQs

What is Ensemble Method in Machine Learning?

The ensemble method is a machine learning technique that combines multiple base models/weak learners to create an optimal predictive model. Machine learning ensemble techniques combine insights from multiple weak learners to drive accurate and improved decision-making.

What is Bagging Technique in Ensemble Learning?

Bagging, or Bootstrap Aggregating, is an ensemble learning method that reduces error by training homogeneous weak learners in parallel on different random samples of the training set. The results of these base learners are then combined through voting or averaging to produce an ensemble model that is more robust and accurate.

What is Boosting Technique in Ensemble Learning?

Boosting is an ensemble learning method that trains homogeneous weak learners sequentially, such that each base model depends on the previously fitted base models. All these base learners are then combined adaptively to obtain an ensemble model.

What is the difference between bagging and boosting?

1. The bagging technique trains multiple models independently on different subsets of the data, whereas boosting trains models sequentially, with each model focusing on the errors made by the previous ones.

2. Bagging works best with high-variance, low-bias base models, while boosting is effective when the model must adapt to its errors and can address both bias and variance errors.

3. Bagging is less prone to overfitting, whereas boosting can overfit if the number of models (iterations) is too high or the data is noisy.

4. Bagging improves accuracy mainly by reducing variance, whereas boosting improves accuracy by reducing bias as well as variance.