Debunking Common Machine Learning Myths

There is a lot of talk about machine learning these days, and much of it spreads myths. In this article, we bust some machine learning myths that are far from reality.

The world is getting smarter every day. There are autonomous cars, smart homes, and, in Japan, robots that serve you at restaurants. If this isn’t fiction turning into reality, then what is? You might be curious about what makes these technologies so smart. Machine learning is the common thread running through all of these inventions. Let’s explore some of the common myths associated with it.

Content

- Artificial Intelligence and Machine Learning Are the Same

- Machine learning is “smarter” than humans

- Machine Learning will replace humans

- You can “just implement machine learning once and forget about it”

- You need more and more data to get results

- Machine learning is a very recent thing

- Conclusion

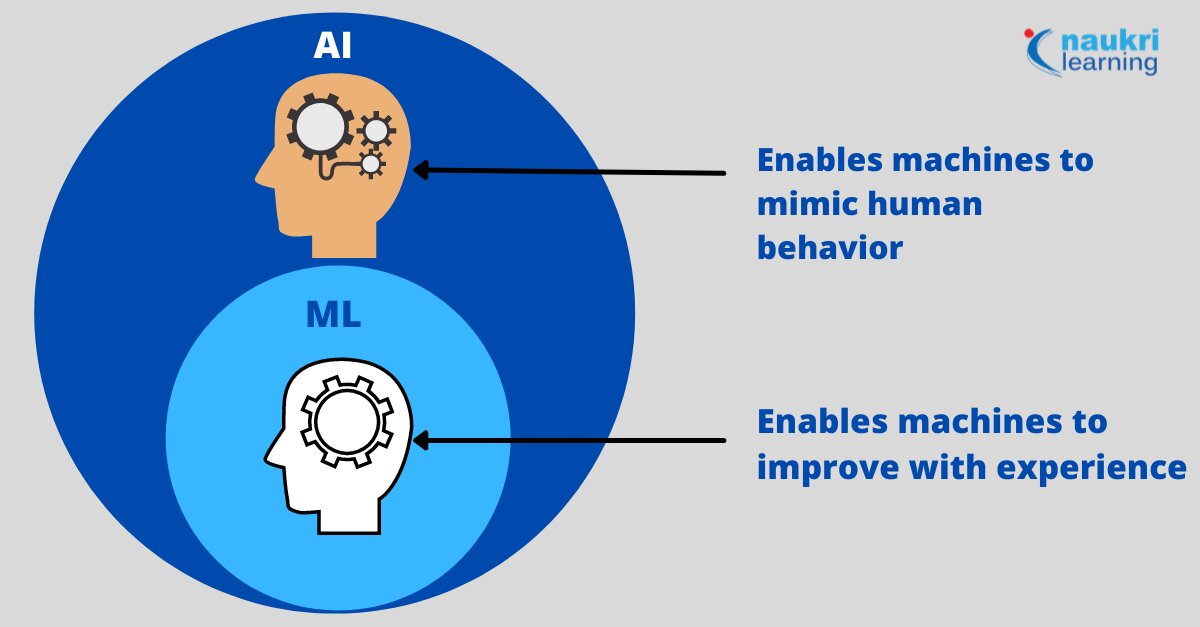

Myth #1: Artificial Intelligence and Machine Learning Are the Same

Artificial intelligence (AI) refers to the ability of machines to solve problems and learn. AI systems mimic human cognitive functions, which allows them to make decisions. Machine learning is a subfield of artificial intelligence, so the two terms are not interchangeable. It aims to develop methods that allow machines to learn from data: ML algorithms identify complex patterns in data sets, build models from them, and use those models to predict future behavior.
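
To make "learning from data" concrete, here is a minimal sketch in plain Python. It fits a straight line to a handful of examples and then predicts a value it has never seen. The data and the names `fit_line`, `hours_studied`, and `exam_score` are made up for illustration; real ML projects typically use a library and far more data.

```python
# Illustrative sketch: "learn" a straight line y = slope * x + intercept
# from examples using ordinary least squares. All data here is made up.

def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training examples: the pattern is never written down as a rule.
hours_studied = [1, 2, 3, 4]
exam_score = [2, 4, 6, 8]

slope, intercept = fit_line(hours_studied, exam_score)

# Use the learned model to predict an unseen case.
prediction = slope * 5 + intercept
print(slope, intercept, prediction)
```

Nobody told the program that the score is twice the hours; it recovered that pattern from the examples, which is the sense in which the machine "learns".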

Myth #2: Machine learning is “smarter” than humans

Not yet, although it cannot be ruled out in the future.

Machine learning is extremely powerful at finding patterns and correlations in the available data. Yet humans still need to step in to assess the results. Machine learning makes it possible to detect patterns and offer recommendations.

In complicated tasks like medical diagnosis, machine learning can give encouraging results. Human supervision is still required to validate the results and rule out inconsistencies. This brings us to the next very common myth.

Myth #3: Machine Learning will replace humans

This is a popular topic of discussion these days, but the concern that ML and AI-based systems or robots will replace humans is overstated. Robots are automating manual work in many settings, such as factories, production processes, and medicine. However, they are deployed as assistive technology, not as a replacement for employees. ML has simplified many repetitive processes and has also improved process management and productivity.

Myth #4: You can “just implement machine learning once and forget about it”

Let us take information security as an example. There is a huge difference between detecting security threats and detecting faces. Faces are faces today and will remain so tomorrow; apart from aging, nothing changes. New security issues, on the other hand, can come up any day. When it comes to applying ML in cybersecurity, you can’t just install and forget, because everything keeps changing. Criminals are people with specific motivations (money, espionage, terrorism, etc.), and their intelligence is not artificial. They can modify malware so that smart systems no longer catch it.

Once trained, a machine learning model usually stays fixed. In information security, however, you may have to retrain your ML models regularly, sometimes from scratch. A security solution that does not update its antivirus base will be useless in the long term. Criminals can be dangerously creative when necessary.
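
The effect of "install and forget" can be sketched with a toy detector in plain Python. This is only an illustration under made-up assumptions: each file is reduced to a single hypothetical "suspicion score", the detector is a simple threshold halfway between the average benign and average malicious score, and the names `fit_threshold`, `last_year`, and `this_year` are invented for the example.

```python
# Toy illustration of a model going stale when attackers change behaviour.
# Each sample is (suspicion_score, label) with label 1 = malicious, 0 = benign.
# Scores and data sets are made up for this sketch.

def mean(values):
    return sum(values) / len(values)

def fit_threshold(samples):
    """Learn a cutoff halfway between the benign and malicious average scores."""
    benign = [score for score, label in samples if label == 0]
    malicious = [score for score, label in samples if label == 1]
    return (mean(benign) + mean(malicious)) / 2

def accuracy(threshold, samples):
    """Fraction of samples where 'score >= threshold' matches the true label."""
    correct = sum(
        1 for score, label in samples if (score >= threshold) == (label == 1)
    )
    return correct / len(samples)

# Last year's malware was easy to tell apart from benign files.
last_year = [(1.5, 0), (2.0, 0), (2.5, 0), (7.5, 1), (8.0, 1), (8.5, 1)]
# This year the attackers adapted: malicious files now look much more benign.
this_year = [(1.5, 0), (2.0, 0), (2.5, 0), (3.5, 1), (4.0, 1), (4.5, 1)]

stale_threshold = fit_threshold(last_year)   # trained once, never updated
fresh_threshold = fit_threshold(this_year)   # retrained on recent data

stale_accuracy = accuracy(stale_threshold, this_year)
fresh_accuracy = accuracy(fresh_threshold, this_year)
print(stale_accuracy, fresh_accuracy)
```

In this sketch the stale detector misses every adapted sample, while the retrained one separates the two groups again. Real threats are far messier, but the reason for retraining is the same.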

Myth #5: You need more and more data to get results

While working on an ML project, you might be tempted to keep adding data points to the training data. Yet dumping in more and more data is not always a good idea.

If the extra data is noisy, mislabeled, or unrepresentative, the model may memorize those quirks instead of learning the underlying pattern, which is the problem of overfitting. This leads to high error rates on unseen data. Keep the ‘garbage in, garbage out’ principle in mind: what you need is not just more data but high-quality data that helps you build better ML models.
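
The garbage-in, garbage-out point can be illustrated with a small sketch in plain Python. It uses a 1-nearest-neighbour classifier, a model that literally memorizes its training data, and compares a small clean training set with a larger one that includes mislabeled points. The numbers, the rule in `true_label`, and all names are assumptions made up for this example.

```python
# Toy illustration: more data is not better when the extra data is garbage.
# A 1-nearest-neighbour model memorizes every training point, so mislabeled
# points directly corrupt its predictions. All data here is made up.

def true_label(x):
    """Hypothetical ground truth: class 1 when x is at least 5, else class 0."""
    return 1 if x >= 5 else 0

def predict_1nn(train, x):
    """Return the label of the training point closest to x."""
    nearest = min(train, key=lambda point: abs(point[0] - x))
    return nearest[1]

def accuracy(train, test):
    hits = sum(1 for x, label in test if predict_1nn(train, x) == label)
    return hits / len(test)

# Small but correctly labeled training set.
clean_train = [(x, true_label(x)) for x in [1, 2, 3, 4, 6, 7, 8, 9]]
# Extra points that were labeled incorrectly (labels flipped).
mislabeled_extra = [(x, 1 - true_label(x)) for x in [2.5, 3.5, 6.5, 7.5]]
# Unseen test points with correct labels.
test = [(x, true_label(x))
        for x in [0.5, 1.5, 2.4, 3.6, 4.5, 5.5, 6.4, 7.6, 8.5, 9.5]]

clean_accuracy = accuracy(clean_train, test)
noisy_accuracy = accuracy(clean_train + mislabeled_extra, test)
print(clean_accuracy, noisy_accuracy)
```

The larger training set scores worse on the unseen points because the model faithfully memorized the bad labels. Adding correctly labeled, representative data would not have this effect.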

Myth #6: Machine learning is a very recent thing

This is a myth, though an understandable one, since ML was not widely known even in the 1990s.

The earliest ML applications date back to 1952, when Arthur Samuel wrote a program that could learn to play checkers. For a long time, however, this field of computer science was confined to specialist circles.

The main reason was the difficulty of obtaining results in practice. Machine learning requires considerable computing power to achieve useful results, and that computing power has only become widely available over the past two decades.

We would also like to mention the availability of usable data, which was very low in the late 20th century. The current explosion in usable data has made machine learning a hot technology.

Conclusion

We hope this article helped you debunk some of the machine learning myths. Machine learning is already in wide use, even though much of its potential is still untapped. Many companies are already using machine learning solutions, and further remarkable growth in machine learning and deep learning is very likely.

Rashmi is a postgraduate in Biotechnology with a flair for research-oriented work and has an experience of over 13 years in content creation and social media handling. She has a diversified writing portfolio and aim...