R-Squared vs. Adjusted R-Squared

Adjusted r-squared is similar to r-squared: it also measures how much of the variation in the target variable a model explains. Unlike r-squared, however, it accounts for the number of independent variables and penalizes you for adding features that are not significant for predicting the dependent variable. In this article, we briefly discuss the difference between r-squared and adjusted r-squared with the help of examples.

When we implement a linear regression algorithm to create a model, we use r-squared to measure how well the model fits the data. The previous article briefly discusses how to calculate R Squared in Linear Regression. However, r-squared has its limitations.

In multiple linear regression, if you keep adding variables, the value of r-squared will either remain the same or increase, irrespective of whether the new variables are significant.
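A small simulation illustrates this. The sketch below rests on assumptions of our own (synthetic data from NumPy's random generator, not a dataset from this article, and a helper named `ols_r_squared` that is purely illustrative): `y` depends only on `x`, and five columns of pure noise are then added one at a time. Because each larger model contains the previous one, least squares can never fit the data worse, so r-squared never goes down even though the added columns carry no information about `y`.

```python
import numpy as np

def ols_r_squared(X, y):
    """Fit OLS with an intercept via least squares and return r-squared."""
    A = np.column_stack([np.ones(len(y)), X])     # prepend an intercept column
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)  # least-squares coefficients
    ssr = np.sum((y - A @ coef) ** 2)             # sum of squared residuals
    sst = np.sum((y - y.mean()) ** 2)             # total sum of squares
    return 1 - ssr / sst

rng = np.random.default_rng(0)
n = 30
x = rng.normal(size=n)
y = 2.0 * x + rng.normal(size=n)   # y depends only on x
noise = rng.normal(size=(n, 5))    # 5 columns of pure noise, unrelated to y

X = x.reshape(-1, 1)
scores = [ols_r_squared(X, y)]
for j in range(noise.shape[1]):
    X = np.column_stack([X, noise[:, j]])  # add one irrelevant feature
    scores.append(ols_r_squared(X, y))

for k, r2 in enumerate(scores, start=1):
    print(f"k = {k}: r-squared = {r2:.4f}")
```

Running it with any seed should show the same pattern: the printed values never decrease as irrelevant columns are added.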

So, how do we deal with this?

Here, adjusted r-squared comes into the picture. Adjusted r-squared corrects r-squared for the number of independent variables in the model: it increases only when a new variable improves the model more than would be expected by chance, and it penalizes you for adding variables that do not improve the existing model.

So, let’s dive deep to learn more about adjusted r-squared and how it differs from r-squared.


What is adjusted r-squared?

Adjusted r-squared is similar to r-squared and measures the proportion of variation in the target variable (or dependent variable) that the model explains. Unlike r-squared, however, it adjusts for the number of independent variables (features): adding a feature raises adjusted r-squared only if the feature improves the fit more than would be expected by chance, so you are penalized for adding features that are not significant for predicting the dependent variable.

It helps you determine whether adding or removing a feature improves the existing model.


Adjusted r-squared Formula

The formula for adjusted r-squared uses r-squared, so let's first look at the formula for r-squared:

$$R^2 = 1 - \frac{SSR}{SST}$$

where:

SSR is the sum of squared residuals (i.e., the sum of squared errors)

SST is the total sum of squares (i.e., the sum of squared deviations from the mean)
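As a quick illustration, the formula translates directly into Python. The function name `r_squared` and the small sample of observed and predicted values are hypothetical, chosen only to show the arithmetic.

```python
def r_squared(y, y_pred):
    """Return 1 - SSR/SST for observed values y and model predictions y_pred."""
    mean_y = sum(y) / len(y)
    # SSR: sum of squared residuals (observed minus predicted)
    ssr = sum((yi - pi) ** 2 for yi, pi in zip(y, y_pred))
    # SST: sum of squared deviations of the observed values from their mean
    sst = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ssr / sst

# Hypothetical observations and predictions
y = [3, 5, 7, 9]
y_pred = [2.8, 5.3, 6.9, 9.0]
print(round(r_squared(y, y_pred), 3))  # 0.993
```

Here SST = 20 and SSR = 0.14, so r-squared = 1 − 0.007 = 0.993.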

Now, the formula for adjusted r-squared is:

$$R^2_{adj} = 1 - \frac{(1 - R^2)(n - 1)}{n - k - 1}$$

where

n: number of data points in the dataset

k: number of independent variables

R²: r-squared value of the model
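The formula can be written as a small helper. This is a minimal sketch: the function name `adjusted_r_squared` is our own, and it assumes `k` counts the independent variables only (not the intercept), matching the definitions above.

```python
def adjusted_r_squared(r2, n, k):
    """Adjusted r-squared: 1 - (1 - R^2)(n - 1) / (n - k - 1).

    r2: r-squared of the model
    n:  number of data points
    k:  number of independent variables (intercept not counted)
    """
    if n - k - 1 <= 0:
        # The denominator must be positive, i.e., more data points than parameters
        raise ValueError("adjusted r-squared requires n > k + 1")
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)

# Hypothetical model: r-squared = 0.80 from 4 independent variables and 50 data points
print(round(adjusted_r_squared(0.80, n=50, k=4), 4))  # 0.7822
```

With k = 0 the correction factor is 1, so adjusted r-squared equals r-squared; every added variable shrinks the denominator and increases the penalty.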

Important Results from the Formula of Adjusted r-squared

- Adjusted r-squared decreases when adding an independent variable does not increase r-squared enough to offset the penalty for the extra variable.

- When both the number of variables and r-squared increase, adjusted r-squared can either increase or decrease, depending on whether the gain in r-squared outweighs that penalty.
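To see when an extra variable pays off, the sketch below uses n = 9, k = 3 and r-squared = 0.73767 (the values from Example 1) together with two hypothetical r-squared values for a model with a fourth variable. `break_even_r_squared` is a hypothetical helper of our own: it rearranges the formula to give the smallest r-squared the larger model needs for adjusted r-squared not to fall.

```python
def adjusted_r_squared(r2, n, k):
    """1 - (1 - R^2)(n - 1) / (n - k - 1)"""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)

def break_even_r_squared(r2_old, n, k):
    """Hypothetical helper: the r-squared a model with k + 1 variables must reach
    for its adjusted r-squared to equal that of the current k-variable model."""
    return 1 - (1 - r2_old) * (n - k - 2) / (n - k - 1)

n, k, r2_old = 9, 3, 0.73767
threshold = break_even_r_squared(r2_old, n, k)
print(f"A 4th variable must lift r-squared to at least {threshold:.4f}")

# Two hypothetical outcomes of adding a 4th variable: r-squared rises in both
for r2_new in (0.76, 0.80):
    change = adjusted_r_squared(r2_new, n, k + 1) - adjusted_r_squared(r2_old, n, k)
    print(f"r-squared {r2_old} -> {r2_new}: adjusted r-squared changes by {change:+.4f}")
```

With these numbers the threshold is about 0.790: raising r-squared to 0.76 lowers adjusted r-squared (by about 0.06), while raising it to 0.80 increases it (by about 0.02).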

Note

- Adjusted r-squared is recommended when using multiple linear regression, especially when comparing models with different numbers of independent variables.

- Adding a non-significant variable to the model increases the difference between r-squared and adjusted r-squared.

- For a linear regression model with an intercept, r-squared can't be less than zero, but adjusted r-squared can be negative.
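Both notes follow from a little algebra on the adjusted r-squared formula (a derivation added here, using only the symbols n, k and R² defined above). Subtracting adjusted r-squared from r-squared gives the size of the gap, and requiring adjusted r-squared to be below zero gives the condition for a negative value:

$$R^2 - R^2_{adj} = (1 - R^2)\left(\frac{n - 1}{n - k - 1} - 1\right) = (1 - R^2)\,\frac{k}{n - k - 1}$$

$$R^2_{adj} < 0 \iff (1 - R^2)(n - 1) > n - k - 1 \iff R^2 < \frac{k}{n - 1}$$

The gap grows with k whenever an added variable leaves R² almost unchanged. For example, with n = 9 and k = 3, adjusted r-squared is negative whenever r-squared is below 3/8 = 0.375.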

Calculate r-squared and adjusted r-squared

Example 1: Calculate r-squared and adjusted r-squared manually.

Let's take the same dataset we used to calculate r-squared in the previous article.

| xi | yi |
| --- | --- |
| 11 | 90 |
| 10 | 45 |
| 2 | 19 |
| 8 | 35 |
| 4 | 25 |
| 20 | 80 |
| 1 | 2 |
| 9 | 3 |
| 5 | 33 |

For this dataset, SSR = 1681.24 and SST = 6408.89.

Now, calculating r-squared:

$$R^2 = 1 - \frac{SSR}{SST} = 1 - \frac{1681.24}{6408.89} = 1 - 0.26233 = 0.73767$$

Hence, R² = 0.73767.

Now, suppose the model has three independent variables, i.e., k = 3, and n = 9.

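So, the adjusted r-squared will be:

$$R^2_{adj} = 1 - \frac{(1 - 0.73767)(9 - 1)}{9 - 3 - 1} = 1 - \frac{0.26233 \times 8}{5} = 1 - 0.41973 = 0.58027$$

Hence, the adjusted r-squared for the given dataset is about 0.580, which is considerably lower than the corresponding r-squared value.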
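The arithmetic of Example 1 can be checked in a few lines of Python. This sketch simply plugs in the SSR, SST, n and k values stated in the example; nothing else is assumed.

```python
# Values from Example 1
ssr, sst = 1681.24, 6408.89
n, k = 9, 3  # 9 data points; 3 independent variables, as assumed in the example

r2 = 1 - ssr / sst
adj_r2 = 1 - (1 - r2) * (n - 1) / (n - k - 1)

print(f"r-squared          = {r2:.5f}")      # 0.73767
print(f"adjusted r-squared = {adj_r2:.5f}")  # 0.58027
```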

Now that we know how to calculate adjusted r-squared mathematically, the next example takes a dataset (the mtcars car model dataset) and compares r-squared and adjusted r-squared for different numbers of independent variables.

Example 2: Compare r-squared and adjusted r-squared for different numbers of independent variables.

1. Import the Dataset

# Import libraries
import pandas as pd
import numpy as np

# Import the dataset (assumes mtcars.csv is in the working directory)
mt = pd.read_csv('mtcars.csv')
print(mt.head())

2. Calculate values for one independent variable

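# Import statsmodels.api to calculate r-squared and adjusted r-squared
import statsmodels.api as sm

# Take only one feature: mpg (column 1); the target is hp.
# sm.add_constant adds an intercept column; without it, statsmodels fits a
# model through the origin and reports an uncentered r-squared.
x_opt = sm.add_constant(mt.iloc[:, 1:2])
y = mt.hp

regressor_OLS = sm.OLS(endog=y, exog=x_opt).fit()
print(regressor_OLS.summary())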

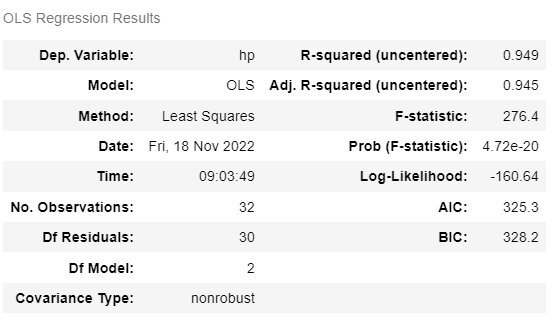

3. Calculate values for two independent variables

# Import statsmodels.api to calculate r-squared and adjusted r-squared
import statsmodels.api as sm

# Take two features: mpg and cyl (columns 1 and 2), plus an intercept
x_opt = sm.add_constant(mt.iloc[:, 1:3])
y = mt.hp

regressor_OLS = sm.OLS(endog=y, exog=x_opt).fit()
print(regressor_OLS.summary())

4. Calculate values for three independent variables

# Import statsmodels.api to calculate r-squared and adjusted r-squared
import statsmodels.api as sm

# Take three features: mpg, cyl and disp (columns 1 to 3), plus an intercept
x_opt = sm.add_constant(mt.iloc[:, 1:4])
y = mt.hp

regressor_OLS = sm.OLS(endog=y, exog=x_opt).fit()
print(regressor_OLS.summary())

Now, let's compare the r-squared and adjusted r-squared values of all three models.

Difference between r-squared and adjusted r-squared

| | r-squared | adjusted r-squared |
| --- | --- | --- |
| Definition | It measures the proportion of the variation in your dependent variable explained by all of the independent variables in the model. | It measures the proportion of variation explained, adjusted for the number of independent variables, so adding variables that do not really help explain the dependent variable lowers its value. |
| When to use | Simple linear regression. | Both simple and multiple linear regression. |
| Value | It never decreases when independent variables are added. Its value ranges from 0 to 1. | It may increase or decrease depending on the significance of the added independent variable. It can be negative when r-squared is very close to zero. |
| Formula | $R^2 = 1 - \frac{SSR}{SST}$ | $R^2_{adj} = 1 - \frac{(1 - R^2)(n - 1)}{n - k - 1}$ |

Conclusion

In this article, we have briefly discussed what adjusted r-squared is, how to calculate it, and how it differs from r-squared.

We hope this article clears up your doubts about r-squared and adjusted r-squared and when to use each of them.



FAQs

What is Adjusted r-squared?

Adjusted r-squared is similar to r-squared and measures the proportion of variation in the target variable (or dependent variable) that the model explains. Unlike r-squared, it adjusts for the number of independent variables (features) and penalizes you for adding features that are not significant for predicting the dependent variable.

What are some of the important results from the formula of adjusted r-squared?

Adjusted r-squared decreases when adding independent variables does not increase r-squared significantly. When both the number of variables and r-squared increase, adjusted r-squared can either increase or decrease.

What is r-squared?

It measures the proportion of the variation in your dependent variable explained by all of your independent variables in the model.

When to use r-squared and when to use adjusted r-squared?

r-squared is typically used for simple linear regression, whereas adjusted r-squared is used for both simple and multiple linear regression.

What is the range of values of both r-squared and adjusted r-squared?

r-squared never decreases when independent variables are added, and its value ranges from 0 to 1. Adjusted r-squared may increase or decrease depending on the significance of the added independent variable, and it can be negative when r-squared is very close to zero.