Generative Adversarial Networks: With Real-life Analogy

A well-known machine learning paradigm for generative AI is the generative adversarial network (GAN). This is a beginner’s guide to Generative Adversarial Networks, covering their architecture, applications, and types.

Generative models are a fascinating branch of machine learning that seeks to capture and learn the underlying probability distribution of a given dataset. These models can generate new samples that resemble the training data and have found immense utility in various applications. One of the most prominent and powerful generative models is the Generative Adversarial Network (GAN), which combines the principles of game theory and deep learning to achieve remarkable results.

What Are Generative Adversarial Networks?

GANs are a class of generative models in which one network generates data and a second network classifies that data as real or generated.

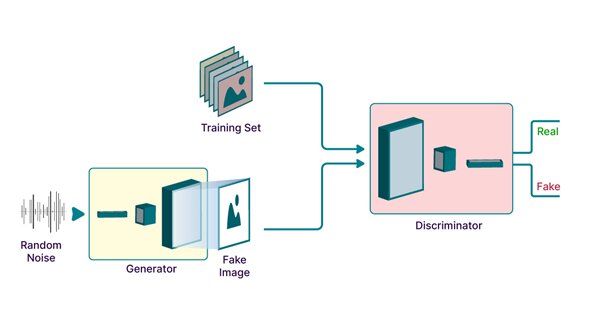

Generative Adversarial Networks consist of two main components: a generator and a discriminator. The generator takes random noise as input and produces synthetic data samples, while the discriminator tries to distinguish between real and generated data. The generator and the discriminator are trained simultaneously in a competitive setting, where the generator aims to fool the discriminator, and the discriminator tries to classify real and generated samples correctly. This adversarial training process drives both models to improve over time, leading to increasingly realistic samples.
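The competition described above is usually formalized, following the original formulation by Goodfellow et al. (2014), as a two-player minimax game over a value function $V(D, G)$. In the notation below, which is introduced here for illustration, $D(x)$ is the discriminator’s estimated probability that $x$ is real, $G(z)$ is the generator’s output for a noise vector $z$, $p_{\text{data}}$ is the real data distribution, and $p_z$ is the noise distribution:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator maximizes $V$ by pushing $D(x)$ toward 1 on real data and $D(G(z))$ toward 0 on generated data. The generator minimizes $V$ by pushing $D(G(z))$ toward 1.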

Generative Adversarial Networks: A Real-Life Analogy

The counterfeit currency producer (generator) aims to create fake currency that resembles genuine banknotes, while the counterfeit detection system (discriminator) works to identify and differentiate counterfeit notes from authentic ones. As the producer becomes more skilled at creating counterfeit banknotes that resemble genuine ones, the detection system adapts and enhances its capabilities to spot the emerging patterns and techniques used in counterfeit production.

Similarly, in a GAN, the generator creates synthetic samples to deceive the discriminator, which learns from the features of both real and generated data to enhance its ability to differentiate between genuine and generated samples. The iterative training process in a GAN helps the generator produce more realistic outputs, while the discriminator becomes more proficient at identifying the distinguishing features of real data.

Why Generative Adversarial Networks?

- GANs can generate high-quality, complex samples that capture intricate patterns and dependencies in the data.

- GANs do not rely on explicitly modelling the underlying probability distribution, making them more flexible and adaptable to different data types.

- GANs can learn from unlabeled data, a significant advantage in scenarios where labelled data is scarce or expensive.

Architecture of Generative Adversarial Networks

Generator

The generator network in a Generative Adversarial Network (GAN) is responsible for transforming random noise into synthetic data samples that resemble the real data. Its main objective is to generate high-quality outputs that can deceive the discriminator network. The generator never sees the training data directly. Instead, it learns which patterns and features make a sample look real from the feedback (gradients) it receives from the discriminator, and it uses them to produce realistic samples.

- Purpose:

- Transform random noise into synthetic data samples.

- Generate realistic outputs resembling the real data.

- Architecture:

- First, sample a random noise vector from the latent space (for example, from a standard normal distribution). The generator’s job is to learn a mapping from this latent space to the output space.

- Next, create a neural network that goes from the input (the latent vector) to the output (an image in most cases).

- Train the generator in adversarial mode by connecting the generator and the discriminator in one model, so that the discriminator’s judgement on generated samples can be backpropagated into the generator’s weights.

- After training, the generator can be used on its own for inference: feed it new noise vectors to produce new samples.

- Training Process:

- The generated samples are passed to the discriminator along with real data.

- The generator aims to produce samples that the discriminator fails to classify accurately.

- The generator’s performance is evaluated based on the discriminator’s feedback.

- The feedback from the discriminator is used in backpropagation to update the generator’s weights.

- The generator learns to improve its output quality over time by adjusting its parameters.
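To make this training process concrete, here is a minimal sketch in plain Python. It assumes a toy one-dimensional generator `G(z) = a*z + b` and a frozen logistic discriminator `D(x) = sigmoid(w*x + c)`. The function names `generator_step` and `logit_gradients` are hypothetical. The sketch uses the widely used non-saturating generator loss `-log D(G(z))` in place of `log(1 - D(G(z)))`. `logit_gradients` shows the reason for that choice: when the discriminator confidently rejects a sample, the gradient of the second loss almost vanishes.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def logit_gradients(t):
    """Derivatives w.r.t. the discriminator logit t of two generator losses.

    saturating:      log(1 - D)  has derivative -D
    non-saturating: -log(D)      has derivative -(1 - D)
    """
    d = sigmoid(t)
    return -d, -(1.0 - d)


def generator_step(a, b, w, c, batch_size=64, lr=0.05, rng=random):
    """One gradient step for a toy generator G(z) = a*z + b.

    The discriminator D(x) = sigmoid(w*x + c) is frozen: only a and b change.
    """
    grad_a = grad_b = 0.0
    for _ in range(batch_size):
        z = rng.gauss(0.0, 1.0)                  # latent noise
        x_fake = a * z + b                       # generated sample
        _, g = logit_gradients(w * x_fake + c)   # non-saturating loss gradient
        # chain rule through logit = w * (a*z + b) + c
        grad_a += g * w * z
        grad_b += g * w
    return a - lr * grad_a / batch_size, b - lr * grad_b / batch_size


# A confidently rejected sample (logit -10): the saturating gradient is tiny
saturating, non_saturating = logit_gradients(-10.0)
new_a, new_b = generator_step(1.0, 0.0, w=1.0, c=0.0, rng=random.Random(0))
```

With `w > 0` the frozen discriminator rates larger values as more real, so the step moves `b` upward, toward what the discriminator currently considers real.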

Discriminator

The discriminator network in a GAN plays the role of a classifier that distinguishes between real data samples and the synthetic samples generated by the generator. It aims to correctly identify the source of the input data, whether it is from the real data distribution or the generator.

- Purpose:

- Classify input samples as real or fake (generated by the generator).

- Provide feedback to the generator network to improve its output quality.

- Architecture:

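- First, create a convolutional neural network that classifies its input as real or fake (binary classification). Convolutional layers suit image data.

- Build a dataset that combines real samples with fake samples produced by the generator.

- Train the discriminator model on the real and fake data.

- Balance discriminator training against generator training. If the discriminator becomes too strong too quickly, it rejects every generated sample with high confidence. The generator’s gradients then vanish and training stalls.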

- Training Process:

- The discriminator is trained on a combination of real and generated samples.

- It learns to accurately classify the samples by capturing the distinguishing features.

- The generator aims to deceive the discriminator by producing samples that are classified as real.

- The discriminator’s parameters are updated based on the errors it makes in classification.

- The iterative training process improves the discriminator’s ability to differentiate between real and generated samples.
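The discriminator’s training process can be sketched the same way. The following is a minimal, hypothetical example in plain Python. It assumes a one-dimensional logistic discriminator `D(x) = sigmoid(w*x + c)` in place of a CNN. `discriminator_step` applies one gradient step of binary cross-entropy, labelling real samples 1 and generated samples 0. The two short lists of numbers stand in for a real batch and a generated batch.

```python
import math


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def softplus(u):
    """log(1 + exp(u)) computed without overflow."""
    return max(u, 0.0) + math.log1p(math.exp(-abs(u)))


def discriminator_step(w, c, real, fake, lr=0.1):
    """One binary cross-entropy step for a toy discriminator D(x) = sigmoid(w*x + c).

    Real samples are labelled 1, generated samples 0.
    Returns the updated (w, c) and the loss measured before the update.
    """
    labelled = [(x, 1.0) for x in real] + [(x, 0.0) for x in fake]
    grad_w = grad_c = loss = 0.0
    for x, y in labelled:
        t = w * x + c                                        # logit
        loss += y * softplus(-t) + (1.0 - y) * softplus(t)   # -log D or -log(1 - D)
        err = sigmoid(t) - y                                 # d(loss)/d(logit)
        grad_w += err * x
        grad_c += err
    n = len(labelled)
    return w - lr * grad_w / n, c - lr * grad_c / n, loss / n


# Stand-in batches: the "real" values sit to the right of the "generated" ones
real_batch = [2.0, 2.5, 3.0]
fake_batch = [-1.0, -0.5, 0.0]
w, c = 0.0, 0.0
for _ in range(200):
    w, c, loss = discriminator_step(w, c, real_batch, fake_batch)
```

After these updates the discriminator rates values near the real batch as real (above 0.5) and values near the generated batch as fake. In a real GAN the generated batch would change after every generator step, so the discriminator would be chasing a moving target.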

This raises a natural question: how does the generator produce fake samples?

The generator produces fake samples by transforming random noise, or latent vectors, into synthetic data that resembles the real data. A latent vector is passed through the generator’s layers. Each layer applies a learned transformation, so the input is progressively refined into more complex representations until it has the shape of a real sample.

The space from which these noise vectors are drawn is called the latent space. The generator maps each point in it to one generated sample.
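A minimal sketch of this mapping in plain Python follows. It assumes a hypothetical two-layer fully connected generator (dense layer, ReLU, dense layer) with a `tanh` output, which is a common choice when image pixels are scaled to [-1, 1]. The layer sizes are arbitrary and the weights are random, so the output is not yet realistic. Training is what shapes the mapping.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Random initial weights (small Gaussian) and zero biases for a dense layer."""
    weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(x, layer):
    """Affine map: one output per weight row."""
    weights, biases = layer
    return [sum(w_i * x_i for w_i, x_i in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def generate(z, hidden, output):
    """Latent vector -> dense -> ReLU -> dense -> tanh -> synthetic sample."""
    h = [max(0.0, t) for t in dense(z, hidden)]
    return [math.tanh(t) for t in dense(h, output)]


rng = random.Random(42)
latent_dim, hidden_dim, data_dim = 8, 16, 4
hidden = init_layer(latent_dim, hidden_dim, rng)
output = init_layer(hidden_dim, data_dim, rng)

z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]   # one point in the latent space
sample = generate(z, hidden, output)                    # a 4-value synthetic sample
```

The mapping is deterministic: the same latent vector always yields the same sample. Variety comes from drawing a new `z` each time.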

Steps to Implement Generative Adversarial Networks

1. Generator and Discriminator Architectures

A GAN consists of two main components: the Generator and the discriminator. The Generator is a neural network which takes random noise as input and generates synthetic samples, such as images or text. The discriminator is another neural network that receives both real samples from the dataset and synthetic samples generated by the Generator. Its task is to distinguish between real and fake samples.

2. Model Parameter Initialization

Before training, the Generator and the discriminator parameters are randomly initialized. These parameters are the numerical values determining how the neural networks transform the input data.

3. Generating Synthetic Samples

During training, the Generator generates synthetic samples by taking random noise as input and producing output samples. These samples are initially random and don’t resemble the real data.

4. Training the Discriminator

To train the discriminator, it is fed a combination of real and generated samples. The discriminator learns to distinguish between real and fake samples by adjusting its parameters based on the input. It tries to correctly classify real samples as real and generated samples as fake.

5. Training the Generator

The objective of the Generator is to produce synthetic samples that can fool the discriminator into classifying them as real. The Generator’s parameters are adjusted based on the feedback it receives from the discriminator. By minimizing the discriminator’s ability to differentiate between real and generated samples, the Generator improves its ability to generate more realistic outputs.

6. Iterative Training Process

The training process involves alternating the training of the discriminator and the Generator in multiple iterations. Each iteration consists of feeding real and generated samples to the discriminator, updating its parameters, and then training the Generator to fool the updated discriminator. This iterative process helps both networks improve over time.
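The alternating loop in steps 3 to 6 can be sketched end to end on a deliberately tiny problem. The setup below is an illustrative assumption, not a production recipe:

- Real data come from a normal distribution with mean `real_mean` and standard deviation 1.
- The generator is `G(z) = z + b`, with a single learnable shift `b`.
- The discriminator is logistic, `D(x) = sigmoid(w*x + c)`.

Each iteration performs one discriminator step and then one generator step against the updated discriminator. `train_toy_gan` is a hypothetical name.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=3000, batch=64, lr=0.05, real_mean=1.0, seed=0):
    """Alternating GAN training on 1-D data.

    Real data: N(real_mean, 1).  Generator: G(z) = z + b with z ~ N(0, 1).
    Discriminator: D(x) = sigmoid(w*x + c).
    Returns the final b and its value after every iteration.
    """
    rng = random.Random(seed)
    b = -3.0            # generator parameter, starting far from the real data
    w, c = 0.0, 0.0     # discriminator parameters
    history = []
    for _ in range(steps):
        # Discriminator step: binary cross-entropy, real -> 1, generated -> 0
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        grad_w = grad_c = 0.0
        for x, y in [(x, 1.0) for x in real] + [(x, 0.0) for x in fake]:
            err = sigmoid(w * x + c) - y          # d(BCE)/d(logit)
            grad_w += err * x
            grad_c += err
        w -= lr * grad_w / (2 * batch)
        c -= lr * grad_c / (2 * batch)

        # Generator step against the updated discriminator:
        # non-saturating loss -log D(G(z)); its derivative w.r.t. b is -(1 - D) * w
        grad_b = 0.0
        for _ in range(batch):
            x = rng.gauss(0.0, 1.0) + b
            grad_b += -(1.0 - sigmoid(w * x + c)) * w
        b -= lr * grad_b / batch
        history.append(b)
    return b, history


final_b, trajectory = train_toy_gan()
```

On this toy problem `b` should drift from its starting value of -3 toward `real_mean`, possibly overshooting and oscillating before it settles. A linear discriminator can only detect differences in the mean, which is why the generator here learns only a shift. Real GANs use deep networks on both sides and their training is far less predictable.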

7. Convergence

The GAN training process continues until a certain convergence criterion is met. This criterion can be a predefined number of iterations or a measure of the quality of generated samples. Convergence is reached when the Generator produces synthetic samples visually or statistically similar to the real data, making it difficult for the discriminator to distinguish between them.
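Under the minimax formulation of Goodfellow et al. (2014), this convergence point can be stated precisely. Let $p_{\text{data}}$ denote the real data distribution and $p_g$ the distribution of generated samples. For a fixed generator, the optimal discriminator is

$$
D^*(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
$$

When the generator matches the data, $p_g = p_{\text{data}}$, the best the discriminator can do is $D^*(x) = \tfrac{1}{2}$ everywhere, which amounts to chance-level guessing. This is an idealized target. In practice, training is stopped by an iteration budget or a sample-quality measure, as described above.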

Applications of Generative Adversarial Networks

Generative Adversarial Networks have found numerous applications across various domains:

1. Image generation

GANs can generate realistic images, enabling applications such as photorealistic image synthesis, artwork creation, and virtual world generation.

Generated images are also useful for data augmentation, which artificially increases the amount of training data by generating new data points from existing data. Augmentation either adds small changes to the data or uses machine learning models to generate new data points in the latent space of the original data. GANs can generate such additional training data, which can improve the performance of other machine learning models.
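A minimal sketch of GAN-based augmentation in plain Python follows. It assumes a trained conditional generator is available as a callable `generate(label, rng)`. Here a hypothetical stand-in, `toy_generator`, simply draws class-dependent Gaussian noise and is not a real GAN. The hypothetical helper `augment_with_synthetic` adds a fixed number of synthetic examples per class and shuffles them in with the real ones.

```python
import random


def augment_with_synthetic(real, generate, per_class, rng):
    """Add `per_class` synthetic examples for every label found in `real`.

    real:     list of (sample, label) pairs
    generate: callable (label, rng) -> sample, standing in for a trained
              conditional generator (hypothetical interface)
    """
    labels = sorted({label for _, label in real})
    synthetic = [(generate(label, rng), label)
                 for label in labels for _ in range(per_class)]
    combined = real + synthetic
    rng.shuffle(combined)          # mix real and synthetic examples
    return combined


def toy_generator(label, rng):
    """Hypothetical stand-in: class-dependent Gaussian noise, not a real GAN."""
    return rng.gauss(3.0 * label, 1.0)


rng = random.Random(0)
real = [(0.1, 0), (-0.4, 0), (3.2, 1)]
augmented = augment_with_synthetic(real, toy_generator, per_class=2, rng=rng)
```

Generating per class keeps each synthetic example paired with a label, so the augmented set can be used directly to train a classifier.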

2. Anomaly detection

An anomaly is a data point or suspicious event that deviates from the baseline pattern. When data unexpectedly deviates from the established record, it can be an early sign of a system failure, a security breach, or a newly discovered security vulnerability. GANs can support anomaly detection by learning the distribution of normal data and flagging data points that deviate from it.
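One simple way to use a GAN for this is to reuse the trained discriminator as a normality score and flag inputs that it rates as unlikely to be real. The plain-Python sketch below shows this heuristic under stated assumptions. It is not the only approach: methods such as AnoGAN also search the generator’s latent space and measure reconstruction error. The `discriminator` function is a hypothetical hand-written stand-in for a network trained on normal data centred at 0, and the 0.5 threshold is an arbitrary choice.

```python
import math


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def discriminator(x):
    """Hypothetical stand-in for a discriminator trained on normal data centred at 0.

    Its 'real' probability drops as x moves away from that data.
    """
    return sigmoid(2.0 - x * x)


def flag_anomalies(points, score=discriminator, threshold=0.5):
    """Return the points the discriminator considers unlikely to be real."""
    return [x for x in points if score(x) < threshold]


readings = [0.1, -0.3, 1.0, 5.0, -4.0]
anomalies = flag_anomalies(readings)
```

Here the readings far from the normal data (5.0 and -4.0) are flagged, while values close to 0 are accepted.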

3. Style transfer

Style transfer is a technique in computer vision and graphics that combines one image’s content with another image’s style to generate a new image. It aims to create an image that inherits the visual style of one image while preserving the content of the original. GANs can transfer the style of one image to another, enabling applications such as image-to-image translation and deepfakes.
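For unpaired style transfer, GAN-based models such as CycleGAN add a cycle-consistency loss: translating an image to the other domain and back should recover the original. The plain-Python sketch below illustrates this loss. One-dimensional functions stand in for the two translation networks, and the functions `to_b`, `to_a_good`, and `to_a_bad` are hypothetical. The loss is the mean L1 distance in each direction.

```python
def mean_l1(a, b):
    """Mean absolute difference between two equal-length lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(to_b, to_a, xs_a, xs_b):
    """A -> B -> A should give back the A samples, and B -> A -> B the B samples."""
    forward = mean_l1([to_a(to_b(x)) for x in xs_a], xs_a)
    backward = mean_l1([to_b(to_a(y)) for y in xs_b], xs_b)
    return forward + backward


# Hypothetical 1-D "translators" standing in for two image-to-image networks
def to_b(x):
    return 2.0 * x + 1.0


def to_a_good(y):
    return (y - 1.0) / 2.0   # exact inverse of to_b, so every cycle closes


def to_a_bad(y):
    return y - 1.0           # not an inverse, so the cycle does not close


samples_a = [0.0, 0.5, 1.0]
samples_b = [1.0, 3.0]
good = cycle_consistency_loss(to_b, to_a_good, samples_a, samples_b)
bad = cycle_consistency_loss(to_b, to_a_bad, samples_a, samples_b)
```

In a full model this term is added to the usual adversarial losses. The adversarial losses make translated images look like the target domain, and the cycle term keeps the content recoverable.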

Types of Generative Adversarial Networks

- Vanilla GANs: Also known as standard GANs, they comprise a generator and a discriminator network. The Generator generates synthetic samples, while the discriminator distinguishes between real and fake samples. Both networks are trained adversarially to improve their performance.

- Conditional GANs: In conditional GANs, both the Generator and discriminator are conditioned on additional information, such as class labels or attribute vectors. This enables more controlled generation, where the Generator can generate samples based on specific conditions or attributes.

- Deep Convolutional GANs (DCGANs): DCGANs apply convolutional neural networks (CNNs) in both the Generator and discriminator architectures. These architectures are commonly used for image synthesis tasks and are known for more stable training and higher-quality images than earlier fully connected GANs.

- Wasserstein GANs (WGANs): WGANs introduce a different loss function based on the Wasserstein distance or Earth Mover’s distance, providing better stability during training. WGANs aim to address the mode collapse issue and provide more meaningful gradients to guide the Generator’s learning.

- Progressive GANs: Progressive GANs generate high-resolution images by progressively growing both the Generator and discriminator. Starting from a low resolution, the networks are trained, and then additional layers are added to generate higher resolution images. This allows for the generation of realistic and detailed images.

- CycleGANs: CycleGANs are designed for image-to-image translation tasks without paired training data. They consist of two generators and two discriminators, where the generators learn to translate images from one domain to another, and the discriminators aim to distinguish between real and translated images. CycleGANs are useful for tasks like style transfer, object transfiguration, and domain adaptation.

- StarGANs: StarGANs extend conditional GANs to multi-domain image translation. They allow for one Generator to translate images between multiple domains, enabling the conversion of images across different attributes or styles in a single model.

- StackGANs: StackGANs generate images in a two-stage process. The first stage generates a low-resolution image from a text description and random noise. The second stage refines that image into a higher-resolution one, again using the text description as conditioning information. StackGANs are particularly useful for text-to-image synthesis tasks.

- InfoGANs: InfoGANs aim to learn disentangled representations of the input data by maximizing the mutual information between a subset of the Generator’s input and the generated samples. This allows for explicit control over certain attributes of the generated data.
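Two of these variants change only a few lines of a vanilla GAN, as the minimal plain-Python sketch below shows. All function names are hypothetical.

- A conditional GAN can feed the condition to the generator by concatenating a one-hot class label to the noise vector. This is one common choice among several.
- A WGAN replaces binary cross-entropy with critic scores. The critic minimizes `mean(fake) - mean(real)`, and the generator minimizes `-mean(fake)`.
- The original WGAN paper enforced the critic’s Lipschitz constraint by clipping its weights to a small range. That paper used 0.01.

```python
def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot list."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def conditional_input(z, label, num_classes):
    """Conditional GAN: append the one-hot condition to the noise vector."""
    return list(z) + one_hot(label, num_classes)


def mean(values):
    return sum(values) / len(values)


def wgan_critic_loss(critic_real, critic_fake):
    """WGAN critic minimizes mean(fake scores) - mean(real scores)."""
    return mean(critic_fake) - mean(critic_real)


def wgan_generator_loss(critic_fake):
    """WGAN generator minimizes -mean(fake scores)."""
    return -mean(critic_fake)


def clip_weights(weights, clip=0.01):
    """Weight clipping, the original WGAN's crude way to keep the critic Lipschitz."""
    return [max(-clip, min(clip, w)) for w in weights]
```

The critic’s outputs are unbounded scores rather than probabilities, which is why no sigmoid appears in the WGAN losses.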

Conclusion

Generative models, particularly Generative Adversarial Networks, have transformed the field of machine learning by enabling the generation of new data samples that resemble the training data distribution. The core idea is to learn the underlying probability distribution of the data implicitly, through the competition between generator and discriminator, rather than by writing it down explicitly. With their ability to generate realistic and diverse samples, GANs have become a powerful tool with applications across many domains. As research in this area continues to advance, we can expect further innovations in generative models.
