GridSearchCV and RandomizedSearchCV: Python code

Do you want to improve the accuracy of your machine learning model? There are several ways to do it, and one of them is hyperparameter tuning, which most data scientists deal with when implementing machine learning models. So what does the term mean? Hyperparameters are like handles that control the behavior or the output of an algorithm. They are passed to the algorithm as arguments, set before training rather than learned from the data, and changing their values changes the output. In this tutorial, we introduce GridSearchCV and RandomizedSearchCV and their implementation in Python.

GridSearchCV–

It is one of the most basic hyperparameter tuning techniques. You specify a list of candidate values for each hyperparameter, and a model is built for every possible combination of those values. The performance of each model is evaluated with cross-validation, and the best-performing combination is selected.
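To make "every possible combination" concrete, here is a minimal sketch that expands a small grid using only the standard library. The candidate values and the helper name `expand_grid` are made up for illustration; this is not how scikit-learn is implemented internally, only the same idea in a few lines.

```python
from itertools import product

# Hypothetical candidate values, chosen only for illustration.
param_grid = {
    "max_depth": [10, 11, 12],
    "max_features": [1, 2],
}

def expand_grid(grid):
    """Return every combination of the candidate values as a list of dicts."""
    names = list(grid)
    return [
        dict(zip(names, values))
        for values in product(*(grid[name] for name in names))
    ]

candidates = expand_grid(param_grid)
print(len(candidates))  # 3 * 2 = 6 combinations
print(candidates[0])    # {'max_depth': 10, 'max_features': 1}
```

The number of candidates is the product of the list lengths, so it grows quickly as more hyperparameters or values are added.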

RandomizedSearchCV–

Here, instead of trying every combination, a fixed number of hyperparameter combinations is sampled at random from the specified lists or distributions, and only those are evaluated. Let's understand both techniques through their Python code.
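The random sampling idea can be sketched with the standard library as follows. The value ranges, the helper name `sample_candidates`, and the seed are assumptions for illustration. Unlike a real search, this simplified version may draw the same combination twice.

```python
import random

# Hypothetical candidate values, chosen only for illustration.
param_distributions = {
    "max_depth": list(range(10, 25)),
    "max_features": list(range(1, 14)),
}

def sample_candidates(distributions, n_iter, seed=0):
    """Draw n_iter random combinations, one value per hyperparameter."""
    rng = random.Random(seed)
    return [
        {name: rng.choice(values) for name, values in distributions.items()}
        for _ in range(n_iter)
    ]

sampled = sample_candidates(param_distributions, n_iter=10)
print(len(sampled))  # 10 combinations, out of 15 * 13 = 195 possible
```

Only the sampled combinations would be trained and scored, which is where the time saving comes from.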

About dataset

Problem statement: Diagnosing heart disease.

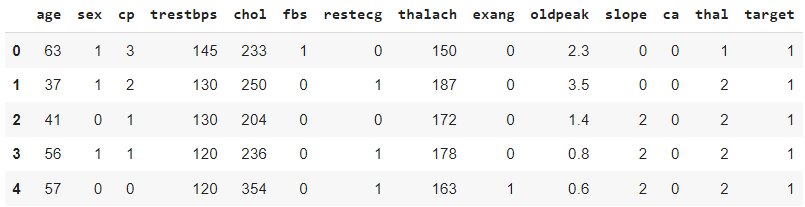

We have imported 'heart.csv', a freely available dataset that you can download from Kaggle.com. It contains data for heart disease patients and includes the following features:

- age: age in years

- sex

- cp: chest pain type

- trestbps: resting blood pressure (in mm Hg on admission to the hospital)

- chol: serum cholesterol in mg/dl

- fbs: fasting blood sugar > 120 mg/dl (1 = true; 0 = false)

- restecg: resting electrocardiographic results

- thalach: maximum heart rate achieved

- exang: exercise-induced angina (1 = yes; 0 = no)

- oldpeak: ST depression induced by exercise relative to rest

- slope: the slope of the peak exercise ST segment (0: downsloping; 1: flat; 2: upsloping)

- ca: the number of major vessels (0–3)

- thal: a blood disorder called thalassemia (value 0: NULL)

- target: heart disease (1 = no, 0 = yes)


Let's jump to the Python code

1. Importing Libraries

    import pandas as pd
    import numpy as np
    from sklearn.ensemble import RandomForestClassifier

2. Reading the dataset

    data = pd.read_csv('heart.csv')
    data.head()

3. Dependent and independent variables

    # Get the target data
    y = data['target']

    # Load the X variables into a pandas DataFrame
    X = data.drop(['target'], axis=1)

4. Splitting dataset into Training and Testing Set

    from sklearn.model_selection import train_test_split

    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.20, random_state=101)

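In steps 3 and 4, we separated the independent predictor variables and the target variable into X and y, and then split both X and y into training and testing sets with the train_test_split() function. With test_size=0.20, 20% of the rows are held out for testing, and random_state=101 makes the split reproducible.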
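As a rough illustration of what an 80/20 split does, the sketch below shuffles row indices and holds out a fraction of them. The helper `simple_train_test_split` is hypothetical and is a simplified stand-in, not scikit-learn's actual implementation, so it will not reproduce the same rows as train_test_split().

```python
import random

def simple_train_test_split(rows, test_size=0.20, seed=101):
    """Shuffle row indices and hold out a test_size fraction for testing."""
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)
    n_test = round(len(rows) * test_size)
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return [rows[i] for i in train_idx], [rows[i] for i in test_idx]

rows = list(range(100))  # stand-in for 100 dataset rows
train, test = simple_train_test_split(rows)
print(len(train), len(test))  # 80 20
```

The key point is that the test rows never take part in training, so the test score estimates performance on unseen data.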

5. Implementing random forest classifier

    rf_Model = RandomForestClassifier()

Note: For a regression problem, you can use RandomForestRegressor instead.

6. Fitting the model

    # fit() returns the fitted estimator, which the later steps refer to as `model`
    model = rf_Model.fit(X_train, y_train)

7. Checking the accuracy score

    print(f'Accuracy - : {model.score(X_test, y_test):.3f}')

Output:

    Accuracy - : 0.820
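For a classifier, score() reports the mean accuracy, that is, the fraction of test rows whose predicted label matches the true label. A minimal sketch of that calculation, with made-up labels and a hypothetical helper named `accuracy`:

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

# Made-up labels for illustration: 4 of the 5 predictions are correct.
y_true = [1, 0, 1, 1, 0]
y_pred = [1, 0, 0, 1, 0]
print(f"Accuracy - : {accuracy(y_true, y_pred):.3f}")  # Accuracy - : 0.800
```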

We get an accuracy of 82% without any hyperparameter tuning. Now let's check the accuracy with GridSearchCV and RandomizedSearchCV.

With GridSearchCV

8. Implementing GridSearchCV

    from sklearn.model_selection import GridSearchCV

9. Defining the parameters

    # max_features must be at least 1 and at most the number of feature columns (13 here)
    forest_params = [{'max_depth': list(range(10, 15)), 'max_features': list(range(1, 14))}]

    clf = GridSearchCV(model, forest_params, cv=10, scoring='accuracy')

- cv=10: 10-fold cross-validation. The training data is split into ten folds, and each candidate is scored on every fold in turn.

- max_depth: Maximum depth of each tree.

- max_features: Maximum number of features considered when looking for the best split.
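The cv=10 setting can be pictured with the sketch below, which cuts row indices into ten folds and uses each fold once as the validation set. The helper `k_fold_indices` is hypothetical and deliberately simple: it does no shuffling and no stratification by class, which a real cross-validation splitter for a classifier may do.

```python
def k_fold_indices(n_samples, n_splits=10):
    """Yield (train_idx, val_idx) pairs; each fold is the validation set once."""
    indices = list(range(n_samples))
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [
        n_samples // n_splits + (1 if i < n_samples % n_splits else 0)
        for i in range(n_splits)
    ]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size

folds = list(k_fold_indices(n_samples=25, n_splits=10))
print(len(folds))                      # 10
print([len(val) for _, val in folds])  # [3, 3, 3, 3, 3, 2, 2, 2, 2, 2]
```

Each candidate's cross-validation score is the average of its scores over the ten validation folds.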

10. Fitting GridSearchCV

    best_clf = clf.fit(X_train, y_train)

11. Finding the best parameters

    best_clf.best_estimator_

Output:

    RandomForestClassifier(max_depth=12, max_features=1)

In short, hyperparameter tuning means finding the combination of hyperparameters that gives the best performance according to the chosen scoring metric.

12. Finding accuracy score with GridSearchCV

    print(f'Accuracy - : {best_clf.score(X_test, y_test):.3f}')

Output:

    Accuracy - : 0.852

With RandomizedSearchCV

13. Implementing RandomizedSearchCV

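    from sklearn.model_selection import RandomizedSearchCV

    # max_features must be at least 1; values are kept within the 13 feature columns
    forest_params = [{'max_depth': list(range(10, 25)), 'max_features': list(range(1, 14))}]

    # By default, RandomizedSearchCV samples n_iter=10 combinations
    rf_RandomGrid = RandomizedSearchCV(model, forest_params, cv=10, scoring='accuracy')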

14. Fitting RandomizedSearchCV

    rf_RandomGrid.fit(X_train, y_train)

15. Finding the best parameters

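    # Inspect the randomized search object; best_clf is the grid search from step 10
    rf_RandomGrid.best_estimator_

Output (as originally reported, from a call on best_clf):

    RandomForestClassifier(max_depth=12, max_features=1)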

16. Checking the accuracy using RandomizedSearchCV

    print(f'Test Accuracy - : {rf_RandomGrid.score(X_test, y_test):.3f}')

Output:

    Test Accuracy - : 0.856

Summary of this code

The accuracy is almost the same in both cases, with RandomizedSearchCV slightly ahead (0.856 versus 0.852). Not every case will turn out like this; the result depends on the dimensionality of the data and on the model you are using.

Performance comparison

We implemented the Random Forest algorithm without hyperparameter tuning and got the lowest accuracy, 82%. We then implemented GridSearchCV and RandomizedSearchCV and checked the accuracy score with both techniques, and both gave better accuracy (85.2% and 85.6%). Which of the two searches is better? It depends on the size and dimensionality of your data. For smaller ones, GridSearchCV is the better choice because it can afford to try every combination. With larger data and more dimensions, RandomizedSearchCV is the better option because it evaluates far fewer combinations to reach a good outcome.
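The difference in cost can be estimated with simple arithmetic. The sketch below assumes a grid of 5 max_depth values and 13 max_features values, 10-fold cross-validation, and 10 sampled combinations for the randomized search. The helper names are hypothetical, and the counts leave out the final refit on the full training set.

```python
from math import prod

def grid_fits(param_grid, cv):
    """Grid search fits one model per combination per fold."""
    return prod(len(values) for values in param_grid.values()) * cv

def randomized_fits(n_iter, cv):
    """Randomized search fits one model per sampled combination per fold."""
    return n_iter * cv

# Assumed grid for illustration: 5 depth values and 13 feature-count values.
grid = {"max_depth": list(range(10, 15)), "max_features": list(range(1, 14))}
print(grid_fits(grid, cv=10))             # 5 * 13 * 10 = 650
print(randomized_fits(n_iter=10, cv=10))  # 10 * 10 = 100
```

Adding a third hyperparameter with 10 values would multiply the grid search cost by 10, while the randomized search cost would stay at n_iter times cv.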

Endnotes

In this blog, we used a random forest classifier as the example because it has many hyperparameters, and we checked how tuning them changes the model's accuracy. We implemented the Python code for GridSearchCV and RandomizedSearchCV. For a conceptual understanding of hyperparameter tuning, you can read my previous blog, which covered this topic from a beginner's point of view.
