Introduction to Maximum Likelihood Estimation: Definition, Type and Calculation

Maximum Likelihood Estimation (MLE) estimates the parameters of a probability distribution by choosing the values that maximize the likelihood function. This article briefly discusses the definition, types, and calculation of MLE.

A probability distribution is described by a set of parameters that help estimate the probability that a certain event occurs, or the variability of its occurrence. Parameters are the numerical values that characterize the distribution, such as its location, spread, and shape. Different distributions have different parameters: the binomial distribution has the number of trials and the probability of success, the normal distribution has the mean and the variance, and the Poisson distribution has the mean. The problem is how to find the best value for a parameter. This is where estimators come into the picture: an estimator is a function of the observed data that gives an approximate value of the parameter. This article briefly discusses Maximum Likelihood Estimation, its formula, and how to calculate it.
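To make the idea of an estimator as a function of the data concrete, here is a minimal sketch. The true mean and standard deviation, the sample size, and the helper name `estimate_normal_parameters` are all assumptions chosen for illustration, not values from this article.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is reproducible

# ASSUMPTION: illustrative "true" parameters of a normal distribution.
true_mean, true_std = 5.0, 2.0
sample = [random.gauss(true_mean, true_std) for _ in range(10_000)]

def estimate_normal_parameters(data):
    """Estimators: functions of the data that return approximate parameter values."""
    mean_hat = statistics.fmean(data)
    # pvariance divides by n (not n - 1).
    variance_hat = statistics.pvariance(data, mu=mean_hat)
    return mean_hat, variance_hat

mean_hat, variance_hat = estimate_normal_parameters(sample)
print("estimated mean:", mean_hat)
print("estimated variance:", variance_hat)
```

With a large sample, the two estimates should land close to the assumed values of 5 and 4 (the variance being the square of the standard deviation).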

So, let’s dive deep to know more about it.

The fundamental concept behind Maximum Likelihood Estimation is to select the parameter values that make the observed data most likely. MLE is used for data consisting of n independent and identically distributed samples: X1, X2, X3, …, Xn.

But, before getting deeper into MLE, let’s first understand Likelihood.

What is Likelihood, and How Does it Differ from Probability?

Likelihood measures how well a candidate parameter value (or distribution) explains data that has already been observed.

- Here, we observe the outcome of the sample data and then estimate the most likely parameter or probability distribution.

Probability is simply the chance of something happening.

- The probability of an event is computed from a probability distribution and its parameters.

- Here, the parameters are assumed to be known.

Let’s understand with the help of an example.

In India, we are biased towards one game, i.e., cricket, and in cricket, most of the time, we want to bat first. But to decide who will bat first, we have to toss a coin.

Suppose the coin is tossed, and one of the captains calls heads and wins the toss.

Now, if we know nothing else,

Probability (winning captain chooses to bat first) = Probability (winning captain chooses not to bat first) = ½ = 50%.

But a cricket match is not just about batting first; it is always about winning the game.

So, whether the winning captain chooses to bat first depends on conditions such as humidity, weather, swing in the pitch, type of pitch, wind speed, team strengths and weaknesses, and a lot more. This is what likelihood is about: using what has been observed to estimate the parameters that lead to winning the game. Depending on these conditions, the chance of batting first may be anywhere from 0 to 100%, rather than a fixed 50%.
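The same distinction can be sketched with a coin. This is an illustration only: the 10 tosses and 7 observed heads are made-up numbers, and `binomial_pmf` is a helper defined here for the example.

```python
import math

def binomial_pmf(k, n, p):
    """P(K = k) for K ~ Binomial(n, p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

n_tosses = 10  # ASSUMPTION: illustrative number of tosses

# Probability: the parameter is fixed (a fair coin) and the outcome varies.
fair_p = 0.5
probabilities = [binomial_pmf(k, n_tosses, fair_p) for k in range(n_tosses + 1)]
print("probabilities over all outcomes sum to:", sum(probabilities))

# Likelihood: the outcome is fixed (7 heads observed) and the parameter varies.
observed_heads = 7  # ASSUMPTION: illustrative observation
candidates = [i / 10 for i in range(1, 10)]  # p = 0.1, 0.2, ..., 0.9
likelihoods = {p: binomial_pmf(observed_heads, n_tosses, p) for p in candidates}
best_p = max(likelihoods, key=likelihoods.get)
print("candidate with the highest likelihood:", best_p)
```

Probabilities over all outcomes add up to 1 for a fixed parameter, while likelihood values over candidate parameters need not; they are only compared with each other to find the candidate that explains the data best.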

Types of Likelihood Function

Depending on the type of random variable (discrete or continuous), the likelihood function can be given as:

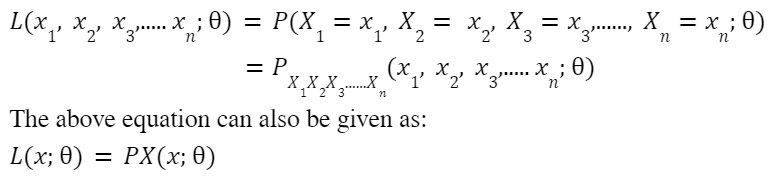

Let X1, X2, X3, …, Xn be a random sample from a distribution with the parameter theta, and let x1, x2, x3, …, xn be the observed values of X1, X2, X3, …, Xn.

Case – 1: Discrete Random Variable

If the Xi's are discrete random variables, then the likelihood function is given as:
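For the discrete case, the likelihood is usually written as the joint probability of the observed values, viewed as a function of theta. A sketch in standard notation follows; the symbol P_{X_i} for the probability mass function is an assumption, and the product form relies on the samples being independent.

```latex
\[
L(x_1, x_2, \ldots, x_n; \theta)
  = P(X_1 = x_1, X_2 = x_2, \ldots, X_n = x_n; \theta)
  = \prod_{i=1}^{n} P_{X_i}(x_i; \theta)
\]
```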

Case – 2: Continuous Random Variable

If the Xi's are continuous random variables, then the likelihood function is given as:
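For the continuous case, the joint probability density takes the place of the joint probability. A sketch in standard notation follows; the symbol f for the density is an assumption, and the product form again relies on the samples being independent.

```latex
\[
L(x_1, x_2, \ldots, x_n; \theta)
  = f_{X_1 X_2 \cdots X_n}(x_1, x_2, \ldots, x_n; \theta)
  = \prod_{i=1}^{n} f_{X_i}(x_i; \theta)
\]
```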

Note:

- The likelihood changes with the value of the parameter: the observed data are more probable under parameter values close to the correct ones than under incorrect ones. For that reason, the likelihood is written as a function of the parameter, the likelihood function.

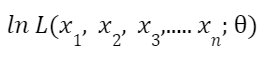

- In some cases, we use the log-likelihood function, which is given as:
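The log-likelihood is the natural logarithm of the likelihood. For independent samples the product turns into a sum, which is usually easier to differentiate. A sketch in standard notation, where f stands for the probability mass function or density of a single observation (a symbol assumed here):

```latex
\[
\ln L(x_1, x_2, \ldots, x_n; \theta)
  = \ln \prod_{i=1}^{n} f(x_i; \theta)
  = \sum_{i=1}^{n} \ln f(x_i; \theta)
\]
```

Because the logarithm is strictly increasing, the likelihood and the log-likelihood are maximized at the same value of theta.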

By now, we have a good understanding of what the likelihood function is.

So, let's now move to our main topic, Maximum Likelihood Estimation.

What is Maximum Likelihood Estimation?

Let X1, X2, X3, …, Xn be a random sample from any distribution with the parameter theta, and let x1, x2, x3, …, xn be the observed values of X1, X2, X3, …, Xn (X1 = x1, X2 = x2, …, Xn = xn). Then a maximum likelihood estimate of theta is a value that maximizes the likelihood function L(x1, x2, …, xn; theta).
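As a rough numerical illustration of this definition, the sketch below picks, from a grid of candidate values, the one with the highest log-likelihood. Everything specific in it is an assumption made for the example: the exponential model, the five data points, the grid, and the helper name `maximum_likelihood_estimate`.

```python
import math

def maximum_likelihood_estimate(log_likelihood, candidates):
    """Return the candidate parameter value with the highest log-likelihood."""
    return max(candidates, key=log_likelihood)

# ASSUMPTION: hypothetical observed waiting times, not data from the article.
sample = [0.8, 1.9, 0.4, 2.6, 1.3]

def exponential_log_likelihood(rate):
    # ln L(rate) = sum_i ln(rate * exp(-rate * x_i)) = n * ln(rate) - rate * sum(x_i)
    return len(sample) * math.log(rate) - rate * sum(sample)

# Candidate rates 0.001, 0.002, ..., 5.000 (a simple grid search).
candidates = [i / 1000 for i in range(1, 5001)]
rate_hat = maximum_likelihood_estimate(exponential_log_likelihood, candidates)

# For the exponential model the maximizer is known in closed form: 1 / sample mean.
closed_form = len(sample) / sum(sample)
print("grid-search estimate:", rate_hat)
print("closed-form estimate:", closed_form)
```

A grid search is used only because it mirrors the definition directly. In practice the maximum is usually found analytically or with a numerical optimizer.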

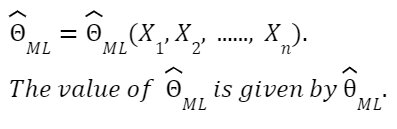

The maximum likelihood estimator (MLE) of the parameter theta is a random variable that is given by:
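In one common notation (the hats and the subscript ML are conventions assumed here), the estimator is a function of the random sample, and its value for the observed data is the maximizer of the likelihood:

```latex
\[
\hat{\Theta}_{ML} = \hat{\Theta}_{ML}(X_1, X_2, \ldots, X_n),
\qquad
\hat{\theta}_{ML} = \arg\max_{\theta} \, L(x_1, x_2, \ldots, x_n; \theta)
\]
```

The estimator is random because it depends on the random sample; the estimate is the single number obtained once the observed values are plugged in.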

Now, let’s take an example to find the value of the Maximum Likelihood Estimator.

Maximum Likelihood Estimation Example

Find the maximum likelihood estimate of theta if the Xi are binomial random variables with success probability theta and the observations are (x1, x2, x3, x4) = (1, 2, 3, 3).

Answer: First, we find the likelihood function. Since the Xi follow a binomial distribution, the corresponding probability mass function is:
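The binomial probability mass function and the resulting likelihood can be sketched as follows. The example does not state the number of trials, so it is written here as m (a symbol introduced for this sketch), and the final line assumes m = 3 purely for illustration.

```latex
\[
P_{X_i}(x; \theta) = \binom{m}{x} \theta^{x} (1 - \theta)^{m - x}
\]
\[
L(1, 2, 3, 3; \theta)
  = \prod_{i=1}^{4} \binom{m}{x_i} \theta^{x_i} (1 - \theta)^{m - x_i}
  = \binom{m}{1} \binom{m}{2} \binom{m}{3}^{2} \, \theta^{9} (1 - \theta)^{4m - 9}
\]
\[
\text{Assuming } m = 3: \quad L(1, 2, 3, 3; \theta) = 9 \, \theta^{9} (1 - \theta)^{3}
\]
```

The exponent 9 is the sum of the observations, 1 + 2 + 3 + 3, and 4m is the total number of trials across the four observations.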

Now, to find the value of theta that maximizes the likelihood, we take the derivative of the likelihood function (or, more conveniently, of the log-likelihood) with respect to theta and set it equal to zero.
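Writing m for the number of binomial trials (not stated in the example), the derivative of the log-likelihood is 9/theta - (4m - 9)/(1 - theta). Setting it to zero gives theta = 9/(4m), the total number of successes divided by the total number of trials. The sketch below checks this numerically under the assumption m = 3, which gives 9/12 = 0.75. The value of m, the grid, and the function names are choices made for this illustration.

```python
import math

observations = [1, 2, 3, 3]  # data from the example
n_trials = 3                 # ASSUMPTION: number of trials is not given in the article

def log_likelihood(theta, xs, m):
    """Binomial log-likelihood of the observations xs for success probability theta."""
    return sum(
        math.log(math.comb(m, x)) + x * math.log(theta) + (m - x) * math.log(1 - theta)
        for x in xs
    )

def score(theta, xs, m):
    """Derivative of the log-likelihood with respect to theta."""
    successes = sum(xs)
    failures = len(xs) * m - successes
    return successes / theta - failures / (1 - theta)

# Closed form: total successes divided by total trials.
closed_form = sum(observations) / (len(observations) * n_trials)

# Numerical check: the best value on a grid over (0, 1).
grid = [i / 1000 for i in range(1, 1000)]
grid_best = max(grid, key=lambda t: log_likelihood(t, observations, n_trials))

print("closed-form estimate:", closed_form)
print("grid-search estimate:", grid_best)
print("derivative at the estimate:", score(closed_form, observations, n_trials))
```

Both approaches should agree, and the derivative should vanish at the estimate. A different assumed number of trials changes the numerical answer but not the method.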

Conclusion

Maximum likelihood estimation finds the parameter value that maximizes the likelihood function. This article briefly discussed the likelihood function and maximum likelihood estimation of the parameters of a probability distribution.

Hope this article helps you to understand all the concepts of MLE.

FAQs

What is the difference between likelihood and probability?

Likelihood measures how well a candidate parameter value (or distribution) explains data that has already been observed: we observe the outcome of the sample data and then estimate the most likely parameter or probability distribution. Probability is simply the chance of something happening. The probability of an event is computed from a probability distribution and its parameters; here, the parameters are assumed to be known.

What is Maximum Likelihood Estimation?

Let X1, X2, X3, …, Xn be a random sample from any distribution with the parameter theta, and let x1, x2, x3, …, xn be the observed values of X1, X2, X3, …, Xn (X1 = x1, X2 = x2, …, Xn = xn). Then a maximum likelihood estimate of theta is a value that maximizes the likelihood function L(x1, x2, …, xn; theta).

Vikram has a Postgraduate degree in Applied Mathematics, with a keen interest in Data Science and Machine Learning. He has experience of 2+ years in content creation in Mathematics, Statistics, Data Science, and Mac...