Meet Hume AI: The First AI with Emotional Intelligence

Have you ever wondered whether technology could truly understand and respond to human emotions? Hume AI is working toward that future with AI that listens with empathy and speaks with care, changing how we interact with machines. Let's take a closer look!

Hume, a New York-based research lab and technology company, has introduced what can be called "The First AI with Emotional Intelligence".

What is Hume AI?

Hume AI is an AI technology focused on understanding human emotions to improve interactions between humans and machines. It aims to understand and respond to a wide range of emotional states, using these insights to guide AI development. By applying algorithms for emotional understanding, Hume AI seeks to create more empathetic and interactive technological solutions that enhance well-being and decision-making. Hume AI's main products are the Empathic Voice Interface (EVI) and related APIs.

This ability allows Hume AI to interact in a way that is considerate of human feelings, aiming to improve communication between humans and technology.

Think of Hume AI as a tech-savvy friend who's really good at reading people's emotions, helping technology understand and react to our feelings as naturally as a person might.

Hume AI is probably "THE HUMAN AI"

How Does Hume AI Work?

As per the official website, here's how Hume AI works:

1. Building AI Models

Hume AI constructs AI models that enable technology to communicate empathetically and learn to enhance people's happiness.

2. Understanding Emotional Expression

- Human communication, whether in person or through text, audio, or video, relies heavily on emotional expression.

- Hume AI's platform captures these emotional signals, allowing technology to attend to human well-being.

3. Empathic Voice Interface (EVI) API

- EVI is Hume's groundbreaking emotionally intelligent voice AI.

- It measures subtle vocal modulations and responds empathetically using an empathic large language model (eLLM).

- Trained on millions of human interactions, eLLM combines language modeling and text-to-speech with enhanced EQ, prosody, end-of-turn detection, interruptibility, and alignment.

4. Expression Measurement API

- Hume's expression measurement models for voice, face, and language are built on over 10 years of research.

- These models capture hundreds of dimensions of human expression in audio, video, and images.

5. Custom Models API

- The Custom Models API utilizes transfer learning from expression measurement models and eLLMs to offer custom insights.

- It can more accurately predict various outcomes, including toxicity, mood, driver drowsiness, or any other metric important to users.
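The general idea behind transfer learning can be illustrated with a minimal sketch: reuse the outputs of a pretrained model as fixed features and fit a small task-specific model on top. The code below does not call the Custom Models API and is not Hume's method. The feature names, the scores, and the nearest-centroid classifier are all assumptions chosen to keep the example self-contained.

```python
from typing import Dict, List, Sequence

Vector = Sequence[float]


def centroid(vectors: List[Vector]) -> List[float]:
    """Average a list of equal-length feature vectors column by column."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def fit(examples: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """Fit a nearest-centroid model: one averaged vector per label."""
    return {label: centroid(vectors) for label, vectors in examples.items()}


def predict(model: Dict[str, List[float]], features: Vector) -> str:
    """Return the label whose centroid is closest to the given features."""
    return min(model, key=lambda label: squared_distance(model[label], features))


# Hypothetical expression scores in the order [tiredness, concentration, excitement].
# In a real setup these would come from a pretrained expression model.
training = {
    "drowsy": [[0.9, 0.2, 0.1], [0.8, 0.3, 0.0]],
    "alert": [[0.1, 0.8, 0.5], [0.2, 0.9, 0.4]],
}

model = fit(training)
print(predict(model, [0.85, 0.25, 0.05]))  # prints "drowsy"
```

Only the small model on top is trained here; the (hypothetical) expression scores are treated as given, which is what makes this a transfer-learning setup rather than training from scratch.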

6. Language Model for EVI

- EVI employs Hume's empathic large language model (eLLM) and can integrate responses from an external language model (LLM) API.

- The demo incorporates Claude 3 Haiku.

7. Functionality of eLLM

- Hume's empathic large language model (eLLM) is a multimodal language model that considers both expression measures and language.

- It generates a language response and guides text-to-speech (TTS) prosody, enhancing empathetic communication.

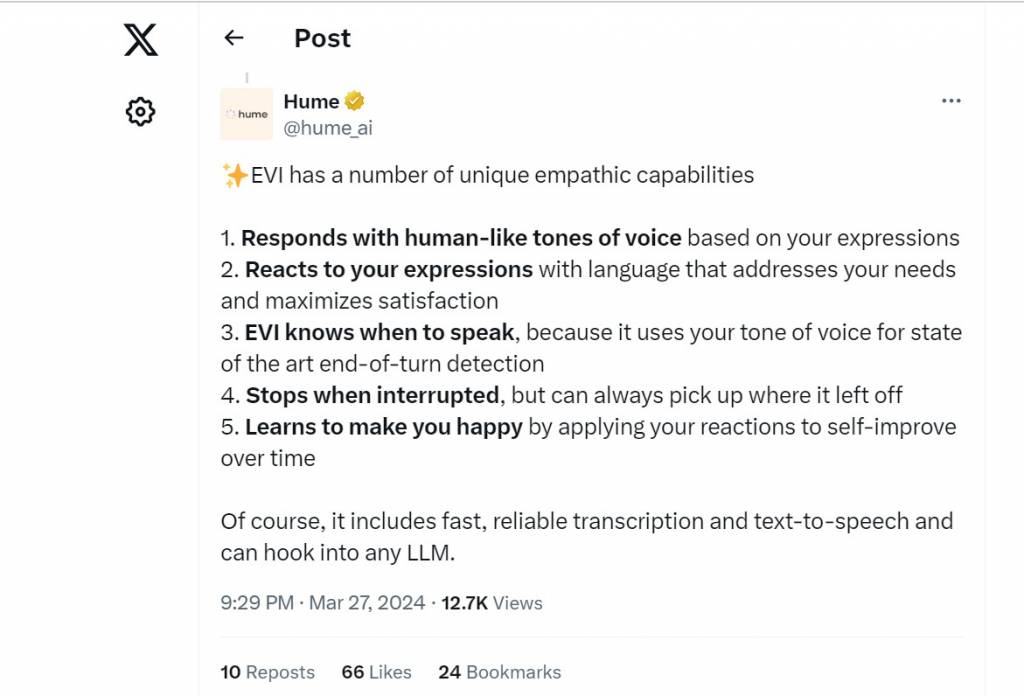

Hume AI's official X (formerly Twitter) account has posted about some of EVI's unique empathic capabilities.

How to Access Hume AI?

- Hume AI has not been fully released yet, but you can try the demo at https://demo.hume.ai/

- You can also sign up on the official website, https://www.hume.ai/, and use the beta version from there.

- Visit https://dev.hume.ai/intro for any further information you might need.

A beta version is a pre-release version of software made available to a select group of users to test under real conditions. It's usually more polished than an alpha version but not yet finalized, allowing developers to identify and fix bugs, gather user feedback, and make improvements before the official release.

How Can We Use Hume AI?

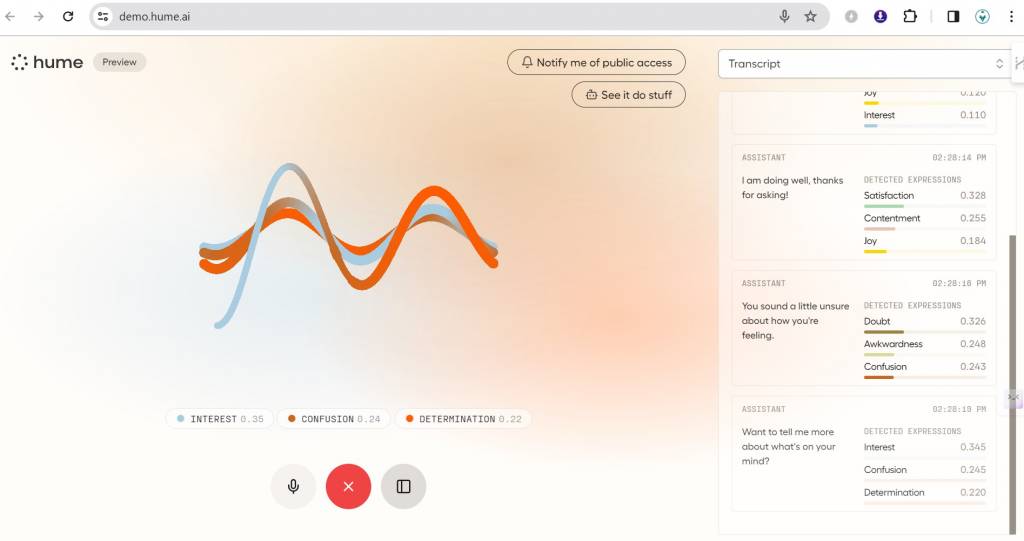

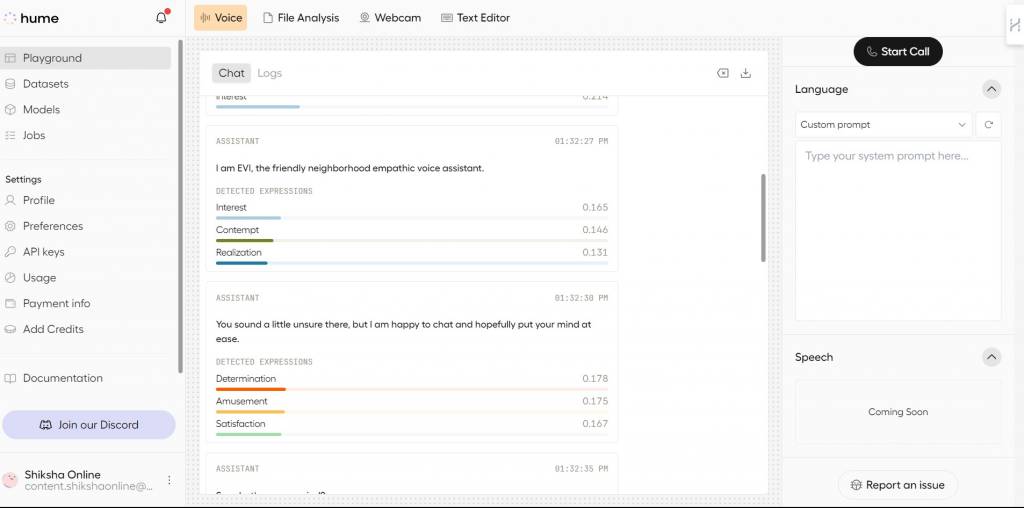

- First, to see the demo, go to the demo website mentioned above and start talking. You will get responses to all your questions, and it will feel like talking to a real human. The demo also shows the detected expressions, as you can see in the screenshots below.

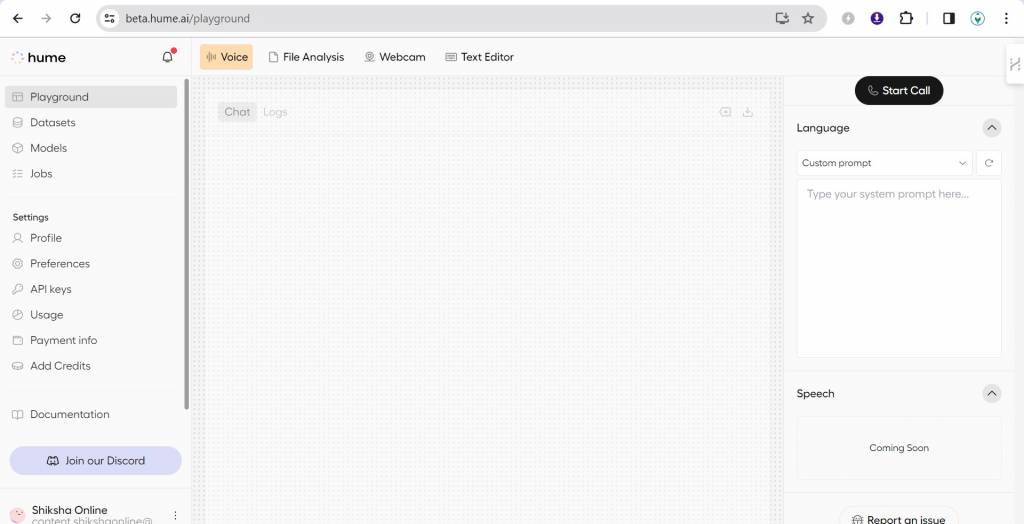

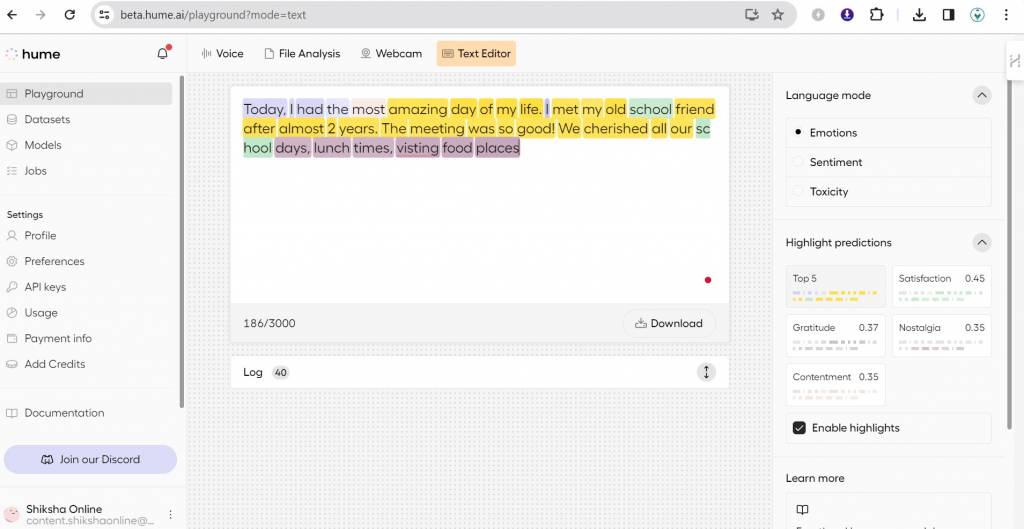

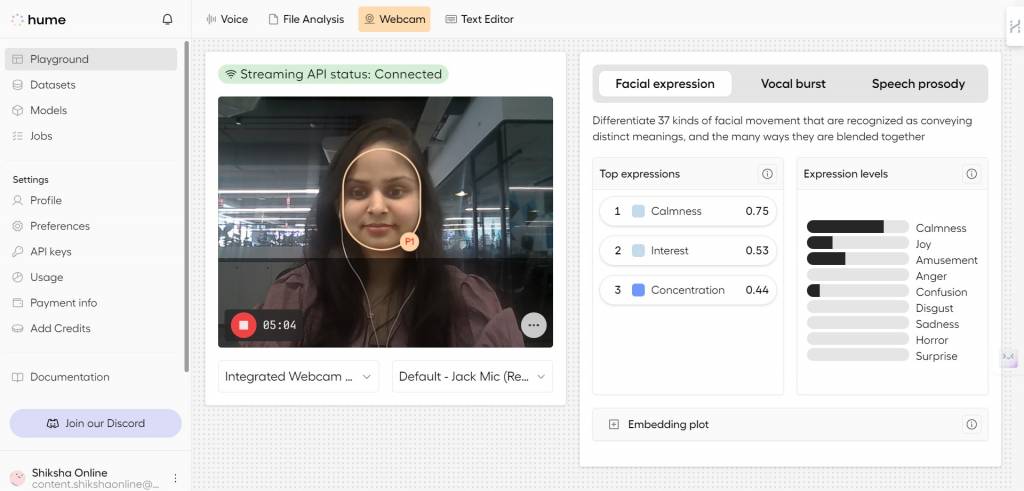

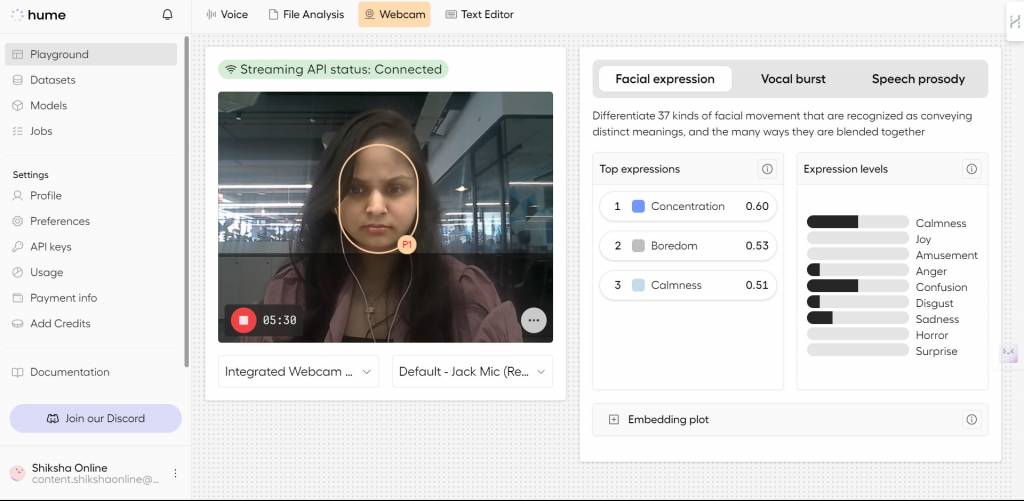

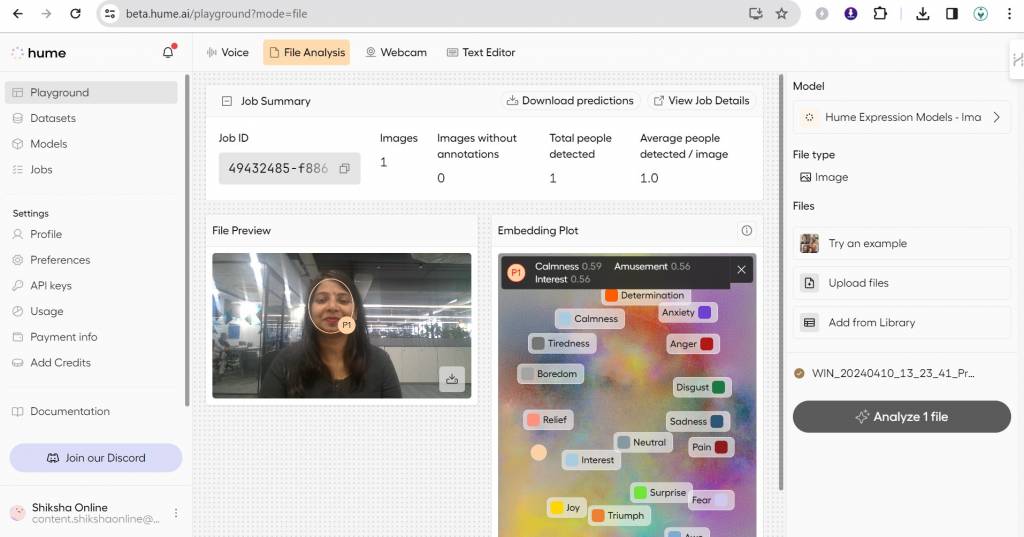

- In the beta version, you get access to Hume's Playground, where you will find four modes: Voice, File Analysis, Webcam, and Text Editor.

We tried all four one by one, and here are the results.

1. Text Editor

2. Webcam

Hume AI's Reviews

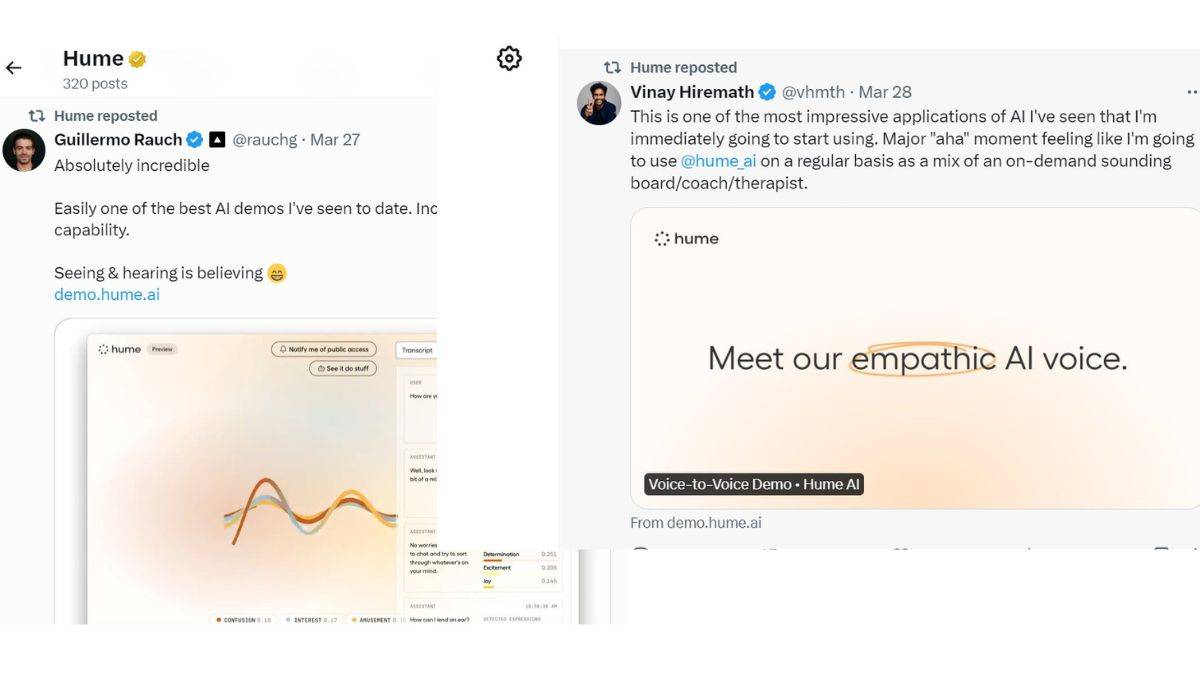

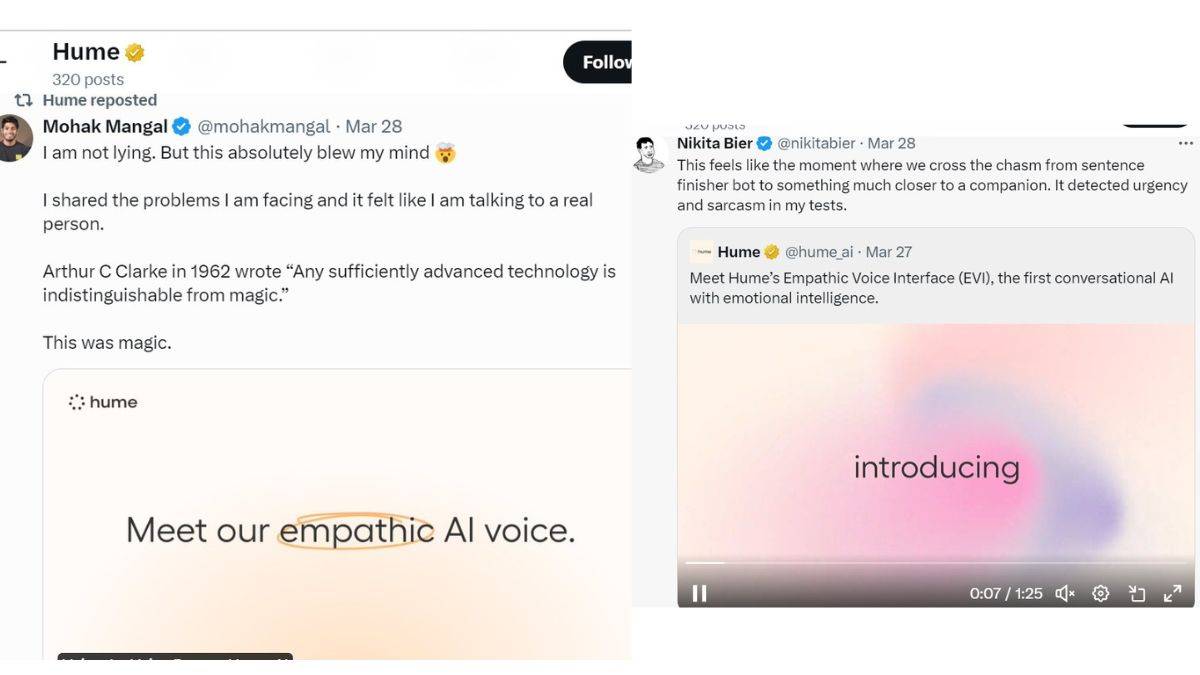

Some of the reviews we found on X (formerly Twitter) from people describing their experience with Hume AI are given below.

FAQs on Hume AI

What Are EVI's Expression Labels?

EVI's expression labels serve as indicators of modulations in tone of voice rather than direct emotional experiences. They act as proxies to understand nuances in vocal expressions.

How Customizable Is EVI?

With EVI's general release, a Configuration API will be available, allowing developers to customize various aspects of EVI, including the system prompt, speaking rate, choice of voice, the language model used, and more.

Why Does EVI Measure Prosody at the Sentence Level?

Prosody measurements at the word level can be highly context-dependent and unstable. Internal testing has shown that assessing prosody at the sentence level offers more stability and accuracy.
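One intuition for this answer is that averaging over a whole sentence smooths out word-to-word swings. The sketch below illustrates that with made-up numbers. The per-word scores and the simple mean are assumptions for illustration only and do not describe how EVI actually computes its measures.

```python
from statistics import mean
from typing import List


def sentence_score(word_scores: List[float]) -> float:
    """Average per-word scores into a single sentence-level score."""
    if not word_scores:
        raise ValueError("word_scores must not be empty")
    return mean(word_scores)


def spread(values: List[float]) -> float:
    """Difference between the largest and smallest value."""
    return max(values) - min(values)


# Hypothetical per-word scores for one expression across two sentences.
sentence_a = [0.10, 0.80, 0.20, 0.70]
sentence_b = [0.60, 0.15, 0.75, 0.50]

word_level = sentence_a + sentence_b
sentence_level = [sentence_score(sentence_a), sentence_score(sentence_b)]

print(f"word-level spread: {spread(word_level):.2f}")
print(f"sentence-level spread: {spread(sentence_level):.2f}")
```

With these invented values, the word-level scores swing widely while the two sentence-level averages stay close together, which is the kind of stability the answer above refers to.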

Where Can I Find Technical Documentation for EVI?

Comprehensive technical documentation for EVI, including API references, tutorials, sample implementations, and SDKs in Python & TypeScript, will be released alongside the general availability of EVI.

What Will Be the Pricing Model for EVI?

EVI's pricing is intended to be competitive with similar services, with detailed pricing information to be announced upon finalization.

How Do EVI's Expression Measures Work?

The expression measures reflect the prosody model's confidence that specific expressions are present in your tone of voice and language. The model is trained to identify vocal modulations and language patterns that convey certain emotions.
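If you treat these measures as a set of per-expression confidence scores, a common first step is to rank them and look at the strongest few. Here is a minimal sketch of that idea. The dictionary layout, the labels, and the score values are assumptions for illustration and are not taken from Hume's API.

```python
from typing import Dict, List, Tuple


def top_expressions(scores: Dict[str, float], k: int = 3) -> List[Tuple[str, float]]:
    """Return the k expression labels with the highest scores, highest first."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k]


# Hypothetical confidence scores for a single sentence.
example_scores = {
    "Amusement": 0.12,
    "Calmness": 0.48,
    "Interest": 0.35,
    "Confusion": 0.07,
}

for label, score in top_expressions(example_scores, k=2):
    print(f"{label}: {score:.2f}")
# prints:
# Calmness: 0.48
# Interest: 0.35
```

Because the scores are confidences about tone and wording rather than readings of what someone actually feels, a ranking like this is best read as "most likely expressed", not "definitely experienced".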

Will EVI Support Multiple Languages?

At its general availability launch in April, EVI will support English, with plans to include additional languages based on user demand and feedback.

Are There Plans to Add More Voices to EVI?

Yes, EVI will launch with an initial set of voices, with ongoing development to expand the voice options available to users.

Why Is EVI Faster Than Other Language Model Services?

EVI's exclusive empathic language model (eLLM) enables it to generate initial responses more quickly than other services, with the capability to integrate responses from other advanced language models for more comprehensive replies.

Does EVI Support Text-to-Speech (TTS)?

Yes, Hume AI has developed its own expressive TTS model, allowing EVI to generate speech with nuanced prosody from text inputs, offering more expressive speech generation than other models.

Hello, world! I'm Esha Gupta, your go-to Technical Content Developer focusing on Java, Data Structures and Algorithms, and Front End Development. Alongside these specialities, I have a zest for immersing myself in v...