Overfitting in machine learning:Python code example

Overfitting is a situation that happens when a machine learning algorithm becomes too specific to the training data and doesn’t generalize well to new data.This article focus on Overfitting in machine learning with real-life example.It also covers methods For avoiding Overfitting.

If you’ve ever faced a data science interview, you must have asked yourself these questions: What is Overfitting in machine learning?

This is a significant topic from an interview perspective. Or, if you’ve been working with machine learning models, you’ve probably experienced situations where your model shows very high accuracy on training data but poor accuracy on test data.Moreover, it can be very frustrating if we can’t find a solution to this anomalous behavior of the predictive model we’re working on. Let’s fix that.

In this blog, you will learn about the following:

- Overfitting with relatable examples.

- How to avoid Overfitting.

For any algorithm to succeed in real-life situations, it must be able to find a solution in a different set of data and in previous models that were trained on similar data sets. However, a model must be more balanced on training data to be able to generalize its performance on new datasets. Therefore, it’s crucial to ensure that your models train themselves appropriately. Otherwise, your models may underperform or even fail in real-world tasks. Here are some ways you can check if your model is Overfitting.

Best-suited Machine Learning courses for you

Learn Machine Learning with these high-rated online courses

Introduction

We’ve all heard “overfitting” in machine learning, but what does it mean? Overfitting is when a machine learning model begins to learn the specifics of the training data instead of generalizing to new data. This can lead to inaccurate predictions and a loss of performance over time.

Luckily, you can do a few things to avoid Overfitting and ensure that your machine learning models perform well over time. In this article, we’ll discuss what Overfitting is, how to identify it, and some methods for avoiding it.

Also Read: How tech giants are using your data?

Also read:What is machine learning?

Also read :Machine learning courses

What Is Overfitting?

Overfitting is the biggest enemy of machine learning.

Overfitting is a situation that happens when a machine learning algorithm becomes too specific to the training data and doesn’t generalize well to new data.

This can cause many problems, like poor accuracy or even wholly inaccurate predictions. In a nutshell, Overfitting happens when your model starts to “learn” the noise in your data rather than the actual patterns. The noise here means irrelevant or meaningless data like outliers, missing values, and extra features. As a result, your model becomes very specific to that particular data set, and it will only be able to generalize well to new data.

This is a huge problem because if your model is overfitted, it will perform well on the data you used to train it, but it will fail miserably when applied to new data, i.e., test data.

Suppose we have data regarding the price of a house with respect to an area of a house. The points shown in the diagram are data points. As you can see, the line passes through different data points. The data points covered by this line are training data. This clearly shows that the model is overfitted.

Real-life example

Example-1

Suppose a student is preparing for an exam and studying all non-exam-related topics in a book. Then he overburdened himself by learning the things that have no relevance from an exam point of view(noise). And also he memorizes things. Then what will happen? If you ask exactly what he practiced, he will perform well in class, but if you ask some application-based questions in a test where he has to apply his knowledge, then he will need help to perform well. In short, it will perform well on training data rather than test data. In the same way, the machine learning model, if overfitted, will perform poorly on test data but on training data.

Example-2

Suppose we want to find the price of a house.We trained the model and model is giving 99% accuracy score on training data.And then we test the model performance on test data and we get the accuracy score=50%.Why this much difference?The reason is that the model is overfitted and thats why its performing good on training data and bad on testing data.

Also Read:

- What is the difference between AI vs ML vs DL vs DS

- Application of data science in various industries

How Does Overfitting Happen in Machine Learning?

You might be wondering how Overfitting can happen in machine learning. After all, you’re only giving the machine a set of training data, so it should be able to learn from that and not overfit, right?

Unfortunately, it can be more complex. The problem is that the machine can learn too much from the data you give it. And once it starts incorporating random noise into its learning algorithm, it can distort the model. This is what leads to Overfitting.

So how can you avoid this happening? By using a technique called cross-validation. This helps limit the amount of data the machine learning algorithm has access to, reducing the chance of Overfitting.

Why Is Overfitting a Problem?

Say you’re a data scientist. You’ve got a big data set that you want to use to train your machine-learning algorithm. You partition the data set into a training set and a validation set. You feed the training set into your algorithm, and it produces some really impressive results.

But what happens when you test the algorithm on the validation set? It will not perform as well on test data as it did on the training set. Why is that? Because you’ve to overfit your algorithm to the training data set.The model do mistakes while predicting because of numerous reasons. These mistakes are bias and variance.

Bias is the error in calculating the difference between the model’s average prediction and the actual value we are trying to predict.

Variance is the opposite of bias. Variance is also the error that measures the randomness of the predicted values from the actual values.

For more details you can read: Bias and Variance with Real-Life Examples

What Are Some Methods For avoiding Overfitting?

1. Cross-validation

Cross-validation is a robust means of preventing Overfitting. A complete data set is divided into parts. Standard K-fold cross-validation requires the data to be split into k folds. Then iteratively train the algorithm on k-1 folds, using the remaining holdout folds as the test set. This method allows you to tune the hyperparameters of your neural network or machine learning model and test it on completely invisible data.

2. Feature selection

In feature selection each model has some parameters or features, depending on the number of layers, number of neurons, etc. The model may recognize features that are distinguishable from many redundant features or other features, leading to unnecessary complexity. It is well known that the more complex a model is, the more likely it is to overfit.

3. Train with more data

As the training data grows, essential features to extract become apparent. The model can check the relationship between input attributes and output variables. The only assumption of this method is that the data fed to the model is clean. Otherwise, the overfitting problem will be exacerbated.

4. Regularization

If Overfitting occurs in a too complex model, it makes sense to reduce features. Regularization techniques such as Lasso and L1 are useful when you need to know which features to remove from your model. Regularization applies a “penalty” to input parameters with more significant coefficients and subsequently limits the variance of the model.

5. Ensemble techniques

A machine learning technique that combines multiple basic models to create an optimal predictive model. Ensemble learning aggregates predictions to identify the most popular outcomes. Well-known ensemble techniques include bagging and boosting to prevent Overfitting, as the ensemble model is created from the aggregation of multiple models. The inside of the model should be clean. Otherwise, the overfitting problem will be exacerbated.

Also Read:

- Applications of AI and Machine Learning in Education

- How can machine learning help save lives in healthcare sector?

When Should You Worry About Overfitting?

You should worry about Overfitting when your model starts to do worse on test data.

What does this mean? It means that your model is “learning” the wrong things. It must become more specialized and generalize to new/test data well.

In other words, your model is starting to memorize the training data instead of learning the underlying patterns. This can happen when you have too many parameters or when you’re using a complex algorithm.

Overfitting: Important Definitions

1 Bias

Bias measures the difference between the model’s predictions and target values. If the model is oversimplified, the predicted values will stray far from the ground truth, leading to more bias.

2. Variance

Variance is a measure of the disagreement of different predictions across different datasets. When testing the model’s performance on different data sets, the more accurate the predictions, the lower will be variance. Higher variance is a sign of Overfitting, and the model loses its generalization ability.

3. Bias-variance trade-off

Simple linear models are expected to have high bias and low variance due to low model complexity and few trainable parameters. On the other hand, complex nonlinear models tend to observe the opposite behavior. The model strikes an optimal balance between bias and variance in an ideal scenario. Model generalization refers to how well the model is trained to extract useful data patterns and classify unseen data samples.

For more details you can read: Bias and Variance with Real-Life Examples

Also Read: Movie Recommendation System using Machine Learning

Overfitting Python code

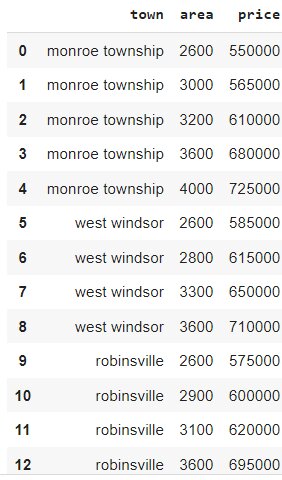

We have taken data set homeprices.csv which is freely available on kaggle.This dataset predict home prices on the basis of town and area.Let’s start implementing it.

1. Importing Libraries and reading dataset

import pandas as pdimport numpy as npimport matplotlib.pyplot as pltimport seaborn as snsdf = pd.read_csv("homeprices.csv")df

2. Handling categorical data

from sklearn.preprocessing import LabelEncoderle=LabelEncoder()df['town']=le.fit_transform(df['town'])df

3. Dependent and independent variables

# Putting feature variable to XX = df.drop('price',axis=1)# Putting response variable to yy = df['price']

4. Splitting dataset into Training and Testing Set

# Splitting the data into train and testfrom sklearn.model_selection import train_test_split# Splitting the data into train and testX_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.7, random_state=42)

5. Implementing a Random forest classifier

#Import Random Forest Modelfrom sklearn.ensemble import RandomForestClassifier#Create a Gaussian Classifierclf=RandomForestClassifier(n_estimators=100)#Train the model using the training sets y_pred=clf.predict(X_test)clf.fit(X_train,y_train)

6. Predicting test cases using random forest

#Predicting the test set resultsPred = clf.predict(X_test)print(Pred)

7. Checking the accuracy score

from sklearn.metrics import classification_reportrand_score=clf.score(X_train, y_train)'''rand_score=classifier.accuracy_score(y_test,Pred)'''classification_report_rf=classification_report(y_test,Pred)print("Accuracy score:",rand_score)

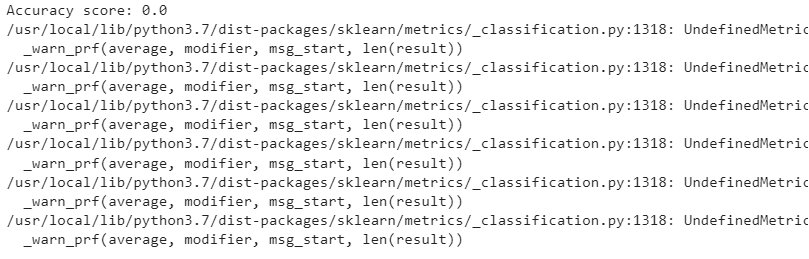

Output:

In case of training data we get Accuracy=1 which means accuracy is 100%.Now lets see check the accuracy score for test data.

from sklearn.metrics import classification_reportrand_score=clf.score(X_test, y_test)'''rand_score=classifier.accuracy_score(y_test,Pred)'''classification_report_rf=classification_report(y_test,Pred)print("Accuracy score:",rand_score)

Output:

When we checked the testing accuracy we get Accuracyscore=0 which means accuracy is 0%.Now you can see the difference between the accuracy score in case of training and testing.This clearly shows that the model is overfitted.

Conclusion

Overfitting can ruin your machine-learning model. It can lead to inaccurate predictions and decreased performance. You can prevent Overfitting by using cross-validation, data pre-processing, and regularization techniques. If you’re looking to create a machine learning model, it’s essential to understand Overfitting and how to prevent it. By using cross-validation, data pre-processing, and regularization techniques, you can avoid Overfitting.

This is a collection of insightful articles from domain experts in the fields of Cloud Computing, DevOps, AWS, Data Science, Machine Learning, AI, and Natural Language Processing. The range of topics caters to upski... Read Full Bio