Regression Analysis in Machine Learning

Introduction

In this article, we discuss regression analysis, an important concept used in building machine learning models.


Regression Analysis:

Regression analysis is a statistical method for modelling the relationship between a dependent (target) variable and one or more independent variables.

It helps us understand which factors affect the target variable, which is useful when building machine learning models.

Regression models are used to predict continuous values.

Examples:

- Predicting rainfall based on humidity, temperature, and wind direction and speed.

- Predicting house prices in a certain area based on size, locality, distance from schools, hospitals, metro stations, and markets, pollution level, and number of parks.

Dependent and Independent Variable:

The variable which we want to predict is known as the dependent variable.

The variables used to predict the value of the dependent variable are known as independent variables.

In simple terms, independent variables are the inputs, and the dependent variable is the output predicted from those inputs.

Example: In the house price prediction example above:

Dependent Variable: Price of the house

Independent Variables: size, locality, distance from schools, hospitals, metro stations, and markets, pollution level, number of parks.

Types of Regression

Linear Regression:

In linear regression, we assume a linear relationship between the dependent and independent variables.

Example: In house price prediction, as the size of the house increases, the price of the house also increases.

Here, the size of the house is an independent variable and the price of a house is a dependent variable.

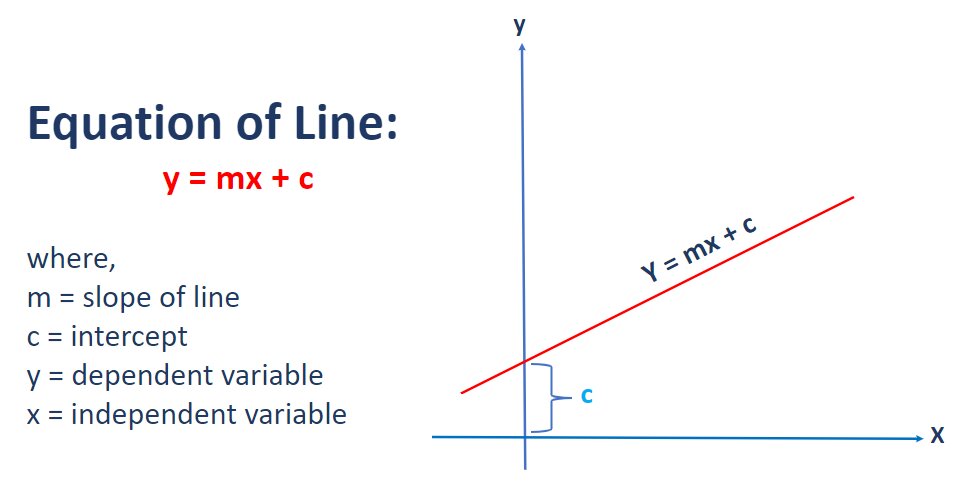

Mathematical Representation of the Line:

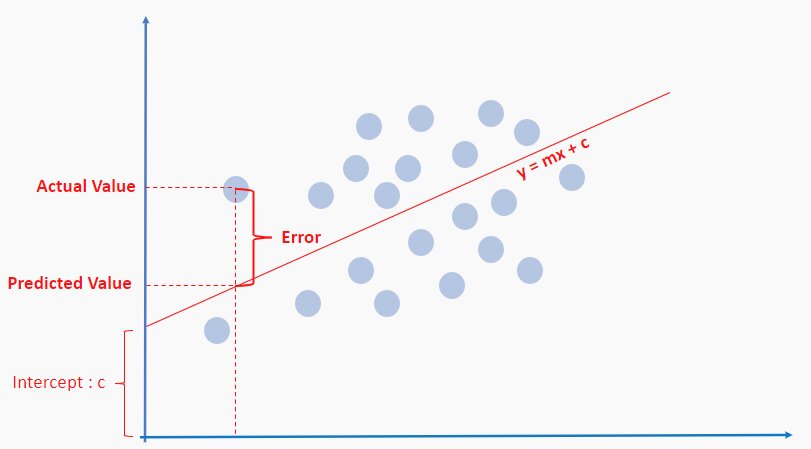
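Written out, the line presumably shown in the figure takes the usual slope-intercept form, which is consistent with the m and c used in the next paragraph:

```latex
y = mx + c
```

Here y is the dependent variable, x is the independent variable, m is the slope of the line, and c is its intercept (the value of y when x = 0).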

In a linear regression model, we try to find the best-fit line (i.e., the best values of m and c), which is the line that minimizes the error.

Note: The error is the difference between the actual value and the predicted value.

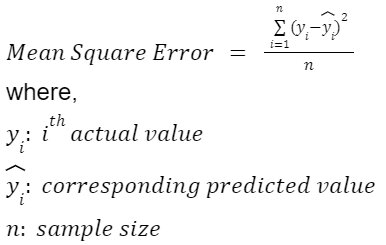

To find the best-fit line, we use the mean square error.

Mean Square Error:

Mean square error measures the amount of error in a statistical model.

It is the average of the squared differences between the actual (observed) values and the predicted values.

Note: The lower the mean square error, the better the model fits the data.
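The definition above translates directly into a few lines of Python. This is a minimal sketch; the function name `mean_squared_error` and the sample values are illustrative only and do not come from the article.

```python
def mean_squared_error(actual, predicted):
    """Average of the squared differences between actual and predicted values."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    if not actual:
        raise ValueError("inputs must not be empty")
    # Error for each point = actual value - predicted value, then squared
    squared_errors = [(y - y_hat) ** 2 for y, y_hat in zip(actual, predicted)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical observed and predicted values, for illustration only
actual = [3.0, 5.0, 7.0]
predicted = [2.5, 5.0, 8.0]
print(mean_squared_error(actual, predicted))  # 0.4166666666666667
```

Squaring makes every error positive and penalizes large errors more heavily than small ones: here the single error of 1.0 contributes four times as much as the error of 0.5.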

Mathematical Formula:

To know more about Linear regression, read the article Linear Regression in Machine Learning.

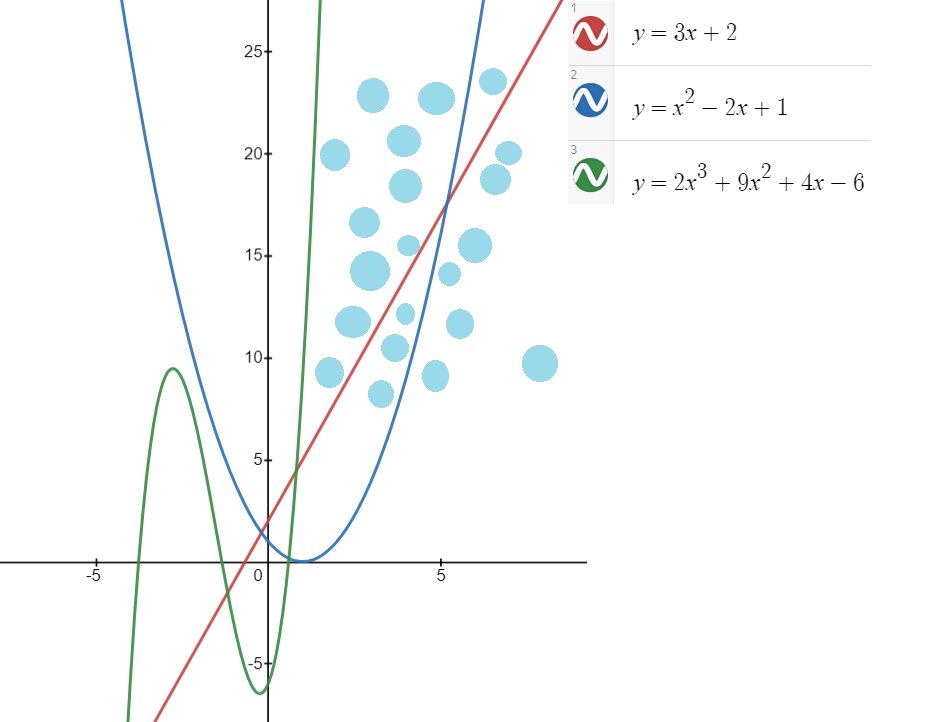
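To tie the linear regression section together, here is a minimal sketch that finds the m and c with the lowest mean square error for a single independent variable, using the standard closed-form least-squares solution. The helper name `fit_line` and the house-size data are hypothetical.

```python
def fit_line(xs, ys):
    """Return (m, c) for the line y = m*x + c with the lowest mean square error.

    Closed-form ordinary least squares for one independent variable:
        m = sum((x - x_mean) * (y - y_mean)) / sum((x - x_mean) ** 2)
        c = y_mean - m * x_mean
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical, so the slope is undefined")
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    m = sxy / sxx
    c = y_mean - m * x_mean
    return m, c


# Hypothetical data: house size (x) and price (y), in arbitrary units
sizes = [5, 8, 10, 12, 15]
prices = [30, 45, 52, 63, 78]

m, c = fit_line(sizes, prices)
print(f"best-fit line: y = {m:.3f}x + {c:.3f}")
print("predicted price for size 11:", round(m * 11 + c, 2))
```

With more than one independent variable the same idea applies, but m becomes a vector of coefficients and the solution is usually computed with a linear-algebra library rather than by hand.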

Polynomial Regression:

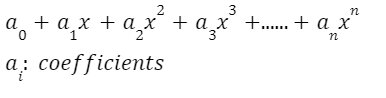

Linear regression works well only when the relationship in the data is linear. For non-linear data (where the data points follow a curve), we use polynomial regression.

Polynomial:

A polynomial is a mathematical expression consisting of a sum of terms, each of which is a coefficient multiplied by powers of one or more variables.

A polynomial in one variable with constant coefficients is given by:

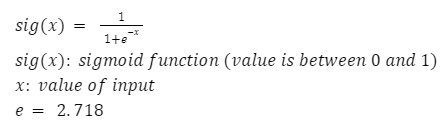
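Written out in the standard form (assumed here to match the figure), a polynomial of degree n in a variable x, with constant coefficients a_0, ..., a_n and a_n not equal to zero, is:

```latex
P(x) = a_n x^n + a_{n-1} x^{n-1} + \cdots + a_2 x^2 + a_1 x + a_0
```

In polynomial regression, x plays the role of the independent variable and the coefficients are learned from the data.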

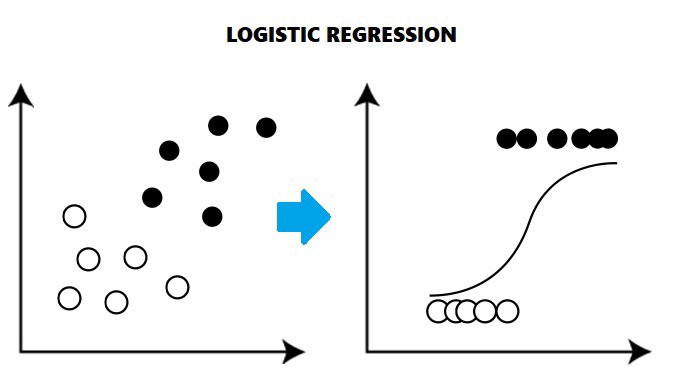
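Because a model of the form y = b0 + b1·x + b2·x² + ... + bn·xⁿ is still linear in its coefficients, polynomial regression can be fitted with the same least-squares idea as linear regression, by treating x, x², ..., xⁿ as separate input columns. The sketch below is a minimal, dependency-free illustration of that idea; `fit_polynomial`, `predict`, and the sample data are hypothetical.

```python
def fit_polynomial(xs, ys, degree):
    """Least-squares fit of y = b0 + b1*x + ... + b_degree * x**degree.

    Builds the normal equations (X^T X) b = X^T y, where each row of X is
    [1, x, x**2, ..., x**degree], and solves them by Gaussian elimination.
    """
    k = degree + 1
    # a = X^T X and r = X^T y, written out entry by entry
    a = [[sum(x ** (i + j) for x in xs) for j in range(k)] for i in range(k)]
    r = [sum((x ** i) * y for x, y in zip(xs, ys)) for i in range(k)]

    # Forward elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda row: abs(a[row][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("singular system: use a lower degree or more distinct x values")
        a[col], a[pivot] = a[pivot], a[col]
        r[col], r[pivot] = r[pivot], r[col]
        for row in range(col + 1, k):
            factor = a[row][col] / a[col][col]
            for j in range(col, k):
                a[row][j] -= factor * a[col][j]
            r[row] -= factor * r[col]

    # Back substitution
    coeffs = [0.0] * k
    for i in range(k - 1, -1, -1):
        s = sum(a[i][j] * coeffs[j] for j in range(i + 1, k))
        coeffs[i] = (r[i] - s) / a[i][i]
    return coeffs  # [b0, b1, ..., b_degree]


def predict(coeffs, x):
    """Evaluate b0 + b1*x + ... + b_degree * x**degree."""
    return sum(b * x ** i for i, b in enumerate(coeffs))


# Hypothetical curved data generated from y = 1 + 2x + 3x^2
xs = [-2, -1, 0, 1, 2, 3]
ys = [1 + 2 * x + 3 * x ** 2 for x in xs]

coeffs = fit_polynomial(xs, ys, degree=2)
print([round(b, 6) for b in coeffs])  # approximately [1.0, 2.0, 3.0]
```

Solving the normal equations directly is fine for low degrees but becomes numerically unstable as the degree grows; in practice a library routine such as NumPy's `numpy.polyfit` is usually the safer choice.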

Logistic Regression:

Logistic regression is used when we need to compute the probability of one of two mutually exclusive outcomes, such as:

True/False, Pass/Fail, 0/1, Yes/No.

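We use logistic regression when the dependent variable is discrete rather than continuous.

In its basic (binary) form, the dependent variable can take only one of two values.

Logistic regression passes a linear combination of the independent variables through the sigmoid function, σ(z) = 1 / (1 + e^(-z)), which maps any real number to a probability between 0 and 1.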
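As a minimal sketch of the idea, not a production implementation, the code below fits a one-variable logistic regression, P(y = 1 | x) = sigmoid(w·x + b), by plain batch gradient descent on the log loss. The learning rate, number of epochs, helper names, and the hours-studied data are all hypothetical choices for illustration.

```python
import math


def sigmoid(z):
    """Map any real number to a probability in (0, 1), avoiding overflow in exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_logistic(xs, ys, lr=0.1, epochs=5000):
    """Fit P(y=1 | x) = sigmoid(w*x + b) by batch gradient descent on the log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            # For the log loss, each point's gradient contribution is (prediction - label)
            error = sigmoid(w * x + b) - y
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical data: hours studied vs. outcome (1 = Pass, 0 = Fail)
hours = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 5.0]
passed = [0, 0, 0, 1, 0, 1, 1, 1]

w, b = fit_logistic(hours, passed)
for h in (1.0, 4.5):
    p = sigmoid(w * h + b)
    label = "Pass" if p >= 0.5 else "Fail"
    print(f"hours={h}: P(pass)={p:.2f} -> {label}")
```

Predicting "Pass" whenever the probability is at least 0.5 is a common default threshold, not a requirement. For real work, a tested implementation such as scikit-learn's `LogisticRegression` class is the usual choice.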

To know more about Logistic regression, read the article Linear Regression vs Logistic Regression.

Ridge Regression (L2 Regression):

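Ridge regression is an extension of linear regression that adds a penalty on the size of the coefficients (a shrinkage parameter λ times the sum of their squares, hence "L2") to the loss being minimized.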

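The linear regression equation also has an error term: y = mx + c + e, where e is the difference between the observed value and the value predicted by the line.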

Ridge regression is used when the data suffers from multicollinearity (the independent variables are highly correlated).

Ridge addresses multicollinearity through a shrinkage parameter, λ, which shrinks the values of the coefficients toward zero but never exactly to zero.
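The sketch below illustrates this shrinkage for two nearly identical (highly correlated) independent variables. It minimizes the sum of squared errors plus λ(b1² + b2²), leaves the intercept unpenalized, and solves the resulting 2x2 system directly; with λ = 0 it reduces to ordinary least squares. The helper `ridge_two_features` and the data are hypothetical.

```python
def ridge_two_features(x1, x2, y, lam):
    """Ridge regression with two independent variables.

    Minimizes sum((y - b0 - b1*x1 - b2*x2) ** 2) + lam * (b1 ** 2 + b2 ** 2).
    Centering the data removes the (unpenalized) intercept b0 from the problem,
    leaving the 2x2 linear system (X^T X + lam * I) [b1, b2] = X^T y.
    """
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    c1 = [v - m1 for v in x1]
    c2 = [v - m2 for v in x2]
    cy = [v - my for v in y]
    # Entries of X^T X + lam * I and of X^T y for the centered data
    a11 = sum(u * u for u in c1) + lam
    a22 = sum(v * v for v in c2) + lam
    a12 = sum(u * v for u, v in zip(c1, c2))
    r1 = sum(u * t for u, t in zip(c1, cy))
    r2 = sum(v * t for v, t in zip(c2, cy))
    det = a11 * a22 - a12 * a12
    if det == 0:
        raise ValueError("singular system; use lam > 0 for perfectly collinear features")
    # Cramer's rule for the 2x2 system
    b1 = (r1 * a22 - a12 * r2) / det
    b2 = (a11 * r2 - a12 * r1) / det
    b0 = my - b1 * m1 - b2 * m2
    return b0, b1, b2


# Hypothetical data: x2 is almost a copy of x1 (strong multicollinearity)
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [1.1, 1.9, 3.05, 3.95, 5.1, 5.9]
y = [3.1, 4.9, 7.2, 8.8, 11.1, 12.9]

for lam in (0.0, 1.0, 10.0, 100.0):
    b0, b1, b2 = ridge_two_features(x1, x2, y, lam)
    print(f"lambda={lam:>6}: b1={b1:8.3f}, b2={b2:8.3f}")
```

As λ grows, the coefficients shrink toward zero, but for any finite λ they stay nonzero. scikit-learn's `Ridge` class exposes the same penalty strength through its `alpha` parameter.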

Lasso Regression (L1 Regression):

Lasso stands for Least Absolute Shrinkage and Selection Operator.

Like ridge, lasso penalizes the size of the regression coefficients, but it penalizes their absolute values (L1) rather than their squares.

Lasso can reduce the variability and improve the accuracy of linear regression models.

If a group of independent variables is highly correlated, lasso tends to pick one of them and shrink the others to exactly zero, which is why it can also be used for feature selection.
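To illustrate the "selection" behaviour, the sketch below fits lasso by cyclic coordinate descent with soft-thresholding, one standard way to solve the L1-penalized problem. It minimizes (1/(2n))·Σ(y − ŷ)² + λ·Σ|b_j| with an unpenalized intercept. The helpers `soft_threshold` and `lasso`, and the data (two almost identical features), are hypothetical.

```python
def soft_threshold(value, threshold):
    """Move value toward zero by threshold; values in [-threshold, threshold] become exactly 0.0."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def lasso(columns, y, lam, max_iter=20000, tol=1e-8):
    """Lasso regression by cyclic coordinate descent.

    Minimizes (1 / (2n)) * sum((y - b0 - sum_j b_j * x_j) ** 2) + lam * sum_j |b_j|,
    where columns[j] holds the values of feature x_j. The intercept b0 is not penalized.
    """
    n = len(y)
    means = [sum(col) / n for col in columns]
    y_mean = sum(y) / n
    xs = [[v - m for v in col] for col, m in zip(columns, means)]  # centered features
    norms = [sum(v * v for v in col) / n for col in xs]
    if any(nrm == 0 for nrm in norms):
        raise ValueError("constant feature columns are not supported")
    b = [0.0] * len(columns)
    residual = [v - y_mean for v in y]  # residual of the model with all b_j = 0
    for _ in range(max_iter):
        max_change = 0.0
        for j, col in enumerate(xs):
            # Correlation of feature j with the residual, adding back feature j's own contribution
            rho = sum(x * (r + b[j] * x) for x, r in zip(col, residual)) / n
            new_bj = soft_threshold(rho, lam) / norms[j]
            delta = new_bj - b[j]
            if delta != 0.0:
                residual = [r - delta * x for r, x in zip(residual, col)]
                b[j] = new_bj
            max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    b0 = y_mean - sum(bj * m for bj, m in zip(b, means))
    return b0, b


# Hypothetical data: x2 is almost a copy of x1 (highly correlated)
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [1.1, 1.9, 3.05, 3.95, 5.1, 5.9]
y = [3.1, 4.9, 7.2, 8.8, 11.1, 12.9]

for lam in (0.1, 1.0, 2.0):
    b0, (b1, b2) = lasso([x1, x2], y, lam)
    print(f"lambda={lam}: b1={b1:.3f}, b2={b2:.3f}")
```

With this data, the smallest λ keeps both coefficients, while the larger values keep x1 and set the coefficient of x2 to exactly 0.0. Which of two correlated features survives is decided by small differences in the data, so it should not be read as a strong statement about which feature matters more.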

Conclusion:

In this article, we discussed regression analysis in machine learning and some of its types: linear, polynomial, logistic, ridge, and lasso regression.

We hope this article helps you in your data science and machine learning journey.


Vikram has a Postgraduate degree in Applied Mathematics, with a keen interest in Data Science and Machine Learning. He has experience of 2+ years in content creation in Mathematics, Statistics, Data Science, and Mac...