Ridge Regression vs Lasso Regression

Lasso and ridge regression are regularized forms of linear regression. They improve predictions on new data by curbing overfitting, and they help cope with problems in the data such as multicollinearity.

Table of Contents

- Difference Between Ridge Regression and Lasso Regression

- What is Regression?

- What is Ridge Regression?

- What is LASSO Regression?

- Key Difference Between Ridge Regression and LASSO Regression


What is the Difference Between Ridge Regression and Lasso Regression?

| Parameter | Ridge Regression | Lasso Regression |
|---|---|---|
| Regularization Type | L2 regularization: adds a penalty equal to the square of the magnitude of coefficients. | L1 regularization: adds a penalty equal to the absolute value of the magnitude of coefficients. |
| Primary Objective | To shrink the coefficients towards zero to reduce model complexity and the effects of multicollinearity. | To shrink coefficients, setting some exactly to zero, for both variable reduction and model simplification. |
| Feature Selection | Does not perform feature selection: all features remain in the model, but their impact is reduced. | Performs feature selection: can eliminate features entirely by setting their coefficients to zero. |
| Coefficient Shrinkage | Coefficients are shrunk towards zero but not exactly to zero. | Coefficients can be shrunk to exactly zero, effectively eliminating some variables. |
| Suitability | Suitable when all features are relevant and there is multicollinearity. | Suitable when the number of predictors is high and the most significant features need to be identified. |
| Bias and Variance | Introduces bias but reduces variance. | Introduces bias but reduces variance, potentially more than Ridge due to feature elimination. |
| Interpretability | Less interpretable when there are many features, as none are eliminated. | More interpretable due to feature elimination, focusing on significant predictors only. |
| Sensitivity to λ | Coefficients change gradually as the penalty parameter λ changes. | Thresholding effect: coefficients can drop to exactly zero as λ increases. |
| Model Complexity | Generally results in a more complex model than Lasso. | Generally results in a simpler model, especially when many features are irrelevant. |


Before getting into what ridge and lasso regression are, let's first discuss what regression analysis is.

What is Regression?

Regression analysis is a predictive modelling technique that assesses the relationship between a dependent variable (the goal or target variable) and one or more independent variables (the predictors). Regression analysis can be used for forecasting, time series modelling, determining the relationship between variables, and predicting continuous values.

For example, regression can be used to study the relationship between the floor area of a house and its electricity cost.

Linear regression falls into two categories:

- Simple Linear Regression: predicts the dependent variable Y using only one independent variable X.

  - Equation: Y = mX + c

- Multiple Linear Regression: predicts Y using two or more independent variables X1, X2, …, Xn.

  - Equation: Y = m1X1 + m2X2 + m3X3 + … + mnXn + c

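Where:

- Y = dependent variable

- m (or m1, m2, …, mn) = slope, i.e. the coefficient of each independent variable

- X (or X1, X2, …, Xn) = independent variable(s)

- c = intercept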
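As a minimal sketch of simple linear regression, the slope m and intercept c in Y = mX + c can be estimated by ordinary least squares using the textbook closed-form formulas. The function name `fit_simple_linear` and the toy data are illustrative assumptions, not part of the original article.

```python
def fit_simple_linear(xs, ys):
    """Ordinary least squares fit of Y = m*X + c for a single predictor."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Slope: sum of cross-deviations over sum of squared x-deviations.
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    m = sxy / sxx
    # Intercept: the fitted line passes through the point (x_mean, y_mean).
    c = y_mean - m * x_mean
    return m, c


# Hypothetical toy data generated exactly by Y = 2X + 1.
m, c = fit_simple_linear([1, 2, 3, 4], [3, 5, 7, 9])
print(m, c)  # 2.0 1.0
```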

Now, let's discuss what ridge and lasso regression are.

What is Ridge Regression?

Ridge regression addresses multicollinearity and overfitting by adding to the loss function a penalty equal to the sum of the squared coefficients. This method minimizes the residual sum of squares (RSS) plus the term $\lambda \sum_{j=1}^{p} \beta_j^2$, where $\beta_1, \dots, \beta_p$ are the regression coefficients and λ ≥ 0 is a tuning (complexity) parameter. The penalty term reduces model complexity and coefficient size, which helps reduce overfitting.

It is also known as L2 Regularization.

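Mathematical Function of Ridge Regression

$$\min_{\beta_0,\, \beta} \; \sum_{i=1}^{n} \Big( y_i - \beta_0 - \sum_{j=1}^{p} x_{ij} \beta_j \Big)^2 + \lambda \sum_{j=1}^{p} \beta_j^2$$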

- Lambda (λ) is a tuning parameter (also called the regularization parameter), typically selected using cross-validation. It controls how strongly the squared coefficients ($\beta_j^2$) are penalized, shrinking the coefficients toward zero.

- A higher λ value means a greater shrinkage effect on the coefficients. When λ=0, Ridge regression reverts to ordinary least squares regression.

- The shrinkage penalty is λ times the sum of the squared regression coefficients, $\lambda \sum_{j=1}^{p} \beta_j^2$, which is the last term of the ridge objective.
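To make the penalty concrete, the sketch below evaluates the ridge objective, RSS plus λ times the sum of squared coefficients, for a given set of coefficients. The function name `ridge_objective`, its argument layout (rows of X as lists, intercept passed separately and left unpenalized) and the toy numbers are assumptions made for illustration.

```python
def ridge_objective(X, y, beta0, beta, lam):
    """Return RSS + lam * sum(beta_j ** 2); the intercept beta0 is not penalized."""
    rss = 0.0
    for row, target in zip(X, y):
        prediction = beta0 + sum(b * x for b, x in zip(beta, row))
        rss += (target - prediction) ** 2
    penalty = lam * sum(b ** 2 for b in beta)
    return rss + penalty


X = [[1.0, 2.0], [2.0, 0.0], [3.0, 1.0]]
y = [5.0, 4.0, 7.0]
# With lam = 0 the objective is just the RSS, as in ordinary least squares.
print(ridge_objective(X, y, beta0=0.0, beta=[2.0, 1.0], lam=0.0))  # 1.0
# lam = 0.5 adds 0.5 * (2**2 + 1**2) = 2.5 to the RSS.
print(ridge_objective(X, y, beta0=0.0, beta=[2.0, 1.0], lam=0.5))  # 3.5
```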

Note that Ridge regression shrinks coefficients toward zero but does not set them exactly to zero, so it does not perform feature selection.
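For a single predictor, centering x and y removes the intercept from the penalty, and setting the derivative of the ridge objective to zero gives the closed form β1 = Sxy / (Sxx + λ), where Sxy and Sxx are the centered cross- and squared sums. The sketch below (function name and data are hypothetical) shows that λ = 0 reproduces the OLS slope and that larger λ shrinks the slope without ever making it exactly zero.

```python
def ridge_one_predictor(xs, ys, lam):
    """Ridge fit of y = beta0 + beta1 * x with penalty lam * beta1 ** 2.

    Centering x and y keeps the intercept out of the penalty and yields
    the closed form beta1 = Sxy / (Sxx + lam).
    """
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    beta1 = sxy / (sxx + lam)
    beta0 = y_mean - beta1 * x_mean
    return beta0, beta1


xs, ys = [1, 2, 3, 4], [3, 5, 7, 9]
for lam in [0.0, 5.0, 45.0, 1e6]:
    print(lam, ridge_one_predictor(xs, ys, lam)[1])
# lam = 0 gives the OLS slope 2.0; larger lam shrinks it (1.0, then 0.2,
# then about 1e-5), but the slope never becomes exactly zero.
```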

When to Use Ridge Regression?

Ridge regression is suitable when dealing with multicollinearity, or when all features are relevant and you cannot afford to exclude any predictors.

Let's take two real-life scenarios to get a better understanding.

Scenario: Multicollinearity in Data

Suppose you are developing a model to predict real estate prices from features such as location, property size, number of rooms, age of the building, and proximity to amenities.

In this model, features such as property size and number of rooms are likely to be correlated. Ridge regression suits this case because of its ability to handle multicollinearity: it shrinks the coefficients of the correlated variables without completely eliminating any of them. The model therefore keeps the collective influence of all features, albeit at a reduced magnitude.
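The effect can be sketched with two hypothetical, nearly collinear predictors (think of them as property size and number of rooms; the numbers are made up). The code centers the data so the intercept is not penalized and solves the 2×2 ridge system (XᵀX + λI)b = Xᵀy with Cramer's rule. In this toy data, plain least squares (λ = 0) splits the effect unevenly between the two features, while a small ridge penalty gives them similar, moderate coefficients.

```python
def ridge_two_predictors(x1, x2, y, lam):
    """Ridge fit of y = b0 + b1*x1 + b2*x2 with penalty lam * (b1**2 + b2**2).

    Data are centered so the intercept is not penalized; the 2x2 system
    (X^T X + lam*I) b = X^T y is then solved with Cramer's rule.
    """
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    c1 = [v - m1 for v in x1]
    c2 = [v - m2 for v in x2]
    cy = [v - my for v in y]
    s11 = sum(a * a for a in c1) + lam
    s22 = sum(b * b for b in c2) + lam
    s12 = sum(a * b for a, b in zip(c1, c2))
    s1y = sum(a * t for a, t in zip(c1, cy))
    s2y = sum(b * t for b, t in zip(c2, cy))
    det = s11 * s22 - s12 * s12
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    b0 = my - b1 * m1 - b2 * m2
    return b0, b1, b2


# Hypothetical, strongly correlated features and a made-up target.
size = [1, 2, 3, 4, 5]
rooms = [1.1, 1.9, 3.0, 4.1, 4.9]
price = [6.3, 7.8, 10.0, 11.8, 14.1]

_, ols1, ols2 = ridge_two_predictors(size, rooms, price, 0.0)
_, rid1, rid2 = ridge_two_predictors(size, rooms, price, 1.0)
print(ols1, ols2)  # about 1.63 and 0.33
print(rid1, rid2)  # about 0.96 and 0.92
```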

Scenario: Need for a Comprehensive Model

Let's say you work on a medical research project where excluding any predictor could mean missing important information. The dataset includes a wide range of variables, all of which are potentially relevant.

For this scenario, ridge regression is advantageous as it includes all predictors while mitigating the risk of overfitting through coefficient shrinkage.

What is LASSO Regression?

Lasso (Least Absolute Shrinkage and Selection Operator) regression, like Ridge, adds a penalty to the loss function. However, Lasso's penalty is the sum of the absolute values of the coefficients: $\lambda \sum_{j=1}^{p} |\beta_j|$. This helps reduce overfitting and can set some coefficients exactly to zero, thus performing automatic feature selection. This property makes Lasso particularly useful when there are many features, as it can identify and discard irrelevant ones.

It is also known as L1 Regularization.
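As a minimal sketch of how the L1 penalty can zero out a coefficient, the code below minimizes RSS + λ∑|βj| by coordinate descent with soft-thresholding, one standard way to fit Lasso (not necessarily the method any particular library uses). The function names, the centering of the data so the intercept is not penalized, and the toy data, in which the second feature barely affects y, are illustrative assumptions. Note that scikit-learn's `Lasso` scales the squared error by 1/(2n), so its `alpha` is not numerically the same as λ here.

```python
def soft_threshold(z, t):
    """Shrink z toward zero by t; values with |z| <= t become exactly 0."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_coordinate_descent(X, y, lam, n_iters=200):
    """Minimize RSS + lam * sum(|b_j|) by cyclic coordinate descent.

    X is a list of rows. Columns and y are centered so the intercept b0
    is not penalized. Assumes no column is constant.
    """
    n, p = len(X), len(X[0])
    col_means = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    Xc = [[row[j] - col_means[j] for j in range(p)] for row in X]
    yc = [v - y_mean for v in y]
    col_sq = [sum(row[j] ** 2 for row in Xc) for j in range(p)]
    beta = [0.0] * p
    residual = yc[:]  # equals yc - Xc @ beta while beta is all zeros
    for _ in range(n_iters):
        for j in range(p):
            # Correlation of column j with the residual that ignores feature j.
            rho = sum(Xc[i][j] * (residual[i] + Xc[i][j] * beta[j]) for i in range(n))
            new_bj = soft_threshold(rho, lam / 2.0) / col_sq[j]
            # Keep the residual in sync with the change in beta[j].
            delta = new_bj - beta[j]
            for i in range(n):
                residual[i] -= Xc[i][j] * delta
            beta[j] = new_bj
    b0 = y_mean - sum(b * m for b, m in zip(beta, col_means))
    return b0, beta


# Hypothetical data: y depends strongly on feature 1 and weakly on feature 2.
X = [[1, 3], [2, 1], [3, 2], [4, 1], [5, 3]]
y = [1.1, 2.9, 5.0, 6.9, 9.1]
_, beta_ols = lasso_coordinate_descent(X, y, lam=0.0)
_, beta_lasso = lasso_coordinate_descent(X, y, lam=2.0)
print(beta_ols)    # about [2.0, 0.1]
print(beta_lasso)  # about [1.9, 0.0]: the weak feature is set exactly to zero
```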

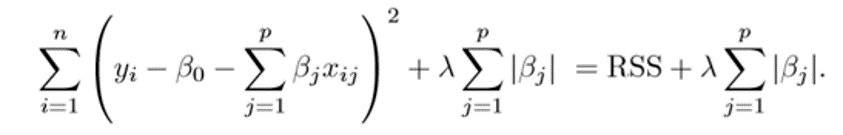

Mathematical Function of LASSO Regression

The equation above is the objective function for Lasso regression, where lambda (λ) is a tuning parameter that, as with Ridge, is selected using cross-validation.

Unlike Ridge regression, which penalizes the squared coefficients β², Lasso penalizes their absolute values |β|.

The shrinkage penalty is lambda times the sum of the absolute values of the regression coefficients, $\lambda \sum_{j=1}^{p} |\beta_j|$, which is the last term in the equation above.
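Choosing λ by cross-validation can be sketched as follows. To keep the example short, it assumes a single predictor, for which the Lasso solution has the closed form β1 = soft(Sxy, λ/2) / Sxx on centered data. It also assumes a fixed grid of candidate λ values and deterministic k-fold splits (point i goes to fold i % k). All names and data are hypothetical. The same loop works for Ridge if the fit function is swapped.

```python
def lasso_one_predictor(xs, ys, lam):
    """Closed-form lasso for y = b0 + b1*x with penalty lam * |b1| (centered data)."""
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    # Soft-threshold Sxy by lam / 2, then rescale by Sxx.
    shrunk = max(abs(sxy) - lam / 2.0, 0.0)
    b1 = (shrunk if sxy >= 0 else -shrunk) / sxx
    return y_mean - b1 * x_mean, b1


def choose_lambda_by_cv(xs, ys, lambdas, k=5):
    """Return the lambda with the lowest k-fold mean squared error, plus all errors."""
    n = len(xs)
    cv_errors = []
    for lam in lambdas:
        sq_err = 0.0
        for fold in range(k):
            train = [i for i in range(n) if i % k != fold]
            test = [i for i in range(n) if i % k == fold]
            b0, b1 = lasso_one_predictor([xs[i] for i in train], [ys[i] for i in train], lam)
            sq_err += sum((ys[i] - (b0 + b1 * xs[i])) ** 2 for i in test)
        # Every point is held out exactly once, so divide by n for the MSE.
        cv_errors.append(sq_err / n)
    best = min(range(len(lambdas)), key=lambda idx: cv_errors[idx])
    return lambdas[best], cv_errors


# Hypothetical data, roughly y = x + 1 with small noise.
xs = list(range(10))
ys = [1.2, 1.9, 3.2, 3.8, 5.1, 6.2, 6.8, 8.1, 9.0, 9.9]
grid = [0.0, 1.0, 10.0, 1000.0]
best_lam, errors = choose_lambda_by_cv(xs, ys, grid)
print(best_lam, errors)
```

A very large λ (1000 here) forces the slope to zero and predicts only the training mean, so its cross-validation error is much higher than that of the smaller values.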

LASSO regression is well suited for:

- Predictive modelling with high-dimensional data (many predictors)

- Balancing prediction accuracy against interpretability, since it keeps only a subset of the predictors

Key Difference Between Ridge Regression and LASSO Regression

- Feature Selection: Lasso can set coefficients exactly to zero, effectively performing feature selection, while Ridge only shrinks coefficients toward zero without eliminating them.

- Bias-Variance Tradeoff: Both methods introduce bias into estimates but reduce variance, potentially leading to better overall model predictions.

- Regularization Technique: Ridge uses L2 regularization (squares of coefficients), and Lasso uses L1 regularization (absolute values of coefficients).

- Predictive Performance: The choice between Ridge and Lasso depends on the data and the problem. Ridge tends to perform better when many predictors have a meaningful effect, while Lasso tends to be more effective when only a few predictors are actually significant.
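The feature-selection difference is easiest to see in a simplified case: one centered predictor scaled so that the sum of x² is 1. Then the RSS equals a constant plus (β − β_ols)², so Ridge gives β_ols / (1 + λ), while Lasso soft-thresholds β_ols at λ/2. This setting and the function names are assumptions made for illustration, but the two formulas follow directly from the objectives defined in this article.

```python
def ridge_shrink(beta_ols, lam):
    """Ridge solution for one predictor with sum(x**2) == 1: beta_ols / (1 + lam)."""
    return beta_ols / (1.0 + lam)


def lasso_shrink(beta_ols, lam):
    """Lasso solution for one predictor with sum(x**2) == 1: soft-threshold at lam / 2."""
    if beta_ols > lam / 2.0:
        return beta_ols - lam / 2.0
    if beta_ols < -lam / 2.0:
        return beta_ols + lam / 2.0
    return 0.0


for lam in [0.0, 1.0, 2.0, 4.0]:
    print(lam, ridge_shrink(1.5, lam), lasso_shrink(1.5, lam))
# Ridge: 1.5, 0.75, 0.5, 0.3 (smaller, but never zero)
# Lasso: 1.5, 1.0, 0.5, 0.0 (exactly zero once lam / 2 >= |beta_ols|)
```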

Conclusion

In this article, we compared Ridge regression and Lasso regression: how each penalizes the coefficients, when to use each, and how both can improve predictive accuracy by reducing overfitting. We hope you found it useful.

Keep Learning!!

Keep Sharing!!