The Ultimate Showdown: RNN vs LSTM vs GRU – Which is the Best?

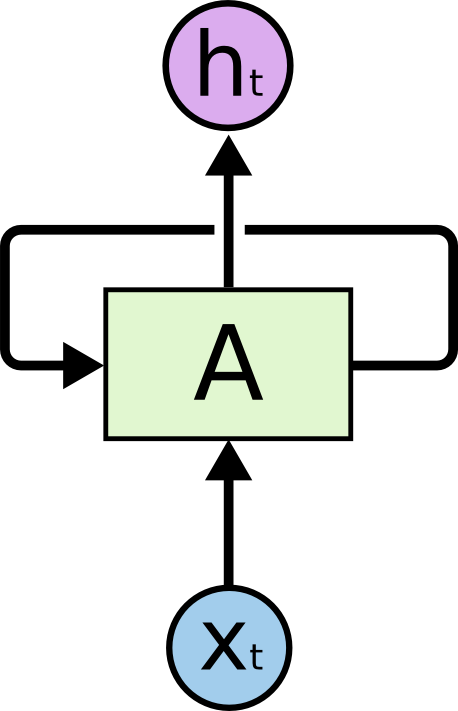

Recurrent neural networks (RNNs) are a type of neural network used to process sequential data, such as text, audio, or time series. They are designed to remember or “store” information from previous inputs in an internal state, which allows them to use context and dependencies between time steps. This makes them useful for tasks such as language translation, speech recognition, and time series forecasting.

There are several variants of RNNs, including long short-term memory (LSTM) networks and gated recurrent units (GRUs). Both were designed to address the “vanishing gradient” problem of plain RNNs. During training, gradients are backpropagated through every time step and multiplied by a similar factor at each step, so over long sequences they can shrink exponentially. The weights then receive almost no learning signal from early inputs, and the network has difficulty learning long-term dependencies.
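
To make the vanishing-gradient problem concrete, here is a minimal sketch assuming a hypothetical one-unit RNN, h_t = tanh(w * h_{t-1} + u * x_t), with illustrative weights w = 0.5 and u = 1.0. The function name `rnn_gradient_through_time` is made up for this example. It multiplies together the per-step derivatives that backpropagation through time chains, giving the gradient of the final hidden state with respect to the initial one.

```python
import math

def rnn_gradient_through_time(w: float, u: float, inputs: list[float]) -> float:
    """Return d h_T / d h_0 for the one-unit RNN h_t = tanh(w * h_{t-1} + u * x_t), starting from h_0 = 0."""
    h = 0.0
    grad = 1.0  # d h_0 / d h_0
    for x in inputs:
        h = math.tanh(w * h + u * x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2), folded into the running product
        grad *= w * (1.0 - h * h)
    return grad

# Illustrative weights; the same constant input is fed at every step.
for steps in (5, 20, 50):
    g = rnn_gradient_through_time(w=0.5, u=1.0, inputs=[1.0] * steps)
    print(f"{steps:2d} steps: d h_T / d h_0 = {g:.2e}")
```

Because each factor w * (1 - h_t^2) has magnitude at most |w| = 0.5 here, the gradient after 50 steps is below 0.5^50 (roughly 8.9e-16), so the earliest inputs contribute almost nothing to the weight update.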

LSTM networks are a type of RNN that use a special memory cell to store and output information. These memory cells are designed to remember information for long periods of time, and they do this by using a set of “gates” that control the flow of information into and out of the cell. The gates in an LSTM network are controlled by sigmoid activation functions, which output values between 0 and 1. The gates allow the network to selectively store or forget information, depending on the current input and the previous hidden state.

GRUs, on the other hand, are a simplified version of LSTMs. They use two gates, an update gate and a reset gate, instead of the three gates used in LSTMs, and they merge the memory cell and hidden state into a single hidden state. With fewer parameters, GRUs are usually faster to train and run than LSTMs, but they may not be as effective at storing and accessing long-term dependencies.

Both LSTMs and GRUs are used in a wide range of tasks, including language translation, speech recognition, and time series forecasting. In general, LSTMs tend to be more effective at tasks that require the network to store and access long-term dependencies. GRUs, on the other hand, can be a better choice when the network needs to learn quickly, since their smaller parameter count makes training faster.

There is no one “best” type of RNN for all tasks, and the choice between LSTMs and GRUs (or even other types of RNNs) will depend on the specific requirements of the task at hand. In general, it is a good idea to try both LSTMs and GRUs (and possibly other types of RNNs) and see which one performs better on your specific task.

Difference Between RNN, LSTM, and GRU

Here is a comparison of the key differences between RNNs, LSTMs, and GRUs:

| Aspect | RNNs | LSTMs | GRUs |
|---|---|---|---|
| Structure | Simple | More complex | Simpler than LSTM |
| Training | Prone to vanishing gradients on long sequences | More stable on long sequences, but slower (more parameters) | Also mitigates vanishing gradients; faster than LSTM |
| Performance | Good for simple tasks | Good for complex tasks | Intermediate between RNNs and LSTMs |
| Hidden state | Single hidden state | Hidden state plus cell state (memory cell) | Single hidden state |
| Gates | None | Input, output, forget | Update, reset |
| Ability to retain long-term dependencies | Limited | Strong | Intermediate between RNNs and LSTMs |
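
The “Structure” row can be made concrete by counting weights in one layer. This is a sketch under a stated assumption: each block (the RNN's single update, each GRU gate or candidate, each LSTM gate or candidate) has one input matrix, one recurrent matrix, and one bias vector. Some libraries, such as PyTorch's `nn.RNN`, `nn.LSTM`, and `nn.GRU`, keep two bias vectors per block, so their totals are slightly higher. The sizes 100 and 128 are arbitrary examples, and `recurrent_layer_params` is a hypothetical helper.

```python
def recurrent_layer_params(input_size: int, hidden_size: int, num_blocks: int) -> int:
    """Count weights in one recurrent layer.

    Each block has an input matrix (hidden x input), a recurrent matrix
    (hidden x hidden) and one bias vector (hidden). Assumes a single bias per block.
    """
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return num_blocks * per_block

# Blocks: vanilla RNN = 1 (tanh update), GRU = 3 (update, reset, candidate),
# LSTM = 4 (input, forget, output gates plus the candidate cell value).
for name, blocks in (("RNN", 1), ("GRU", 3), ("LSTM", 4)):
    print(name, recurrent_layer_params(input_size=100, hidden_size=128, num_blocks=blocks))
# RNN 29312
# GRU 87936
# LSTM 117248
```

For the same sizes, a GRU layer has three quarters of the weights of an LSTM layer, which is where its speed advantage comes from.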

How does RNN work?

Here is a brief summary of how RNNs work:

- RNNs are neural networks that can process sequential data, such as text, audio, or time series data.

- They contain a “hidden state” that is passed from one element in the sequence to the next, allowing the network to remember information from previous elements.

- At each time step, the RNN takes in an input and the current hidden state, and produces an output and a new hidden state.

- The new hidden state is carried forward to the next time step together with the next input, and this process continues until the entire sequence has been processed.

- RNNs are well-suited for tasks involving variable-length sequences and maintaining state across elements.
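
The per-step update described in the list above can be sketched in plain Python. This is a minimal illustration, not a library implementation: it assumes the common tanh update h_t = tanh(W_x x_t + W_h h_{t-1} + b), and the helper names `matvec` and `rnn_forward`, the sizes, and the random weights are all hypothetical.

```python
import math
import random

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def rnn_forward(xs, W_x, W_h, b):
    """Run a vanilla RNN over the sequence xs and return the hidden state after every step.

    Update rule: h_t = tanh(W_x @ x_t + W_h @ h_{t-1} + b), with h_0 = 0.
    """
    h = [0.0] * len(b)
    states = []
    for x in xs:
        pre = [a + r + c for a, r, c in zip(matvec(W_x, x), matvec(W_h, h), b)]
        h = [math.tanh(p) for p in pre]  # the same weights are reused at every step
        states.append(h)
    return states

# Hypothetical sizes: 2-dimensional inputs, 4 hidden units, random weights.
rng = random.Random(0)
input_size, hidden_size = 2, 4
W_x = [[rng.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
W_h = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
b = [0.0] * hidden_size

# Sequences of different lengths are handled by the same loop.
short_seq = rnn_forward([[1.0, 0.0]], W_x, W_h, b)
long_seq = rnn_forward([[1.0, 0.0], [0.0, 1.0], [0.5, -0.5], [1.0, 1.0]], W_x, W_h, b)
print(len(short_seq), len(long_seq))  # one hidden state per time step: 1 4
```

Because the loop simply runs once per element, the same weights handle a sequence of any length, which is what makes RNNs a natural fit for variable-length inputs.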

How does LSTM work?

Here is a brief summary of how Long Short-Term Memory (LSTM) networks work:

- LSTMs are a type of Recurrent Neural Network (RNN) that can better retain long-term dependencies in the data.

- They have a more complex structure than regular RNNs: alongside the hidden state they keep a separate cell state (the memory cell), and input, output, and forget gates selectively retain or discard information in it.

- The input gate determines which information from the current input to write into the cell state.

- The forget gate determines which information from the previous cell state to keep or discard.

- The output gate determines which parts of the cell state are exposed as the new hidden state, which is the network's output at that time step.

- This combination of gates allows LSTMs to retain important information from long sequences and discard irrelevant or outdated information.

- LSTMs are often used for tasks involving long-term dependencies, such as language translation and language modeling.
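
The gate mechanics listed above can be sketched as a single LSTM time step in plain Python. This is a minimal sketch assuming the standard formulation without peephole connections: f, i, o = sigmoid(W x + U h + b) for each gate, a tanh candidate g, then c_t = f * c_{t-1} + i * g and h_t = o * tanh(c_t). The helpers `affine`, `lstm_step`, and `random_gate`, the parameter layout, and the sizes are hypothetical.

```python
import math
import random

def sigmoid(z):
    """Numerically stable logistic function, output in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b for plain Python lists."""
    return [
        sum(w * xi for w, xi in zip(W_row, x)) + sum(u * hj for u, hj in zip(U_row, h)) + b_k
        for W_row, U_row, b_k in zip(W, U, b)
    ]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step; params maps each gate name to a (W, U, b) triple."""
    f = [sigmoid(z) for z in affine(*params["forget"], x, h_prev)]  # keep/discard old cell content
    i = [sigmoid(z) for z in affine(*params["input"], x, h_prev)]   # how much new content to write
    o = [sigmoid(z) for z in affine(*params["output"], x, h_prev)]  # how much of the cell to expose
    g = [math.tanh(z) for z in affine(*params["candidate"], x, h_prev)]  # candidate cell values
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

def random_gate(input_size, hidden_size, rng, bias=0.0):
    """Hypothetical initializer: small uniform weights and a constant bias."""
    W = [[rng.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
    U = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
    return W, U, [bias] * hidden_size

rng = random.Random(0)
params = {name: random_gate(2, 3, rng) for name in ("forget", "input", "output", "candidate")}
h, c = [0.0] * 3, [0.0] * 3
for x in ([1.0, 0.0], [0.0, 1.0], [0.5, -0.5]):
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

If the forget-gate bias is large and positive and the input-gate bias large and negative, then f is close to 1 and i close to 0, so c_t is almost exactly c_{t-1}: the cell carries its content forward unchanged, which is how an LSTM can preserve information across long spans.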

How does GRU work?

Here is a brief summary of how Gated Recurrent Units (GRUs) work:

- GRUs are a type of Recurrent Neural Network (RNN) that use a simpler structure than LSTMs and have fewer parameters, so they are usually faster to train.

- They have two gates: an update gate and a reset gate.

- The update gate determines how much of the previous hidden state to keep and how much to replace with new information, while the reset gate determines how much of the previous hidden state to use when computing that new information from the current input.

- The new hidden state is a blend, weighted by the update gate, of the previous hidden state and a candidate state computed from the current input and the reset-gated previous state.

- This combination of gates allows GRUs to retain relevant information from long sequences and discard irrelevant or outdated information.

- GRUs are often used for tasks involving sequential data, such as language translation and language modeling.
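
The two-gate update described above can be sketched as a single GRU time step. This is a minimal sketch, assuming the formulation of Cho et al. (2014): the candidate is n = tanh(W x + U (r * h_prev) + b), and the new state is z * h_prev + (1 - z) * n, so an update gate near 1 keeps the old state. Some references swap the roles of z and 1 - z, and PyTorch's `nn.GRU` applies the reset gate after multiplying by the recurrent weights rather than before. The helpers `affine`, `gru_step`, and `random_gate` and the sizes are hypothetical.

```python
import math
import random

def sigmoid(z):
    """Numerically stable logistic function, output in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b for plain Python lists."""
    return [
        sum(w * xi for w, xi in zip(W_row, x)) + sum(u * hj for u, hj in zip(U_row, h)) + b_k
        for W_row, U_row, b_k in zip(W, U, b)
    ]

def gru_step(x, h_prev, params):
    """One GRU time step; params maps each gate name to a (W, U, b) triple.

    Assumed convention: an update gate close to 1 keeps the previous state.
    """
    z = [sigmoid(v) for v in affine(*params["update"], x, h_prev)]  # update gate
    r = [sigmoid(v) for v in affine(*params["reset"], x, h_prev)]   # reset gate
    reset_h = [rk * hk for rk, hk in zip(r, h_prev)]                # reset gate scales the old state
    n = [math.tanh(v) for v in affine(*params["candidate"], x, reset_h)]  # candidate state
    # New state: per-unit interpolation between the old state and the candidate
    return [zk * hk + (1.0 - zk) * nk for zk, hk, nk in zip(z, h_prev, n)]

def random_gate(input_size, hidden_size, rng, bias=0.0):
    """Hypothetical initializer: small uniform weights and a constant bias."""
    W = [[rng.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
    U = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
    return W, U, [bias] * hidden_size

rng = random.Random(0)
params = {name: random_gate(2, 3, rng) for name in ("update", "reset", "candidate")}
h = [0.0] * 3
for x in ([1.0, 0.0], [0.0, 1.0], [0.5, -0.5]):
    h = gru_step(x, h, params)
print(h)
```

Compared with the LSTM, there is no separate cell state: the single hidden state plays both roles, and one update gate does the job that the LSTM splits between its forget and input gates.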

Summarizing the Differences Between RNN, LSTM, and GRU

- RNNs are a type of neural network that are designed to process sequential data, such as text, audio, or time series data. They can “remember” or store information from previous inputs, which allows them to use context and dependencies between time steps.

- LSTMs are a type of RNN that use a special memory cell and gates to store and output information. The gates in an LSTM network are controlled by sigmoid activation functions, and they allow the network to selectively store or forget information. LSTMs are effective at storing and accessing long-term dependencies, but their extra parameters make them slower to train and run than vanilla RNNs and GRUs.

- GRUs are a simplified version of LSTMs that use two gates, an update gate and a reset gate, and keep a single hidden state instead of a separate memory cell. GRUs are usually faster to train and run than LSTMs, but they may not be as effective at storing and accessing long-term dependencies.

- There is no one “best” type of RNN for all tasks, and the choice between LSTMs, GRUs, and other types of RNNs will depend on the specific requirements of the task at hand. It is often a good idea to try multiple types of RNNs and see which one performs best on your specific task.

Conclusion

In conclusion, the key difference between RNNs, LSTMs, and GRUs is the way they handle memory and dependencies between time steps. All three are neural networks that process sequential data. RNNs remember information from previous inputs but may struggle with long-term dependencies. LSTMs store and access long-term dependencies using a memory cell and three gates. GRUs, a simplified version of LSTMs, use two gates (update and reset), are faster to train and run, but may not handle long-term dependencies as well. The best type of RNN depends on the task at hand, so it is often useful to try several and see which performs best.

Experienced AI and Machine Learning content creator with a passion for using data to solve real-world challenges. I specialize in Python, SQL, NLP, and Data Visualization. My goal is to make data science engaging an...