Top Machine Learning and Data Science Tools for Non-Programmers

Data science has emerged as an attractive career option for those interested in extracting, manipulating, and generating insights from large volumes of data. Demand for data scientists is high across industries, which has drawn many non-IT professionals and non-programmers to the field. If you want to become a data scientist without being an expert coder, data science tools are a good place to start.

You don’t need any programming or coding skills to work with these tools. They offer a structured way to define the entire data science workflow and implement it without writing code yourself.

Top Data Science Tools

Listed below are some of the key data science tools that non-programmers should be comfortable with before entering the field of data science.

RapidMiner

RapidMiner is a data science tool that offers an integrated environment for machine learning, deep learning, data preparation, predictive analytics, and data mining.

It allows you to clean up your data and then run it through a wide range of statistical algorithms. If you want to use machine learning rather than traditional statistical analysis, the Auto Model feature chooses from several classification algorithms and searches through various parameters until it finds the best fit. The goal of the tool is to produce hundreds of models and then identify the best one.
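For readers curious about what this kind of automated model search does under the hood, here is a minimal Python sketch of the general idea: try several candidate classifiers and parameter settings, score each on held-out data, and keep the best. It is not RapidMiner's actual implementation. The synthetic data, the two toy classifiers, and all function names (`make_data`, `knn_factory`, `centroid_fit`, `auto_select`) are assumptions made up for illustration.

```python
import random
from collections import Counter

def make_data(n=200, seed=0):
    """Synthetic two-class data: two noisy clusters in 2-D (illustrative only)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = 0.0 if label == 0 else 2.0
        rows.append(((rng.gauss(center, 1.0), rng.gauss(center, 1.0)), label))
    return rows

def dist2(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def knn_factory(k):
    """Return a fit function for a k-nearest-neighbours classifier."""
    def fit(train):
        def predict(point):
            nearest = sorted(train, key=lambda row: dist2(row[0], point))[:k]
            return Counter(label for _, label in nearest).most_common(1)[0][0]
        return predict
    return fit

def centroid_fit(train):
    """Nearest-centroid classifier: predict the class whose mean is closest."""
    sums = {}
    for features, label in train:
        total, count = sums.get(label, ([0.0] * len(features), 0))
        sums[label] = ([t + f for t, f in zip(total, features)], count + 1)
    centroids = {label: [t / c for t in total] for label, (total, c) in sums.items()}
    def predict(point):
        return min(centroids, key=lambda label: dist2(centroids[label], point))
    return predict

def accuracy(predict, rows):
    """Fraction of rows whose label is predicted correctly."""
    return sum(predict(x) == y for x, y in rows) / len(rows)

def auto_select(train, valid):
    """Fit every candidate on train, score on valid, return the best name and all scores."""
    candidates = {"nearest-centroid": centroid_fit}
    for k in (1, 3, 5, 9):  # a tiny parameter grid for k-NN
        candidates[f"knn(k={k})"] = knn_factory(k)
    scores = {name: accuracy(fit(train), valid) for name, fit in candidates.items()}
    return max(scores, key=scores.get), scores

data = make_data()
train, valid = data[:150], data[150:]
best_name, scores = auto_select(train, valid)
for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{name:18s} {score:.2f}")
print("best:", best_name)
```

A real automated tool would search far more algorithms and parameters and would typically use cross-validation rather than a single held-out split, but the select-by-validation-score loop is the same basic pattern.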

Once the models are created, the tool can deploy them, test their success rate, and explain how they make their decisions. Sensitivity to different data fields can be tested and adjusted with the visual workflow editor.

Recent enhancements in RapidMiner include better text analytics, a greater variety of charts for building visual dashboards, and sophisticated algorithms to analyze time-series data.

RapidMiner is used widely in banking, manufacturing, oil & gas, automotive, life sciences, telecommunication, retail, and insurance. Some of the most popular RapidMiner products are:

Studio: A comprehensive data science platform with visual workflow design and full automation. Cost: $7,500 to $15,000 per user per year

Server: RapidMiner Server enables computation, deployment, and collaboration, enhancing the productivity of analytics teams

Radoop: RapidMiner Radoop eliminates the complexity of data science on Hadoop and Spark

Cloud: A cloud-based repository that enables easy sharing of information among different tools

DataRobot

DataRobot caters to data scientists at all levels and serves as a machine learning platform that helps them build and deploy accurate predictive models in less time. The platform trains and evaluates thousands of models in R, Python, Spark MLlib, H2O, and other open-source libraries. It uses multiple combinations of algorithms, pre-processing steps, features, transformations, and tuning parameters to deliver the best models for your datasets.

Features

- Accelerates AI use case throughput by increasing the productivity of data scientists and empowering non-data scientists to build, deploy, and maintain AI

- Provides an intuitive AI experience to the user, helping them understand predictions and forecasts

- Can be deployed in a private or hybrid cloud environment using AWS, Microsoft Azure, or Google Cloud Platform

Tableau

Tableau is a top-rated data visualization tool that turns raw data into an understandable format. Its features include a drag-and-drop interface that makes tasks like sorting, comparing, and analyzing data efficient.

Tableau is also compatible with multiple sources, including MS Excel, SQL Server, and cloud-based data repositories, making it a popular data science tool for non-programmers.

Features

- Connects to multiple data sources, visualizes massive data sets, and helps find correlations and patterns

- Tableau Desktop provides real-time updates

- Tableau’s cross-database join functionality lets you create calculated fields, join tables, and solve complex data-driven problems

- Visual analytics let users interact with data, get insights in less time, and make critical business decisions in real time

Minitab

Minitab is a software package used in data analysis. It helps you input statistical data, manipulate that data, identify trends and patterns, and extrapolate answers to existing problems. It is among the most popular software packages used by businesses of all sizes.

Minitab is intuitive and includes a wizard that helps you choose the most appropriate statistical tests.

Features

- Simplifies the data input for statistical analysis

- Manipulates the dataset

- Identifies trends and patterns

- Extrapolates answers to existing problems with products or services

Trifacta

Trifacta is often described as a secret weapon for data scientists. Its intelligent data preparation platform, powered by machine learning, is said to accelerate the overall data preparation process by around 90%. Trifacta is free, stand-alone software offering an intuitive GUI for data cleaning and wrangling.

Its visual interface also surfaces errors, outliers, and missing data without any additional steps.

Trifacta takes data as input and produces a summary with multiple statistics for each column. For every column, it automatically recommends some transformations.
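To make the idea of per-column profiling concrete, here is a small Python sketch that summarizes each column of a CSV and proposes simple cleanup steps. It only illustrates the concept and is not how Trifacta works internally. The sample data, the `profile` function, and the three suggestion rules are all hypothetical.

```python
import csv
import io
from statistics import mean

# Hypothetical sample data with typical problems: a missing value,
# stray whitespace, and a non-numeric entry in a numeric column.
SAMPLE = """name,age,city
Ana,34,Lisbon
Ben,,Berlin
 Cara ,29,
Dev,abc,Delhi
"""

def profile(rows):
    """Return per-column statistics and a list of suggested transformations."""
    report = {}
    for column in rows[0].keys():
        values = [row[column] for row in rows]
        missing = sum(1 for v in values if v.strip() == "")
        present = [v for v in values if v.strip() != ""]

        # Collect the entries that can be parsed as numbers.
        numeric = []
        for v in present:
            try:
                numeric.append(float(v))
            except ValueError:
                pass

        suggestions = []
        if missing:
            suggestions.append("fill or drop missing values")
        if any(v != v.strip() for v in present):
            suggestions.append("trim whitespace")
        if numeric and len(numeric) < len(present):
            suggestions.append("fix non-numeric entries")

        report[column] = {
            "missing": missing,
            "distinct": len(set(v.strip() for v in present)),
            "numeric_mean": mean(numeric) if numeric else None,
            "suggestions": suggestions,
        }
    return report

rows = list(csv.DictReader(io.StringIO(SAMPLE)))
report = profile(rows)
for column, stats in report.items():
    print(column, stats)
```

A visual tool presents the same kind of information as charts and clickable suggestions instead of printed dictionaries.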

Features

- Seamless data preparation across any cloud, hybrid, or multi-cloud environment

- Automated visual representations of data in a visual profile

- Intelligently assesses the data to recommend a ranked list of transformations

- Lets you deploy and manage self-service data pipelines in minutes

BigML

BigML eases the process of developing Machine Learning and Data Science models by providing readily available constructs. These constructs help with classification, regression, and clustering problems. BigML incorporates a wide range of Machine Learning algorithms. It helps build a robust model without much human intervention, which lets you focus on essential tasks such as improving decision-making.

Features

- Offers multiple ways to load raw data, including most Cloud storage systems, public URLs, or your own CSV/ARFF files

- A gallery of well-organized and free datasets and models

- Clustering algorithms and visualization for accurate data analysis

- Anomaly detection

- Flexible pricing

MLbase

MLbase is an open-source tool used for creating large-scale Machine Learning projects. It addresses the problems faced while hosting complex models that require high-level computations.

MLbase consists of three main components:

ML Optimizer – It automates the Machine Learning pipeline construction

MLI – An experimental API focused on feature extraction and algorithm development for high-level ML programming abstractions

MLlib – ML library of Apache Spark

Features

- A simple GUI for developing Machine Learning models

- Tests the data on different learning algorithms to detect the best accuracy

- Simple and easy to use, for both programmers and non-programmers

- Efficiently scales large, convoluted projects

Google Cloud AutoML

Google Cloud AutoML is a platform for training high-quality custom machine learning models with minimal effort and limited machine learning expertise. It lets you build predictive models that can outperform many traditional computational models.

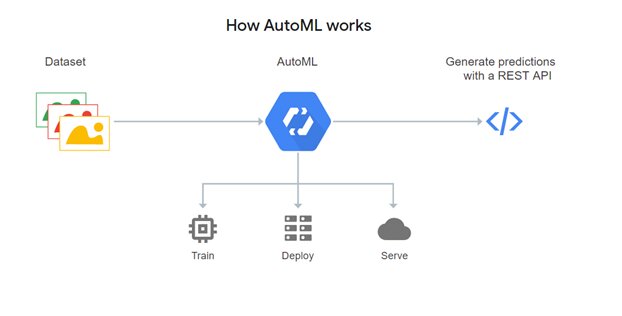

Fig – How Google AutoML works?

Features

- Uses a simple GUI to train, evaluate, improve, and deploy models based on the available data

- Generates high-quality training data

- Automatically builds and deploys state-of-the-art machine learning models on structured data

- Dynamically detects and translates between languages

Datawrapper

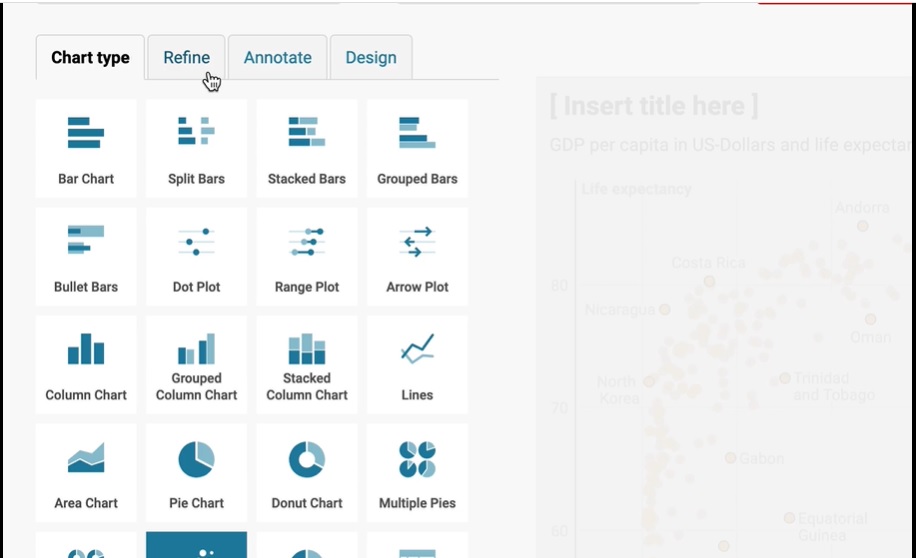

Datawrapper is an open-source web tool that allows the creation of basic interactive charts. Datawrapper requires you to load your CSV dataset to create pie charts, line charts, bar charts (horizontal and vertical), and maps that can be easily embedded onto a website.

Features

- Datawrapper does not require any design or programming knowledge

- All you need to work with Datawrapper is your data

- Datawrapper takes care of choosing an inclusive color palette

- Lets you choose from multiple chart and map types and insert annotations

Fusioo

Fusioo is a collaborative online database that helps teams build their own custom databases. It allows users to collect, track, and manage their data for numerous applications like project management, Customer Relationship Management (CRM), task assignment, workload management, or issue tracking without writing any code.

Features

- API

- Access Controls/Permissions

- Alerts/Notifications

- Application Management

- Client Portal

- Collaboration Tools

- Configurable Workflow

- Customer Database

- Dashboard Creation

- Data Import/Export

- Data Storage Management

- Data Visualization

Google’s Prediction API

Google Prediction API offers machine learning as a service. It learns from the user's training data, allowing users to train prediction models and execute queries against them. It can predict a numeric or categorical value derived from the training data provided. It involves the following machine learning model types:

- Association Model

- Clustering Model

- General Regression Model

- Mining Model

- Naïve Bayes Model

- Neural network

- Regression model

- Rule Set Model

- Sequence model

- Support Vector Machine Model

- Text Model

- Time Series Model

- Tree Model
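The train-then-query pattern described above can be sketched in a few lines of Python. The example below trains a tiny Naive Bayes text classifier (one of the model types listed) and then queries it. It is an illustrative sketch only and does not use or reproduce the Prediction API's actual interface. The training examples and the `train` and `predict` functions are made up for this illustration.

```python
import math
from collections import Counter, defaultdict

def train(examples):
    """Count words per label; returns the pieces needed for prediction."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = {word for counts in word_counts.values() for word in counts}
    return word_counts, label_counts, vocab

def predict(model, text):
    """Return the most probable label using Naive Bayes with add-one smoothing."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in label_counts.items():
        score = math.log(n / total)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            if word in vocab:  # ignore words never seen in training
                score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical training data: (text, label) pairs.
TRAINING = [
    ("win a free prize now", "spam"),
    ("free money claim your prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday with the team", "ham"),
]

model = train(TRAINING)
print(predict(model, "claim your free prize"))
print(predict(model, "team meeting on monday"))
```

A hosted service wraps these two steps behind web requests: one call uploads the training data and builds the model, and later calls send new inputs and receive predictions.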

Features

- Predicts future trends from historical data sets

- Detects spam emails

- Recommends products and services based on users’ interests

- Detects smartphone activities of users

- Identifies defaulters based on their credit history

KNIME

KNIME, or Konstanz Information Miner, is a tool for large-scale data processing. It is used mainly for analyzing organizational big data. Built on the Eclipse platform, it is extremely flexible and powerful.

Features

- Creates visual workflows: intuitive, drag-and-drop graphical interface

- Combines tools from different domains with native KNIME nodes in a single workflow, including scripting in R and Python, machine learning, and connectors to Apache Spark

- Numerous highly intuitive and easy-to-implement data manipulation tools

- Well-documented, stepwise workflows

- Optimized to handle large volumes of data comfortably

- Access to a public repository within the application that allows you to view hundreds of examples with datasets

IBM Watson Studio

Watson is IBM's artificial intelligence platform. It lets you apply AI tools to your data regardless of where it is hosted, be it on IBM Cloud, Azure, or AWS. The platform integrates data governance, making it easier to find, prepare, understand, and use information. You can extract and classify important information, such as keywords, sentiment, emotions, and semantic roles, from text and conversations.

Features

- AutoAI for faster experimentation

- Advanced data refinery

- Open source notebook support

- Integrated visual tooling

- Model training and development

- Extensive open-source frameworks

- Embedded decision optimization

- Model management and monitoring

- Model risk management

In conclusion, these machine learning and data science tools help non-programmers manage their raw and unstructured data and draw sound conclusions from it. To sharpen your data science skills further, you can take up any relevant data science e-course.

Courses on Data Science Tools

- Mastering Data Science and Machine Learning Fundamentals

- Data Science: Machine Learning

- Programming for Data Science with Python

- Machine Learning

- Machine Learning for Data Science and Analytics

- Google Cloud Platform Big Data and Machine Learning Fundamentals

- Python Basics for Data Science

FAQs

How should I pursue a career in Machine Learning?

To pursue a career in machine learning, you need to be skilled in a programming language such as Python or R, as well as in mathematics, data analysis tools, and database management. It is usually advisable to start by learning the fundamentals of data analysis with a programming language, then gradually move into machine learning.

Which are the Top Platforms to Learn Data Science and Machine Learning?

Some popular platforms are Coursera, Udemy, edX, Kaggle, DataCamp, Udacity, Edureka, etc.

What are the best resources to learn how to use Python for Machine Learning and Data Science?

You can learn Python through tutorials that cover concepts from beginner to advanced levels, and many eLearning portals provide them. Other options include books, MOOCs, paid classroom courses, YouTube, and hands-on work with live applications. However, you cannot become a good data scientist by learning Python alone. You should master data science with Python, which covers Python programming, database technologies, a solid grasp of mathematics and statistical principles, and machine learning with Python. You should also be good at information retrieval.

Rashmi is a postgraduate in Biotechnology with a flair for research-oriented work and has an experience of over 13 years in content creation and social media handling. She has a diversified writing portfolio and aim...