All that You Need to Know About Logistic Regression

Logistic Regression is a supervised machine-learning model that is used for classification problems. By classification, we mean that this model allows us to classify a set of input variables or input features into target values. In this article, we will learn what logistic regression is, why it is called regression, and what its types are, and then walk through an example to see how it can be implemented.

It is so cool to predict the future! We can do the same if we have the right set of past data with known outcomes, which is also known as the ground truth. Suppose you have data on temperature, humidity, wind, and cloud cover for a location, and you want to predict if it will rain in the upcoming few days. Temperature, humidity, wind, and cloud cover are your independent variables, and rain is the dependent variable. It is a classification problem, since you will predict whether it will rain or not, meaning the outcome will be either 0 or 1. All the independent variables that you are using are continuous in nature. Hence, there must be some way to turn a set of continuous independent variables into a probability and then into a class. This is where we use Logistic Regression.

Logistic Regression is a supervised machine learning model, which means we provide both the inputs and their corresponding outputs while training. Let us learn about Logistic Regression in detail.

What is Logistic Regression

Logistic Regression is a supervised machine-learning model used for classification problems: it assigns a set of input features to one of the target classes. Rather than returning a class directly, it outputs a probability that lies between 0 and 1. The input features can be continuous or discrete.

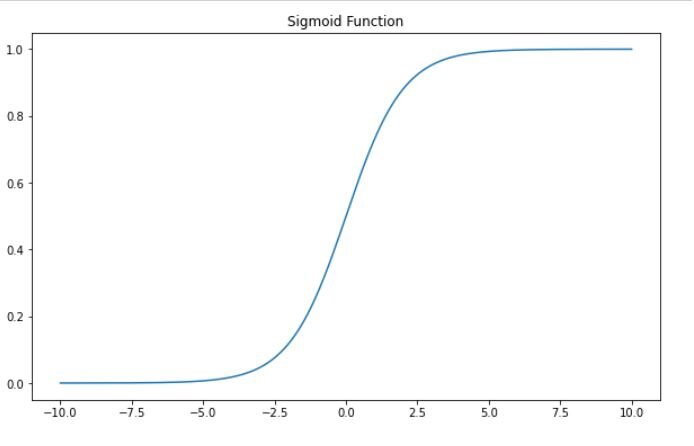

To turn this range of output values into classes, Logistic Regression uses an S-shaped logistic function (also called the Sigmoid function) together with a threshold, which is generally set to 0.5. Any output probability below 0.5 is marked as 0, and any value from 0.5 to 1 is marked as 1. This is how we classify using Logistic Regression.
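The thresholding step described above can be sketched in a few lines of NumPy. The helper names `sigmoid` and `classify` and the sample scores are illustrative assumptions, not part of any library.

```python
import numpy as np

def sigmoid(t):
    """Map any real-valued score to a probability between 0 and 1."""
    return 1.0 / (1.0 + np.exp(-t))

def classify(probabilities, threshold=0.5):
    """Label a probability 1 when it reaches the threshold, else 0."""
    return (np.asarray(probabilities) >= threshold).astype(int)

scores = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])  # made-up raw scores
probabilities = sigmoid(scores)
labels = classify(probabilities)

print(probabilities.round(3))
print(labels)
```

A score of exactly 0 maps to a probability of 0.5, which this sketch labels as 1, matching the rule that values from 0.5 upward belong to class 1.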

This is what the Sigmoid function looks like –

The output of the logistic function approaches 1 at the upper end and 0 at the lower end, which gives it the shape of an S. Logistic Regression assumes that the dependent variable is categorical in nature and that the independent variables do not exhibit multicollinearity.

Why Is It Called Regression?

It is difficult to predict a discrete variable using the Linear Regression model. Suppose you want to predict if the mail received should be classified as spam or not. You will end up with a graph like this –

Thus we can see that the line does not fit the data points. If we have an outlier in our dataset, then the prediction line or the best fit line will get shifted considerably. It will look something like this –

It is marking some of the spam mails as not-spam or the other way round, which is incorrect. Hence, we need some way to make the correct prediction. Logistic Regression comes to the rescue at this point, as it can deal with such outliers much better. The function used in Logistic Regression is given as –

$$\sigma(t) = \frac{1}{1 + e^{-t}}$$

Here t is the linear function that we commonly see in linear regression, that is, t = c + MX. This linear function is passed through the sigmoid function, which converts it into a value lying within the range of 0 to 1. So, no matter how extreme an input value is or how many outliers there are, the output always stays within the range of 0 to 1. That is why it is called Logistic Regression: the model still fits a regression-style linear function, and the logistic (sigmoid) function turns that linear output into a probability. The logit function is the inverse of the sigmoid; it maps a probability back to t.
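To make the comparison with linear regression concrete, here is a minimal sketch on made-up one-dimensional data with a single extreme point that lies on the correct side of the classes. The data values and variable names are assumptions chosen for illustration; it uses scikit-learn's `LinearRegression` and `LogisticRegression`.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression

# Made-up 1-D data: label 0 for x = 1..4, label 1 for x = 5..8,
# plus one extreme point at x = 100 that also has label 1.
X = np.array([1, 2, 3, 4, 5, 6, 7, 8, 100], dtype=float).reshape(-1, 1)
y = np.array([0, 0, 0, 0, 1, 1, 1, 1, 1])

# A straight line fitted to the 0/1 labels, then cut at 0.5.
linear = LinearRegression().fit(X, y)
linear_labels = (linear.predict(X) >= 0.5).astype(int)

# Logistic regression fitted to the same data.
logistic = LogisticRegression(max_iter=1000).fit(X, y)
logistic_labels = logistic.predict(X)

print("true    :", y)
print("linear  :", linear_labels)
print("logistic:", logistic_labels)
```

With these numbers the extreme point flattens the straight line, so its 0.5 crossing moves a little past x = 6 and the points at x = 5 and x = 6 end up labelled 0. The logistic fit is barely affected because a point far on the correct side contributes almost nothing to its loss. An outlier with the wrong label would still influence both models.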

As per the equation t = c + MX, with M and c held constant and M positive, a higher value of the independent variable X gives a higher value of t. Then e is raised to a larger negative power, so e^(-t) becomes very small, the denominator 1 + e^(-t) approaches 1, and the sigmoid output approaches 1. Conversely, for lower (more negative) values of t, e^(-t) becomes very large and the sigmoid output approaches 0. Hence, we get an S shape.
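The behaviour described above can be checked numerically. This is a small sketch that assumes c = 0 and M = 1 purely for illustration; any positive M shows the same pattern.

```python
import math

def sigmoid(t):
    """Logistic function: 1 / (1 + e^(-t))."""
    return 1.0 / (1.0 + math.exp(-t))

c, M = 0.0, 1.0  # assumed intercept and slope, chosen only for illustration
inputs = (-5, -2, 0, 2, 5)

for X in inputs:
    t = c + M * X
    print(f"X = {X:>2}  t = {t:>4.1f}  e^-t = {math.exp(-t):8.4f}  sigma(t) = {sigmoid(t):.4f}")

# Sigmoid outputs in the same order as the inputs
values = [sigmoid(c + M * X) for X in inputs]
```

As X grows, e^(-t) shrinks towards 0 and the output climbs towards 1; as X falls, e^(-t) grows and the output drops towards 0, with 0.5 exactly in the middle at t = 0.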

Types Of Logistic Regression

There are three types of Logistic Regression, based on the target variable –

- Binomial – When the target variable can have only two possible values, such as 0 or 1, yes or no, or true or false. For example, a logistic regression classifying a mail as spam or not spam is binomial.

- Multinomial – When the target variable has more than two possible values, and the values are not in any order. For example, a logistic regression that classifies fruits on the basis of their characteristics. There can be many kinds of fruit, and they do not follow any order.

- Ordinal – When the target variable has more than two possible values, and the values are in order. For example, a logistic regression that classifies a disease as highly dangerous, dangerous, moderate, or low risk. There are multiple classes, and they are ordered by risk.
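The binomial and multinomial cases can be sketched with scikit-learn's `LogisticRegression`. The example below uses the bundled iris flower dataset as a stand-in for the fruit example (an assumption made so that the code runs without extra files). The ordinal case is left out, since this estimator treats classes as unordered.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)

# Binomial: keep only two of the three species (labels 0 and 1).
two_class = y < 2
binary_model = LogisticRegression(max_iter=1000).fit(X[two_class], y[two_class])

# Multinomial: all three species, which have no natural order.
multi_model = LogisticRegression(max_iter=1000).fit(X, y)

print(binary_model.classes_)   # classes seen by the binomial model
print(multi_model.classes_)    # classes seen by the multinomial model

# One probability per class for the first flower; the row sums to 1.
first_row_proba = multi_model.predict_proba(X[:1])
print(first_row_proba.round(3))
```

In the multinomial case the model returns one probability per class and the predicted class is the one with the highest probability.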

Code For Logistic Regression

Let us dive deeper into implementing Logistic Regression. We will build a project with the famous Titanic dataset and predict the survival of a group of passengers. So basically, we are going to classify who will survive and who will not, based on several independent input features. Let us start coding –

Importing all the necessary libraries we will use –

    import numpy as np
    import pandas as pd
    import seaborn as sns
    from sklearn.linear_model import LogisticRegression
    from sklearn.metrics import accuracy_score
    from sklearn.model_selection import train_test_split  # needed for the validation split later

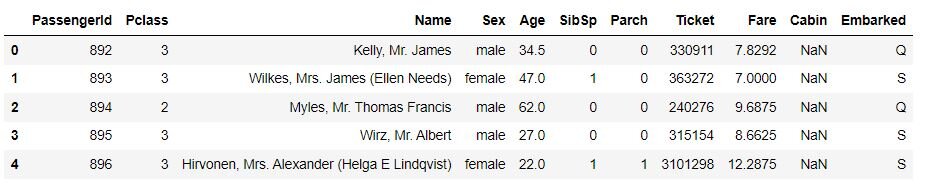

Importing the Titanic dataset. Here, the data is already divided into a training dataset (train.csv) and a testing dataset (test.csv). We will build and validate our model using train.csv, which contains the target variable. We will then run the model on the unseen data in test.csv, which does not contain the target variable. Let us look at the data for a clearer understanding –

    dataset = pd.read_csv('train.csv')
    dataset.head()

    testset = pd.read_csv('test.csv')
    testset.head()

Thus, the column named Survived in the dataset data frame is our target variable and this column is not present in the test set data frame.

Let’s check the shape of the dataset and test set –

    dataset.shape
    Output – (891, 12)

    testset.shape
    Output – (418, 11)

So we can see that the dataset has 12 columns and the testset has 11 columns. The Survived column, which is our target variable, is not present in the testset.

Let’s drop some of the unwanted columns from both the dataframes –

    unwanted = ['Name', 'Ticket', 'Pclass', 'Embarked', 'Cabin', 'PassengerId']
    dataset.drop(unwanted, inplace=True, axis=1)
    testset.drop(unwanted, inplace=True, axis=1)

Now, let’s check the survival rate as per gender,

sns.countplot(x ='Survived',hue = 'Sex',data = dataset);

Thus, females have a higher survival rate and males have a higher death rate.

As gender is given as a categorical variable, we will convert it into numbers for both datasets –

    sex = {'male': 0, 'female': 1}
    dataset.Sex = dataset.Sex.replace(sex)
    testset.Sex = testset.Sex.replace(sex)

Now we will check for null values and handle any that we find –

dataset.info()

So the Age column has some missing values. We will replace those missing values with the average age and convert the column to integers.

    dataset.Age = dataset.Age.fillna(dataset.Age.mean()).astype(int)

    # Converting the Fare column to integer
    dataset.Fare = dataset.Fare.astype(int)

    # Repeating similar steps for testset.
    # Missing fares are filled before the integer conversion, because astype(int) fails on missing values.
    testset.Age = testset.Age.fillna(testset.Age.mean()).astype(int)
    testset.Fare = testset.Fare.fillna(0).astype(int)
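The missing-value handling can be tried on a tiny made-up data frame before running it on the real files. The `toy` frame and its values are assumptions for illustration only.

```python
import numpy as np
import pandas as pd

# Made-up rows standing in for the Age and Fare columns.
toy = pd.DataFrame({
    "Age": [22.0, np.nan, 35.0, 58.0],
    "Fare": [7.25, 71.28, np.nan, 8.05],
})

print(toy.isnull().sum())  # number of missing values per column

# Fill first, then convert: astype(int) raises an error on missing values.
toy["Age"] = toy["Age"].fillna(toy["Age"].mean()).astype(int)
toy["Fare"] = toy["Fare"].fillna(0).astype(int)

print(toy)
```

Note that converting to integers truncates the decimals, so a fare of 7.25 becomes 7. That matches the approach in this walkthrough but does discard some information.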

    # Remove the Survived column from the training data,
    # so that x_train has every column except Survived
    x_train = dataset.drop(['Survived'], axis=1)

    # Survived is our target variable, so we assign it to y_train
    y_train = dataset['Survived']

    # Split the data into a training set and a validation set
    x_train, x_test, y_train, y_test = train_test_split(x_train, y_train, test_size=0.20, random_state=0)

    # Create the model
    model = LogisticRegression()

    # Fit the model
    model.fit(x_train, y_train)

    # Predict on the validation set
    prediction = model.predict(x_test)

    # Check the accuracy of the model
    accuracy_score(y_test, prediction)

Output – 0.776536312849162
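To see what `predict` and `accuracy_score` are doing, the sketch below repeats the same steps on synthetic data generated with scikit-learn's `make_classification`, so it runs without train.csv. The data and variable names are assumptions, and the accuracy it prints is unrelated to the Titanic result above.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in data with two classes (0 and 1).
X, y = make_classification(n_samples=500, n_features=5, random_state=0)
X_tr, X_val, y_tr, y_val = train_test_split(X, y, test_size=0.20, random_state=0)

clf = LogisticRegression(max_iter=1000).fit(X_tr, y_tr)

# Column 1 of predict_proba is the estimated probability of class 1.
proba_class1 = clf.predict_proba(X_val)[:, 1]

# Applying the 0.5 threshold by hand reproduces what predict returns.
manual_labels = (proba_class1 > 0.5).astype(int)

# Accuracy is the fraction of validation rows labelled correctly.
manual_accuracy = np.mean(manual_labels == y_val)

print(manual_accuracy)
print(accuracy_score(y_val, clf.predict(X_val)))
```

Working with `predict_proba` directly is useful when a threshold other than 0.5 suits the problem better, for example when missing a positive case is more costly than a false alarm.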

Now let's create another model for predicting on the testset, which does not have the Survived column. After making the predictions, we will add them to the testset as a new Survived column –

    lr = LogisticRegression()
    lr.fit(x_train, y_train)
    predictions_test = lr.predict(testset)

    # Attach the predictions row by row.
    # Merging on Age would duplicate rows for passengers who share the same age.
    result = testset.assign(Survived=predictions_test)
    result.head()

This is what we get in our prediction –

Thus, our classification model is complete. This is how we can use Logistic Regression for classifying a set of data.

Summary

Logistic Regression is easy to learn and yet a powerful model for classification. It is widely used in the medical sector for classifying diseases and for measuring their severity. With the help of observed data, which we call input features, we can get several insights into what the future may look like.

Logistic Regression copes well with datasets that contain extreme values in the input features, because the sigmoid function keeps every output within the range of 0 to 1, however large the input is. The model is also flexible, as it can take both discrete and continuous input features for predicting the target variable.

Contributed By: Nishi Paul
