How to Calculate the F1 Score in Machine Learning

The F1 score is an evaluation metric used to measure the performance of a classification model. Because it combines both precision and recall, it is well suited to imbalanced datasets. In this article, we will briefly cover what the F1 score is, its formula, and some worked examples.

Once you have built a model, the next step is to evaluate its performance. Machine learning offers several evaluation metrics, such as the confusion matrix, accuracy, F1 score, AUC-ROC, precision, and recall. This article discusses one of them: the F1 score.

Now, the important question is why we need an F1 score when we already have other evaluation metrics. The F1 score becomes especially important when the dataset is imbalanced, because a metric such as accuracy can look high even when the model performs poorly on the minority class.

So, without further delay, let's jump to what the F1 score is and how to calculate it.

What is the F1-Score?

The F1 score is an evaluation metric that combines two metrics, precision and recall, into a single value by taking their harmonic mean.

In simple terms, the F1 score is an average of precision and recall that, unlike the ordinary arithmetic mean, is pulled toward the lower of the two values.
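A minimal Python sketch of this definition, assuming precision and recall are already known as values between 0 and 1. The helper name `f1_from_precision_recall` and the input values are hypothetical; the standard library's `statistics.harmonic_mean` is used only as a cross-check.

```python
from statistics import harmonic_mean


def f1_from_precision_recall(precision: float, recall: float) -> float:
    """Return the F1 score, i.e. the harmonic mean of precision and recall."""
    if precision + recall == 0:
        # Assumed convention: if both components are 0, report an F1 of 0.
        return 0.0
    return 2 * precision * recall / (precision + recall)


precision, recall = 0.8, 0.6  # hypothetical values
print(round(f1_from_precision_recall(precision, recall), 4))  # 0.6857
print(round(harmonic_mean([precision, recall]), 4))           # 0.6857
print(round((precision + recall) / 2, 4))                     # 0.7 (arithmetic mean)
```

The F1 score (about 0.686) sits below the arithmetic mean (0.7) because the harmonic mean leans toward the smaller of the two values.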

- The F1 score is used in information retrieval.

- Search engines use it to evaluate how well they return relevant results to the user.

- It is also used in Natural Language Processing (NLP).

- Example: a Named Entity Recognition (NER) model, which identifies entities such as personal names and addresses in text, is commonly evaluated with the F1 score.

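F1 score Formula

The F1 score is calculated from precision and recall as:

- F1 score = 2 × (Precision × Recall) / (Precision + Recall)

Precision and recall are defined as follows.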

Precision: It is the ratio of the number of true positives to the sum of true positives and false positives. It measures how many of the instances the model predicted as positive are actually positive.

- Precision = True Positive (TP) / (True Positive (TP) + False Positive (FP))

Recall: It is the ratio of the number of true positives to the sum of true positives and false negatives. It represents the model's ability to find all the relevant cases in a dataset.

- Recall = True Positive (TP) / (True Positive (TP) + False Negative (FN))
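To make the two definitions concrete, here is a small Python sketch that computes precision and recall from raw counts. The function names and the counts (TP = 8, FP = 2, FN = 4) are hypothetical, and the sketch assumes the denominators are non-zero. It also checks that 2 × Precision × Recall / (Precision + Recall) simplifies to 2TP / (2TP + FP + FN), which is the same F1 score written directly in counts.

```python
def precision(tp: int, fp: int) -> float:
    """Share of predicted positives that are actually positive: TP / (TP + FP)."""
    return tp / (tp + fp)  # assumes tp + fp > 0


def recall(tp: int, fn: int) -> float:
    """Share of actual positives the model found: TP / (TP + FN)."""
    return tp / (tp + fn)  # assumes tp + fn > 0


def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """Algebraically equal to 2PR / (P + R), written directly in counts."""
    return 2 * tp / (2 * tp + fp + fn)


tp, fp, fn = 8, 2, 4  # hypothetical counts
p, r = precision(tp, fp), recall(tp, fn)
print(p, round(r, 4))                        # 0.8 0.6667
print(round(2 * p * r / (p + r), 4))         # 0.7273
print(round(f1_from_counts(tp, fp, fn), 4))  # 0.7273
```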

Note:

- The value of the F1 score ranges from 0 to 1.

- 0: Worst Case

- 1: Best Case

- It takes both false positives and false negatives into account, since precision is reduced by false positives and recall is reduced by false negatives.

- The F1 score is high only when both precision and recall are high, and low when both are low.

- If one value (precision or recall) is high and the other is low, the F1 score stays close to the lower value, because the harmonic mean penalizes this kind of imbalance.
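To see these situations side by side, the sketch below compares the F1 score with a plain arithmetic mean of precision and recall. The precision and recall values are made up for illustration.

```python
def f1(precision: float, recall: float) -> float:
    # Harmonic mean of precision and recall (0 if both are 0).
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


cases = {
    "both high": (0.9, 0.9),
    "both low": (0.2, 0.2),
    "one high, one low": (1.0, 0.1),
}
for name, (p, r) in cases.items():
    print(f"{name}: arithmetic mean={(p + r) / 2:.3f}, F1={f1(p, r):.3f}")
```

With precision 1.0 and recall 0.1, the arithmetic mean is 0.55 but the F1 score is only about 0.18, so perfect precision cannot make up for very poor recall.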

Now, let's work through some examples to get a better understanding.

Examples

Example – 1: Consider a binary confusion matrix with 560 true positives (TP), 60 false positives (FP), and 50 false negatives (FN), and find the corresponding F1 score.

Answer – 1: To calculate the F1 score, we first calculate precision and recall.

Precision = TP / (TP + FP) = 560 / (560 + 60) = 0.903

Recall = TP / (TP + FN) = 560 / (560 + 50) = 0.918

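Now, the F1 score will be:

F1 = 2 × (Precision × Recall) / (Precision + Recall) = 2 × (0.903 × 0.918) / (0.903 + 0.918) ≈ 0.91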
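The same calculation in Python, using the counts from Example 1 (TP = 560, FP = 60, FN = 50). This is only a sketch of the arithmetic; in practice the counts would come from your model's predictions.

```python
# Counts from Example 1.
tp, fp, fn = 560, 60, 50

precision = tp / (tp + fp)  # 560 / 620
recall = tp / (tp + fn)     # 560 / 610
f1 = 2 * precision * recall / (precision + recall)

print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
# precision=0.903 recall=0.918 f1=0.911
```

Computing from the unrounded precision and recall gives about 0.911; plugging in the rounded values 0.903 and 0.918 gives about 0.910, so both round to 0.91.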

In the above example, we considered a binary classifier; now we will take an example of a multiclass classifier.

Example – 2: Consider the multiclass confusion matrix below, which contains 200 samples.

Answer – 2:

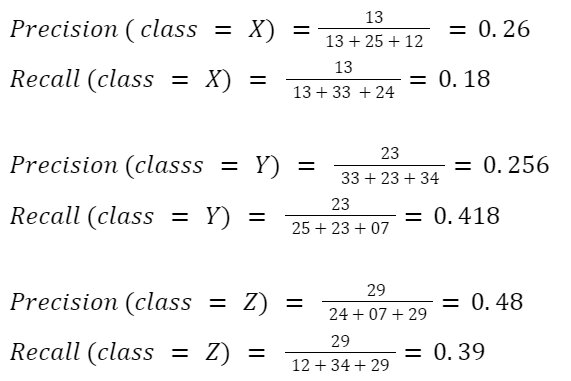

First, we find the precision and recall for each class.

Now, we can calculate the F1 score for each class:
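Because the matrix for Example 2 is only available as an image, the sketch below uses a hypothetical 3-class confusion matrix (also 200 samples) to show the per-class procedure. It assumes rows are actual classes and columns are predicted classes; for each class k, the diagonal cell is TP, the rest of column k is FP, and the rest of row k is FN (a one-vs-rest view). The function name `per_class_scores` is illustrative.

```python
# Hypothetical 3-class confusion matrix with 200 samples (NOT the matrix from
# Example 2). Assumed layout: rows = actual class, columns = predicted class.
matrix = [
    [50, 5, 5],
    [10, 60, 10],
    [0, 10, 50],
]


def per_class_scores(cm):
    """Return (precision, recall, f1) for each class using one-vs-rest counts."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        fn = sum(cm[k]) - tp                       # actually k, predicted another class
        p = tp / (tp + fp)
        r = tp / (tp + fn)
        scores.append((p, r, 2 * p * r / (p + r)))
    return scores


for k, (p, r, f) in enumerate(per_class_scores(matrix)):
    print(f"class {k}: precision={p:.3f} recall={r:.3f} f1={f:.3f}")
```

For this hypothetical matrix, class 1 ends up with the lowest F1 (about 0.774) because its recall is only 0.75.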

If you want to see how the F1 score is computed in Python on a real dataset, check our blog: Precision and Recall.

Conclusion

The F1 score is an evaluation metric used to measure the performance of a classification model. Because it combines both precision and recall, it is well suited to imbalanced datasets. In this article, we have briefly covered what the F1 score is, its formula, and worked examples.

We hope this article has cleared up your doubts about the F1 score.

FAQs

What is the F1 score?

The F1 score is an evaluation metric that combines two metrics, precision and recall, into a single value by taking their harmonic mean. In simple terms, it is an average of precision and recall that is pulled toward the lower of the two.

How to calculate the F1 score?

The F1 score is the harmonic mean of precision and recall. Its formula is: F1 = (2 × Precision × Recall) / (Precision + Recall)

How is the F1 score better than accuracy?

Accuracy is the ratio of correctly classified instances to the total number of instances. It is a useful metric for evaluating classification models, but it can be misleading when the classes are imbalanced, because a model can achieve high accuracy simply by predicting the majority class. In contrast, the F1 score is the harmonic mean of precision and recall, which both focus on the positive class, so it remains informative when the classes are imbalanced.
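A small illustration of this point, using a hypothetical test set of 95 negatives and 5 positives and a trivial model that always predicts the negative class:

```python
# Hypothetical imbalanced test set: 95 negatives (0), 5 positives (1).
y_true = [0] * 95 + [1] * 5
# A trivial "model" that always predicts the majority (negative) class.
y_pred = [0] * 100

tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
# Count form of F1, 2TP / (2TP + FP + FN); it avoids dividing by zero when the
# model never predicts the positive class (TP + FP = 0).
f1 = 2 * tp / (2 * tp + fp + fn)

print(accuracy, f1)  # 0.95 0.0
```

Accuracy reports 95%, while the F1 score of 0 shows that the model never finds a single positive case.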

What are the limitations of the F1 score?

The F1 score gives equal weight to precision and recall, so it is not advised when false positives and false negatives have different costs. Also, in multiclass problems where one class dominates the dataset, an F1 score aggregated across all classes can be heavily influenced by that class.
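The F1 score weights false positives and false negatives equally, which is why it can be a poor fit when the two kinds of error have different costs. Written in counts, F1 = 2TP / (2TP + FP + FN), so swapping FP and FN leaves the score unchanged, as this sketch with hypothetical counts shows:

```python
def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    # F1 in count form: 2TP / (2TP + FP + FN). FP and FN enter symmetrically.
    return 2 * tp / (2 * tp + fp + fn)


# Hypothetical counts: a model that over-predicts vs. one that under-predicts.
over = f1_from_counts(tp=40, fp=30, fn=10)   # many false alarms
under = f1_from_counts(tp=40, fp=10, fn=30)  # many missed positives
print(round(over, 3), round(under, 3), over == under)  # 0.667 0.667 True
```

If missing a positive case is far more costly than raising a false alarm (or the reverse), these two models are not equally good, yet their F1 scores are identical.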