Web Crawler – What It Is and How It Works

The internet is vast and ever-expanding, with billions of pages of data available at the click of a button. Search engines such as Google, Yahoo, and Bing help us find the data we need. But have you ever wondered how exactly they accomplish this, or how they figure out where to look? The answer is the web crawler.

But what exactly is a web crawler, and how does it work? This article answers both questions and covers the essentials of web crawlers. Before diving deeper, let’s quickly look at the topics we will cover.

Table of Contents (TOC)

- What is a Web Crawler?

- How Does a Web Crawler Work?

- Web Crawler Examples

- Top Ten Web Crawler Tools

- Why Web Crawlers Matter for SEO

- How do web crawlers handle different types of content, such as images and videos?

- Conclusion

What is a Web Crawler?

A web crawler is a bot that searches and indexes content on the internet.

In layman’s terms, a web crawler is a program that visits websites and reads the content on their pages in order to create a searchable index for a search engine. A web crawler is also commonly known as a web spider.

Various search engines, such as Google, Yahoo, Bing, etc., use web crawlers in order to build their index of the web and to keep it updated. A web crawler also helps to discover new pages and websites, monitor the availability and performance of websites, discover broken links, gather data for market research, and much more.

If you don’t want your website to be crawled by search engines, you can tell web crawlers not to crawl it by placing a robots.txt file at the root of your site. (A robots.txt file tells crawlers which pages or sections of your site they may or may not crawl. Well-behaved crawlers follow it, but it is a request rather than an enforcement mechanism.)

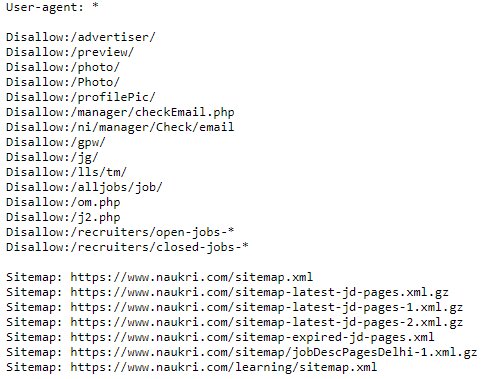

For a better understanding, consider the following Naukri.com/robots.txt file example:

Note: A sitemap is an XML file that lists the pages on a website that you want crawlers to find and access.
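To make the robots.txt idea concrete, here is a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt content, the `example.com` URLs, and the `MyCrawler` user-agent name are all made up for illustration; they are not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, written for this example only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

Sitemap: https://example.com/sitemap.xml
"""


def build_parser(robots_text: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A polite crawler asks before fetching each URL.
print(parser.can_fetch("MyCrawler", "https://example.com/blog/post"))
print(parser.can_fetch("MyCrawler", "https://example.com/private/data"))

# Sitemap URLs declared in the file (site_maps() needs Python 3.8+).
print(parser.site_maps())
```

A real crawler would download the file from the site's `/robots.txt` path first; the parsing and the `can_fetch` check would stay the same.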

How Does a Web Crawler Work?

Web crawlers work by following a set of steps to visit and index the pages on a website. Here’s a high-level overview of the steps that web crawlers typically follow:

- The web crawler starts with a list of URLs, known as seed URLs, that it should visit first. These seed URLs typically come from the search engine's own list of known pages or from URLs and sitemaps submitted by website owners.

- The web crawler retrieves the HTML code of the first seed URL and evaluates it to find any links to other pages on the website.

- As the web crawler visits each page, it indexes the content of that page, such as the text, images, and videos, so that it can be searched later on. It also adds the URLs of the pages it finds to a queue of URLs to visit next.

- Web crawlers continue to visit each URL in the queue, retrieving the HTML code, evaluating it, indexing the content, and adding any new URLs to the queue.

- The web crawler stops at some point, for example when a time limit is reached or the queue is empty. The URLs and the indexed content are then stored in a database.

- The indexed content can then be searched for specific keywords or phrases, or used to build the searchable index of a search engine.

- Periodically, the web crawler revisits the website to look for updates and new pages, so the indexed information stays up to date.
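The steps above can be sketched as a small breadth-first crawl loop. This is a simplified illustration, not how any particular search engine implements crawling: the `FAKE_WEB` dictionary and the `fetch` function are hypothetical stand-ins for real HTTP requests, and "indexing" here just means storing the raw HTML.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin

# Hypothetical in-memory "web" so the sketch runs without network access.
FAKE_WEB = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<p>About us</p> <a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1">Post 1</a>',
    "https://example.com/blog/post-1": "<p>First post</p>",
}


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch(url):
    """Stand-in for an HTTP GET; returns None for unknown pages."""
    return FAKE_WEB.get(url)


def crawl(seed_urls, max_pages=100):
    """Visit pages breadth-first, starting from the seed URLs."""
    queue = deque(seed_urls)   # URLs waiting to be visited
    seen = set(seed_urls)      # URLs already queued, to avoid repeats
    index = {}                 # url -> stored page content
    while queue and len(index) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # page could not be retrieved
        index[url] = html
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and drop any #fragment part.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return index


pages = crawl(["https://example.com/"])
print(list(pages))
```

The `max_pages` limit plays the role of the stopping condition, and the `seen` set keeps the crawler from visiting the same URL twice. A production crawler would add politeness delays, robots.txt checks, and error handling on top of this loop.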

Web Crawler Examples

Here are some of the most popular web crawlers:

- Slurp (Yahoo)

- Bingbot (Bing)

- Googlebot (Google)

- Amazonbot (Amazon)

- DuckDuckBot (DuckDuckGo)

Top Ten Web Crawler Tools

Here is the list of the top ten web crawler tools:

- Octoparse

- 80legs

- Parsehub

- Spinn3r

- Scraper

- VisualScraper

- Scrapinghub

- WebHarvy

- Helium Scraper

- HTTrack

Why Web Crawlers Matter for SEO

Web crawlers play a crucial role in search engine optimization (SEO). Here are some of the reasons:

- Indexing: Search engines use web crawlers to discover and index new web pages. Without web crawlers, search engines would not be able to discover new pages, and those pages would not appear in search engine results pages (SERPs).

- Analyzing content: Web crawlers analyze the content of web pages to understand their relevance and value. Various search engines use this information to determine how relevant a page is to a specific query and to rank it in SERPs.

- Identifying technical issues: Web crawlers can also help search engines identify technical issues on a website, such as broken links, duplicate content, slow page load times, etc.
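As a rough illustration of the last point, the sketch below flags exact duplicate content and broken links from data a crawler has already collected. The function names and sample data are hypothetical, and the duplicate check only catches pages whose text is identical after normalizing case and whitespace; real search engines use far more sophisticated techniques.

```python
import hashlib
from collections import defaultdict


def content_fingerprint(text):
    """Hash the page text after normalizing case and whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Group URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


def find_broken_links(status_by_url):
    """Treat any HTTP status of 400 or above as a broken link."""
    return sorted(url for url, status in status_by_url.items() if status >= 400)


# Hypothetical crawl results.
pages = {"/a": "Hello   World", "/b": "hello world", "/c": "Something else"}
statuses = {"/a": 200, "/old-page": 404, "/api": 500}

print(find_duplicates(pages))
print(find_broken_links(statuses))
```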

Website owners can optimize their sites to be more easily crawled and understood by search engines, thus achieving better visibility in SERPs. However, you should remember that crawlability is only one of many factors that search engines take into account when ranking a website in SERPs. Hence, having a web crawler-friendly website isn’t enough on its own.

How do web crawlers handle different types of content, such as images and videos?

Web crawlers handle different types of content, such as images and videos, in different ways.

Let’s first cover how web crawlers handle images:

When a web crawler encounters an image on a webpage, it can download the image file. Once the file is downloaded, the crawler extracts any metadata associated with it, such as the file name, file size, and dimensions. Some web crawlers may also analyze the image using image recognition techniques to extract additional information, such as text contained within the image.
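Here is a minimal sketch of that metadata step for one format, PNG, using only the standard library. The `image_metadata` function and the sample URL are hypothetical. The width and height are read from the IHDR chunk that follows the PNG signature; other image formats store their dimensions differently and are not handled here.

```python
import struct
from pathlib import PurePosixPath
from urllib.parse import urlparse

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def image_metadata(url, data):
    """Return file name, size, and (for PNG data) pixel dimensions."""
    meta = {
        "file_name": PurePosixPath(urlparse(url).path).name,
        "file_size": len(data),
    }
    # PNG layout: 8-byte signature, 4-byte chunk length, b"IHDR",
    # then width and height as big-endian unsigned 32-bit integers.
    if len(data) >= 24 and data[:8] == PNG_SIGNATURE and data[12:16] == b"IHDR":
        meta["width"], meta["height"] = struct.unpack(">II", data[16:24])
    return meta


# Only the first 24 bytes of a PNG are built here, enough to carry the
# dimensions; this is not a complete, viewable image file.
sample = PNG_SIGNATURE + struct.pack(">I", 13) + b"IHDR" + struct.pack(">II", 640, 480)
print(image_metadata("https://example.com/img/logo.png", sample))
```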

Let’s now explore how web crawlers handle videos:

When a web crawler encounters a video on a webpage, it can download the video file. Once the file is downloaded, the crawler extracts any metadata associated with it. The web crawler may also analyze the video to extract additional information, such as the video format and resolution.

Web crawlers differ in their capabilities and in the types of content they are configured to collect. As a result, it is important to remember that not all web crawlers can handle all types of content.

For example, a simple web crawler might not be able to handle multimedia files or might ignore them. Hence, it is important to make sure that the web crawler you use has the appropriate capabilities for your needs.
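One simple way a crawler might decide how to treat a URL is to guess its content type from the file extension, as sketched below with Python's standard `mimetypes` module. The `classify_url` function and its category names are assumptions made for this example. Real crawlers usually rely on the `Content-Type` header of the HTTP response, with extension guessing only as a fallback.

```python
import mimetypes


def classify_url(url):
    """Roughly classify a URL by the MIME type guessed from its extension."""
    mime_type, _encoding = mimetypes.guess_type(url)
    if mime_type is None:
        return "unknown"  # no recognizable extension
    if mime_type == "text/html":
        return "page"
    top_level = mime_type.split("/")[0]
    return top_level if top_level in {"image", "video"} else "other"


for url in [
    "https://example.com/index.html",
    "https://example.com/photo.jpg",
    "https://example.com/clip.mp4",
    "https://example.com/about",
]:
    print(url, classify_url(url))
```

A simple crawler could use a check like this to skip anything that is not classified as a page, while a more capable one could route images and videos to dedicated handlers.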

Conclusion

In this article, we have explored what web crawlers are, how they work, why they matter for SEO, and how they handle different types of content. If you have any queries related to the topic, please feel free to leave a comment. We will be happy to help.

Happy learning!!

Anshuman Singh is an accomplished content writer with over three years of experience specializing in cybersecurity, cloud computing, networking, and software testing. Known for his clear, concise, and informative wr...